Ensuring Data Integrity in Lipidomics: A Comprehensive Guide to Quality Control Samples and Sequence Design

Abstract

This article provides a complete framework for implementing robust quality control (QC) strategies in lipidomics workflows. Tailored for researchers and drug development professionals, it covers the foundational role of QC samples, practical methodologies for their integration into analytical sequences, advanced troubleshooting techniques, and rigorous validation protocols. By synthesizing current best practices and addressing common pitfalls, this guide empowers scientists to achieve high reproducibility, accuracy, and reliability in their lipidomic data, which is crucial for meaningful biological interpretation and biomarker discovery.

The Critical Role of Quality Control Samples in Lipidomics: Foundations for Reliable Data

In mass spectrometry-based lipidomics, the reliability of data is paramount. Quality Control (QC) samples are indispensable tools for monitoring analytical performance, detecting technical variability, and ensuring that the biological results obtained are accurate and reproducible. Within a typical lipidomics workflow, QC samples are analyzed repeatedly throughout the acquisition sequence alongside the study samples. This allows researchers to track system stability, correct for instrumental drift, and filter out unreliable measurements during data pre-processing. The strategic use of QC samples is a critical component of quality assurance, forming the foundation for confident biomarker discovery and biological interpretation [1].

This application note details three core types of QC samples used in lipidomics: Pooled QC (PQC) samples, surrogate QC (sQC) samples, and Long-Term Reference (LTR) materials. We define each type, outline their preparation, and evaluate their performance based on a recent large-scale cohort study. Furthermore, we provide a detailed protocol for implementing these QC strategies, enabling robust and reproducible lipidomic analysis.

Defining and Comparing QC Sample Types

Core Definitions and Characteristics

- Pooled QC (PQC): The gold standard for quality control, a PQC is created by combining equal aliquots from every biological sample within a study. This results in a homogeneous QC material that is chemically representative of the entire sample cohort. Its composition mirrors the average lipid profile of the study, making it ideal for monitoring analytical variation specific to that project [2] [3] [4].

- Surrogate QC (sQC): An externally sourced, matrix-matched commercial material used as an alternative to a PQC. sQCs are not derived from the study samples themselves but are designed to mimic the general matrix, such as human plasma or serum. Their commercial availability makes them a practical solution when sample volume is limited or when a PQC is logistically challenging to prepare for large cohorts [2] [3].

- Long-Term Reference (LTR): A type of QC material, often a commercially available sQC, intended for use over an extended period across multiple studies or platforms within and between laboratories. LTRs are crucial for intra- and inter-laboratory harmonization, allowing for the comparison of data across different experiments and time, thereby aiding in the standardization of lipidomics data [2] [1].

Performance Comparison: PQC vs. sQC

A recent comprehensive study directly compared the performance of PQC and sQC in a targeted lipidomics workflow analyzing 701 plasma samples. The results are summarized in the table below.

Table 1: Performance Comparison of PQC and sQC in a Targeted Lipidomics Study [2] [3] [4]

| Performance Metric | Pooled QC (PQC) | Surrogate QC (sQC) | Interpretation |
|---|---|---|---|
| Analytical Repeatability | High | High | Both QC types are effective for monitoring instrumental precision and stability during data acquisition. |
| Composition | Chemically representative of the study cohort | Distinct from the study cohort | PQC's composition is inherently matched to the study, while sQC differs. |
| Lipid Species Retained Post-Pre-processing | Benchmark (retained ~4% more species than sQC) | Slightly fewer | PQC-based pre-processing retains marginally more lipid species after QC filtering. |
| Univariate Analysis Outcome | Identified a larger number of statistically significant lipids | Identified fewer significant lipids | PQC may offer higher sensitivity for discovering individual lipid biomarkers. |
| Multivariate Model Performance | Similar | Similar | Both QC strategies are equally effective for classification models and pattern recognition. |
| Primary Application | Gold standard for single-study quality assessment and pre-processing | Suitable alternative for quality assessment; ideal as a Long-Term Reference (LTR) | sQC is a viable alternative, especially for long-term data harmonization. |

Experimental Protocol: Implementing QC Strategies in a Lipidomics Workflow

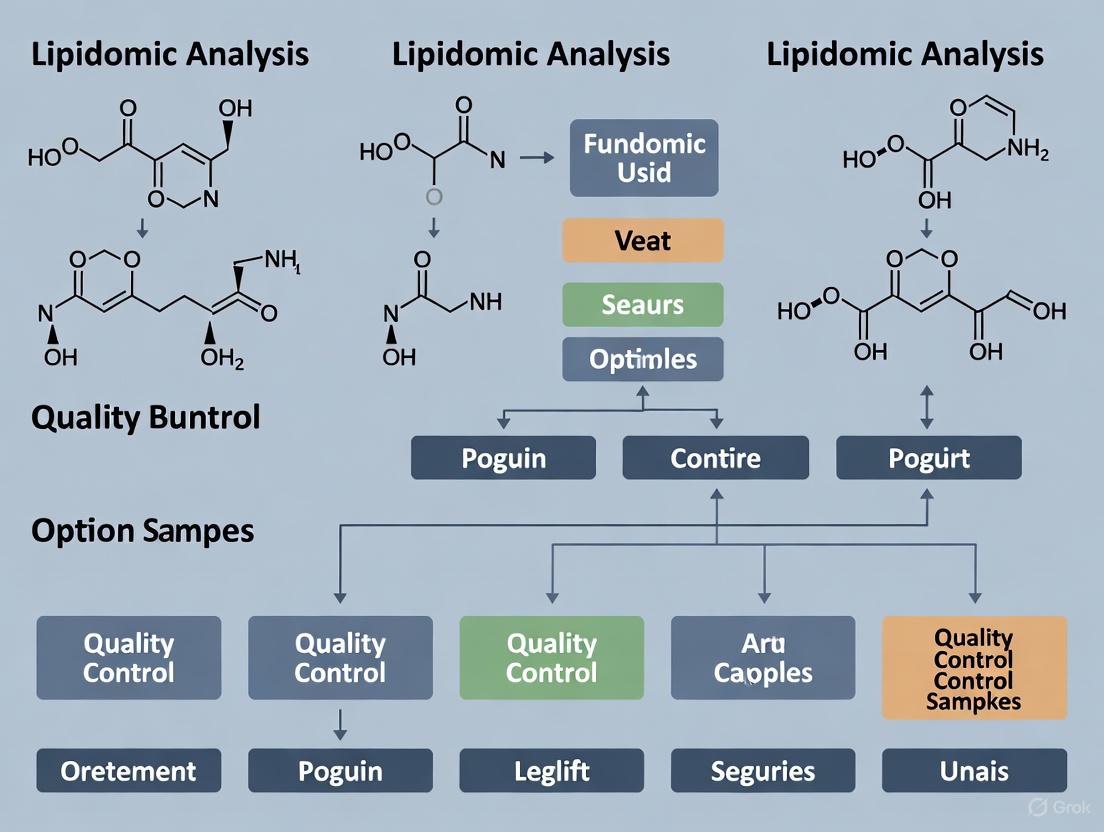

The following diagram illustrates the integration of PQC, sQC, and LTR samples within a standard lipidomics analytical sequence.

Detailed Procedural Steps

3.2.1 Project Design and Sample Collection (Timing: Days-Weeks)

A successful lipidomics study requires joint planning between clinical biologists and analytical chemists. For human studies, key physiological factors such as age, sex, body mass index (BMI), and fasting status must be matched between case and control groups to minimize bias. Ethical approval must be obtained prior to initiation. Estimate the minimum sample size required for statistical power using tools like MetaboAnalyst [5].

3.2.2 Preparation of QC Samples

- Pooled QC (PQC):

  - After all individual study samples have been processed, take a small, equal-volume aliquot (e.g., 10-20 µL) from each sample.

  - Combine all aliquots into a single container.

  - Mix the combined aliquot thoroughly by vortexing to ensure homogeneity.

  - Divide the pooled mixture into multiple low-volume aliquots in sample vials. The number of PQC aliquots should be sufficient for analysis throughout the entire acquisition sequence (typically 5-10% of total injections).

  - Store the PQC aliquots at -80°C alongside the study samples until analysis [3] [4].

- Surrogate QC (sQC) / Long-Term Reference (LTR):

  - Procure a commercial matrix-matched material, such as commercial human plasma.

  - Following the manufacturer's instructions, reconstitute the material if lyophilized.

  - Divide the bulk sQC/LTR material into multiple single-use aliquots to avoid repeated freeze-thaw cycles.

  - Store the aliquots at -80°C or as recommended by the manufacturer [2] [1].

3.2.3 Analytical Sequence Planning and Data Acquisition

- Lipid Extraction: Extract lipids from all study samples, PQC aliquots, and sQC/LTR aliquots using a standardized method, such as the MTBE or Bligh & Dyer method, with the addition of appropriate internal standards for quantification [6].

- Sequence Setup: Design the LC-MS/MS injection sequence to analyze samples in a randomized order. Critical QC injections include:

  - System Equilibration: 5-10 injections of a PQC or sQC at the beginning of the sequence to condition the column and stabilize the MS system. Data from these injections are typically discarded.

  - Blank Injection: A solvent blank to monitor carryover.

  - QC Interspersion: Inject a PQC and/or sQC/LTR sample after every 5-10 study samples throughout the entire sequence to monitor analytical performance and stability over time [7] [5].

- LC-MS/MS Analysis: Perform data acquisition using Ultra-High Performance Liquid Chromatography-Tandem Mass Spectrometry (UHPLC-MS/MS). The specific conditions (column, mobile phase, gradient, MS parameters) will depend on the targeted or untargeted assay. For example, a common setup uses a Waters ACQUITY UPLC BEH C18 column with mobile phases containing ammonium formate for positive/negative ion switching [8] [5].
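The sequence layout described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: the function name `build_sequence`, the injection labels, the fixed random seed, and the choice to place the solvent blank directly after the equilibration injections are all assumptions made for the example.

```python
import random

def build_sequence(sample_ids, qc_every=8, n_equilibration=5, seed=0):
    """Return an ordered list of (injection_type, label) tuples."""
    rng = random.Random(seed)      # fixed seed so the run order can be reproduced
    shuffled = list(sample_ids)
    rng.shuffle(shuffled)          # randomize the order of the study samples

    # Conditioning injections; their data are typically discarded.
    sequence = [("equilibration_qc", f"EQ{i + 1}") for i in range(n_equilibration)]
    sequence.append(("blank", "BLANK1"))   # assumed position of the solvent blank
    sequence.append(("qc", "QC1"))         # first QC that counts for assessment
    qc_count = 1
    for i, sample in enumerate(shuffled, start=1):
        sequence.append(("sample", sample))
        # One QC after every `qc_every` samples, and one after the last sample.
        if i % qc_every == 0 or i == len(shuffled):
            qc_count += 1
            sequence.append(("qc", f"QC{qc_count}"))
    return sequence

seq = build_sequence([f"S{i:03d}" for i in range(1, 41)], qc_every=8)
for injection_type, label in seq[:10]:
    print(injection_type, label)
```

Counting the `qc` entries in the result also gives the number of QC aliquots that must be prepared in advance for a given cohort size.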

3.2.4 Data Pre-processing and Quality Assessment

- Pre-processing: Process the raw data using specialized software (e.g., MS-DIAL, LipidSearch) for peak picking, alignment, and identification. Perform normalization using the data from either the PQC or sQC injections. Common techniques include LOESS (Locally Estimated Scatterplot Smoothing) or SERRF (Systematic Error Removal using Random Forest) to correct for signal drift [7].

- QC Criteria: Apply quality filters based on the QC data. A common metric is the relative standard deviation (RSD%) of each lipid feature measured in the QC injections. Lipid species with an RSD% exceeding a predefined threshold (e.g., 20-30%) are considered unreliable and are removed from the dataset [2] [7].
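As a rough sketch of the RSD-based filter, the snippet below computes the RSD% of each lipid across the QC injections and separates features at a chosen threshold. The lipid names, intensity values, and the helper names `rsd_percent` and `filter_by_qc_rsd` are illustrative assumptions; the 30% cut-off is simply the upper end of the range quoted above.

```python
from statistics import mean, stdev

def rsd_percent(values):
    """Relative standard deviation: sample SD divided by the mean, in percent."""
    return stdev(values) / mean(values) * 100.0

def filter_by_qc_rsd(qc_intensities, threshold=30.0):
    """Split lipid features into kept and removed according to their QC RSD%."""
    kept, removed = {}, {}
    for lipid, values in qc_intensities.items():
        rsd = rsd_percent(values)
        (kept if rsd <= threshold else removed)[lipid] = rsd
    return kept, removed

# Hypothetical peak areas of two lipids in five QC injections.
qc = {
    "PC 34:1": [1000, 1020, 980, 1010, 990],
    "TG 52:2": [500, 900, 300, 700, 400],
}
kept, removed = filter_by_qc_rsd(qc, threshold=30.0)
print("kept:", kept)
print("removed:", removed)
```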

The Scientist's Toolkit: Essential Reagents and Materials

Table 2: Key Research Reagent Solutions for Lipidomics QC

| Item | Function / Application | Examples / Notes |
|---|---|---|
| Commercial Human Plasma | Serves as a surrogate QC (sQC) and Long-Term Reference (LTR). | Commercially sourced from biological suppliers; provides a consistent, matrix-matched QC material across studies [2] [1]. |
| Internal Standard Mixture | Critical for quantitative accuracy. Corrects for extraction efficiency, ionization efficiency, and instrument variability. | A mixture of stable isotope-labeled lipid standards covering multiple lipid classes added to each sample prior to lipid extraction [6]. |
| Chromatography Column | Separation of complex lipid mixtures prior to MS detection. | e.g., Waters ACQUITY UPLC BEH C18 column (2.1 x 100 mm, 1.7 µm). Provides high-resolution separation of lipids [8] [5]. |
| Mobile Phase Additives | Enhance ionization efficiency and control adduct formation in MS. | e.g., Ammonium formate. Using 10 mM ammonium formate in the mobile phase promotes the formation of [M+H]+ and [M+HCOO]- adducts, which are standard for lipid identification [8] [9]. |
| Lipid Extraction Solvents | Isolate lipids from the biological matrix. | Methyl-tert-butyl ether (MTBE), chloroform, methanol. The MTBE method is popular for high-throughput and automation as the organic phase is on top [6]. |
| Data Processing Software | For peak detection, alignment, identification, and QC-based normalization. | MS-DIAL, LipidHunter, LipidXplorer. These tools use rule-based approaches for identification and integrate with QC-based drift correction algorithms [7] [9]. |

The selection of an appropriate QC strategy is a critical decision in lipidomics study design. While Pooled QC (PQC) samples remain the gold standard for single-study analysis due to their perfect matrix match and slightly superior performance in univariate analysis, commercially available surrogate QC (sQC) samples present a robust and practical alternative. sQCs demonstrate comparable performance in monitoring analytical variation and in multivariate analysis, and their utility extends to serving as Long-Term References (LTRs) for data harmonization across multiple studies and laboratories. By implementing the detailed protocols and workflows outlined in this application note, researchers can ensure the generation of high-quality, reproducible lipidomics data.

In mass spectrometry (MS)-based lipidomics, the ambition to comprehensively characterize the lipid complement of a biological system is coupled with significant technical hurdles. The transition from raw data to biological insight is complicated by analytical variation that can obscure true biological signals and lead to irreproducible findings. Quality Control (QC) practices are therefore non-negotiable, serving as a critical subsystem within a broader quality framework to ensure the validity, reliability, and accuracy of generated data. This is especially paramount in core facilities and drug development settings, where confidence in delivered results is essential for drawing meaningful biological conclusions [10]. Effective QC strategies isolate extrinsic measurement variance from intrinsic sample variability, providing confidence in the total workflow from sample preparation to data acquisition and initial analysis [10].

The fundamental goal of QC is to monitor, control, and mitigate sources of analytical variation throughout the experimental lifecycle. Systematic technical variance, known as batch effects, can arise from differences in reagent lots, instrument calibration, LC column performance, or different technicians. When these technical factors are confounded with the biological groups under study, they can generate false-positive discoveries [11]. Furthermore, the complex nature of biological samples introduces challenges like ion suppression, where co-eluting components alter ionization efficiency and bias quantification [12]. A robust QC system enables researchers to detect, correct for, and prevent these issues, thereby ensuring that the molecular insights derived from the lipidome are robust, reproducible, and ready for translation.

The Indispensable Role of QC Samples

Conceptual Framework: QC Levels and Their Applications

QC materials are not a one-size-fits-all solution; their composition and complexity determine the specific type of QC information they can provide. These materials can be systematically categorized into different levels to match their use cases [10].

Table 1: A Tiered Framework for QC Materials in Mass Spectrometry

| QC Level | Material Composition | Primary Use Case | Information Provided |
|---|---|---|---|
| QC1 | Known mixture of pure peptides or digest of a few proteins; can be isotopically labeled [10]. | System Suitability Testing (SST); Retention Time Calibration [10]. | Verifies instrument performance; calibrates retention times; checks LC-MS/MS system independently of experimental samples. |
| QC2 | Digest of a whole-cell lysate or biofluid (e.g., human plasma) [10]. | Process Control | Monitors the entire workflow from sample preparation to data acquisition; assesses technical variation introduced during processing. |
| QC3 | Isotopically labeled peptides (QC1) spiked into a complex whole-cell lysate or biofluid digest (QC2) [10]. | System Suitability Testing (SST) with Complex Matrix | Evaluates instrument performance in a matrix similar to experimental samples; enables monitoring of detection limits and quantitative accuracy. |
| QC4 | A suite of two or more distinct, predigested whole-cell lysates or biofluids, potentially with known ratio differences [10]. | Benchmarking Quantitative Accuracy | Assesses the accuracy and precision of label-free or isotopically labeled quantification across multiple samples and runs. |

The Pooled QC Sample: A Practical Cornerstone

A central tool in the QC strategy is the pooled QC sample, which is typically created by combining a small aliquot of every biological sample in the study. This pool represents the average composition of the entire sample set. It is then processed and—crucially—analyzed repeatedly at regular intervals throughout the instrumental run sequence [11]. This sample serves multiple critical functions [11] [10]:

- Monitoring Instrument Stability: By tracking metrics like peak intensity, retention time, and peak shape in the pooled QC over the sequence, scientists can identify and correct for instrument drift.

- Assessing Technical Precision: The coefficient of variation (CV) calculated for lipid abundances across the repeated injections of the pooled QC provides a measure of the method's technical precision. A low CV (ideally below 15-20%) indicates a stable analytical process [11].

- Data Correction: The data from pooled QC injections can be used for post-acquisition normalization to correct for systematic run-order variation.
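To make the data-correction idea concrete, the sketch below fits a smooth trend through the pooled QC intensities of one lipid as a function of injection order and rescales every injection by that trend. It uses a simplified LOESS (tricube-weighted local linear fit, no robustness iterations) written with the standard library only; the function names, the `span` default, and the simulated linear drift are assumptions for illustration, and dedicated tools may implement the correction differently.

```python
from statistics import median

def loess_point(x0, xs, ys, span=0.75):
    """Tricube-weighted local linear fit through (xs, ys), evaluated at x0."""
    k = min(max(2, round(span * len(xs))), len(xs))   # neighbours in the window
    # Half-width: distance to the k-th nearest QC, widened slightly so that
    # the nearest point always receives a non-zero weight.
    h = sorted(abs(x - x0) for x in xs)[k - 1] * 1.001 or 1.0
    w = [(1 - min(abs(x - x0) / h, 1.0) ** 3) ** 3 for x in xs]
    sw = sum(w)
    mx = sum(wi * x for wi, x in zip(w, xs)) / sw
    my = sum(wi * y for wi, y in zip(w, ys)) / sw
    sxx = sum(wi * (x - mx) ** 2 for wi, x in zip(w, xs))
    sxy = sum(wi * (x - mx) * (y - my) for wi, x, y in zip(w, xs, ys))
    slope = sxy / sxx if sxx > 0 else 0.0
    return my + slope * (x0 - mx)

def correct_drift(order, intensity, is_qc, span=0.75):
    """Divide each intensity by the QC trend and rescale to the QC median."""
    qc_x = [o for o, q in zip(order, is_qc) if q]
    qc_y = [v for v, q in zip(intensity, is_qc) if q]
    target = median(qc_y)
    return [v * target / loess_point(o, qc_x, qc_y, span)
            for o, v in zip(order, intensity)]

# Simulated run: signal falls by 2% per injection; every fourth injection is a QC.
order = list(range(13))
is_qc = [o % 4 == 0 for o in order]
intensity = [(1000.0 if q else 500.0) * (1 - 0.02 * o) for o, q in zip(order, is_qc)]
corrected = correct_drift(order, intensity, is_qc)
print([round(c, 1) for c in corrected])
```

After correction the QC injections sit on a flat line at their original median, and the study samples are rescaled by the same trend.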

Protocols for Implementing a Robust QC Strategy

Protocol 1: Experimental Design to Mitigate Batch Effects

Principle: Proactively design experiments to prevent technical factors from becoming confounded with biological factors of interest.

Procedure:

- Randomization: Do not run all samples from one biological group in a single batch. Instead, use a randomized block design where samples from all comparison groups are distributed evenly and randomly across all processing and analytical batches [11].

- Pooled QC Placement: Prepare a pooled QC sample from all experimental samples. Inject this pooled QC at the beginning of the run sequence to condition the system, and then repeatedly every 5-10 experimental samples throughout the entire sequence [11] [12].

- Balanced Labeling: When using isobaric labeling, ensure that samples from all biological groups are represented within each plex to avoid confounding batch effects with biology [11].
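One simple way to realize the randomized block design is to shuffle the samples within each biological group and then deal them out across the batches in turn. The sketch below does this with the standard library; `assign_batches`, the sample identifiers, and the two-group layout are hypothetical and only meant to show the principle.

```python
import random
from collections import defaultdict

def assign_batches(sample_groups, n_batches, seed=0):
    """Spread the samples of every group evenly and randomly over the batches."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for sample, group in sample_groups.items():
        by_group[group].append(sample)

    batches = [[] for _ in range(n_batches)]
    offset = 0
    for group in sorted(by_group):
        members = by_group[group]
        rng.shuffle(members)                 # random order within the group
        for i, sample in enumerate(members):
            batches[(i + offset) % n_batches].append(sample)
        offset += len(members)               # stagger so leftovers do not pile up in batch 0
    for batch in batches:
        rng.shuffle(batch)                   # randomize the run order inside each batch
    return batches

samples = {f"case_{i}": "case" for i in range(12)}
samples.update({f"ctrl_{i}": "control" for i in range(12)})
batches = assign_batches(samples, n_batches=3)
for number, batch in enumerate(batches, start=1):
    n_case = sum(s.startswith("case") for s in batch)
    print(f"batch {number}: {n_case} cases, {len(batch) - n_case} controls")
```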

Protocol 2: Monitoring and Mitigating Matrix Effects

Principle: Matrix effects, caused by co-eluting components that suppress or enhance ionization, must be evaluated and minimized to ensure accurate quantification [12].

Procedure:

- Post-Column Infusion (Mapping): Continuously infuse a standard analyte into the LC effluent post-column while injecting a prepared matrix sample. The resulting chromatogram will reveal regions of ion suppression or enhancement, allowing for chromatographic optimization to avoid these "suppression windows" [12].

- Standard Addition with SIL-IS: Use class-matched stable isotope-labeled internal standards (SIL-IS). Prepare calibration curves using the standard addition method, where known amounts of analyte are spiked into the matrix. This accounts for matrix-specific impacts on ionization and provides a more accurate quantification [12].

- Sample Cleanup and Dilution: Employ techniques like solid-phase extraction to remove phospholipids and other interferents. If sensitivity allows, dilute the final sample extract to reduce the overall matrix load entering the ion source [12].
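The arithmetic behind standard addition can be illustrated briefly. A straight line is fitted to the response (for example, the analyte-to-SIL-IS peak-area ratio) against the spiked amount, and the endogenous concentration is read from the magnitude of the x-intercept, that is, intercept divided by slope. The function name and the numbers below are made up for the example, and the sketch assumes a linear response over the spiked range.

```python
def standard_addition(added, response):
    """Ordinary least-squares line; endogenous concentration = intercept / slope."""
    n = len(added)
    mean_x = sum(added) / n
    mean_y = sum(response) / n
    sxx = sum((x - mean_x) ** 2 for x in added)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(added, response))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept / slope, slope, intercept

# Hypothetical spike levels (same unit as the result) and measured responses.
added = [0.0, 1.0, 2.0, 4.0]
response = [500.0, 700.0, 900.0, 1300.0]
conc, slope, intercept = standard_addition(added, response)
print(f"estimated endogenous concentration: {conc:.2f}")
```

Because the calibration is built in the sample's own matrix, suppression or enhancement changes the slope but affects the spiked and endogenous analyte alike.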

Protocol 3: A Standard QC Lipidomics Workflow

The following workflow integrates QC materials and procedures into a typical lipidomics experiment. It visualizes the process from sample preparation to data acquisition, highlighting key QC steps.

Protocol 4: QC Data Assessment and Acceptance Criteria

Principle: Establish pre-defined metrics and acceptance criteria to objectively determine whether an analytical run is valid.

Procedure:

- Track Key Parameters: For the pooled QC injections, monitor:

  - Retention Time Shift: The CV for the retention time of key lipids should be very low (e.g., < 0.5%) [11].

  - Peak Intensity and Area: The intensity and integrated peak area for identified lipids should be stable. The CV should ideally be maintained below 15-20% [11].

  - Peak Shape: Monitor peak width and symmetry to detect chromatographic issues.

  - Missing Data: The number of lipids identified across all pooled QC injections should be consistent, with a low rate of missing values.

- Visualization: Use principal component analysis (PCA) to plot all QC samples. They should cluster tightly together, indicating technical stability. Any outlier QC injections signal potential problems.

- Acceptance Criteria: Define thresholds for the above parameters (e.g., CV < 15%, tight PCA clustering). If a batch fails these criteria, investigate instrumental or methodological issues and consider re-running the samples.
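A run-acceptance check along these lines might look as follows. The retention-time and area limits mirror the example thresholds above; the 10% missing-value limit, the function name `assess_run`, and the data are assumptions introduced only for this sketch.

```python
from statistics import mean, stdev

def cv_percent(values):
    """Coefficient of variation in percent (sample SD / mean x 100)."""
    return stdev(values) / mean(values) * 100.0

def assess_run(qc_rt, qc_area, max_rt_cv=0.5, max_area_cv=15.0, max_missing=0.10):
    """Return (run_passed, per-lipid pass/fail) from pooled-QC injections.

    Missing measurements are encoded as None.
    """
    report = {}
    for lipid, areas in qc_area.items():
        present = [a for a in areas if a is not None]
        rts = [r for r in qc_rt[lipid] if r is not None]
        missing = 1 - len(present) / len(areas)
        report[lipid] = (
            missing <= max_missing
            and len(present) >= 2 and len(rts) >= 2
            and cv_percent(present) <= max_area_cv
            and cv_percent(rts) <= max_rt_cv
        )
    return all(report.values()), report

# Hypothetical retention times (min) and peak areas from four QC injections.
qc_rt = {"PC 34:1": [5.20, 5.21, 5.20, 5.19], "LPC 18:0": [2.10, 2.11, 2.10, 2.12]}
qc_area = {"PC 34:1": [1000, 1040, 980, 1010], "LPC 18:0": [300, None, 310, None]}
passed, report = assess_run(qc_rt, qc_area)
print("run passed:", passed, report)
```

Whether a single failing lipid should invalidate a whole batch is a policy decision; here the strictest reading is used purely for illustration.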

The Scientist's Toolkit: Essential QC Reagents and Materials

Successful implementation of a QC strategy requires specific materials. The table below details key reagents and their functions.

Table 2: Essential Research Reagent Solutions for QC in Lipidomics

| Reagent/Material | Function & Application | Example |
|---|---|---|
| Retention Time Calibration Mix (QC1) | Provides a set of known analytes to calibrate and monitor retention time stability across runs, correcting for chromatographic drift [10]. | Pierce Peptide Retention Time Calibration (PRTC) Mixture [10]. |
| Complex Reference Matrices (QC2) | A well-characterized, complex digest used as a process control to monitor the entire workflow's performance and technical precision over time [10]. | Yeast or E. coli whole-cell lysate digest; commercially available human plasma digests. |
| Labeled Internal Standard Mix (QC1/QC3) | A mixture of stable isotope-labeled lipids added to every sample to correct for matrix effects, monitor extraction efficiency, and enable accurate quantification [12]. | Class-specific SIL-IS (e.g., labeled phosphatidylcholines, triglycerides); commercial lipidomics internal standard kits. |
| System Suitability Test Mix (QC3) | A complex material containing labeled standards spiked into a background matrix, used to verify instrument performance meets sensitivity and quantitative specifications before sample analysis [10]. | Commercially available MS Qual/Quant QC Mixes [10]. |
| Quality Control Software | Software tools designed to automate the tracking of QC metrics, visualize instrument performance over time, and flag out-of-tolerance batches. | Vendor-specific software (e.g., Thermo Scientific QCs), open-source packages integrated with xcms or Progenesis QI. |

In modern mass spectrometry, particularly in the high-stakes fields of lipidomics and drug development, quality control is an integral component of the scientific method, not an optional add-on. The implementation of a rigorous, tiered QC strategy—encompassing a conceptual framework, practical protocols, and essential reagents—is fundamental to generating credible and reproducible data. By systematically using QC samples, proactively designing experiments to mitigate batch effects, and continuously monitoring performance against strict criteria, researchers can confidently separate analytical noise from biological signal. This diligence ensures that conclusions are built upon a foundation of reliable data, ultimately accelerating and de-risking the path from discovery to clinical application.

Lipidomics, the large-scale study of lipid pathways and networks, is crucial for understanding cellular mechanisms in health and disease. However, the accuracy and biological relevance of its findings are highly dependent on robust quality control (QC) procedures. Technical variations arising from instrument instability and batch effects can compromise data integrity, leading to both false positives and false negatives. This document outlines the core objectives and practical protocols for monitoring instrument stability, correcting for batch effects, and ensuring overall data quality within a lipidomics workflow. Implementing these QC measures is essential for generating reliable, reproducible data that can confidently inform drug development and other scientific research.

The Lipidomics Quality Control Scoring System

To standardize the reporting and quality assessment of lipidomics data, a formal scoring system has been proposed. This system abstracts complex structural information into a numerical score, providing researchers with an immediate, intuitive understanding of data quality [13].

The table below summarizes the layers of analytical information considered in the scoring scheme and their contribution to the overall quality score.

Table 1: Lipidomics Data Quality Scoring Framework

| Analytical Layer | Key Parameters Assessed | Points Awarded For | Importance for Annotation Level |
|---|---|---|---|
| Mass Spectrometry (MS) | Accurate mass, isotopic pattern, MS/MS fragmentation | Characteristic head group fragments, diagnostic neutral losses, fatty acyl fragments [9]. | Distinguishes lipid class; essential for species-level ID. |
| Chromatography | Retention Time (RT) | Adherence to class-specific retention patterns (e.g., Equivalent Carbon Number model) [9]. | Confirms identity and reduces false positives from isobaric lipids. |
| Ion Mobility | Collision Cross Section (CCS) | Match to validated CCS libraries or standards. | Provides an orthogonal identifier for increased confidence. |

The merit of this scoring system is its ability to provide a granular assessment of data quality that is integrated with the official lipid shorthand nomenclature. It encourages best practices by rewarding data that includes multiple, orthogonal lines of evidence for lipid identification. For example, an annotation based solely on accurate mass would score low, while one supported by accurate mass, a validated retention time, and a characteristic MS/MS spectrum with a head group fragment would achieve a high score. This system can serve as an invaluable tool for internal quality control and for peer review of lipidomics data [13].
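The additive logic of such a score can be shown with a toy example. The point values below are hypothetical placeholders chosen only to demonstrate how orthogonal evidence accumulates; they are not the values of the published scoring scheme [13], and the evidence names are likewise invented for this sketch.

```python
# Hypothetical point values, for illustration only (not the published scheme).
EVIDENCE_POINTS = {
    "accurate_mass": 1,
    "head_group_fragment": 2,
    "fatty_acyl_fragments": 2,
    "retention_time_validated": 2,
    "ccs_match": 2,
}

def annotation_score(evidence):
    """Sum the points of each distinct line of evidence supporting an annotation."""
    unknown = set(evidence) - set(EVIDENCE_POINTS)
    if unknown:
        raise ValueError(f"unknown evidence types: {sorted(unknown)}")
    return sum(EVIDENCE_POINTS[e] for e in set(evidence))

mass_only = annotation_score(["accurate_mass"])
well_supported = annotation_score(
    ["accurate_mass", "retention_time_validated", "head_group_fragment"]
)
print(mass_only, well_supported)
```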

Monitoring and Correcting for Batch Effects

Batch effects are unwanted technical variations introduced when samples are processed or analyzed in separate groups (batches) over time. These effects are a major threat to the reproducibility of large-scale lipidomics studies.

The Impact of Batch Effects

In mass spectrometry-based omics, batch effects can originate from multiple sources, including:

- Variations in reagent lots.

- Instrument performance drift over time.

- Differences between operators or laboratory environments [14].

If left uncorrected, these technical variations can be confounded with biological factors of interest, leading to spurious findings and hindering the integration of datasets from different studies.

Strategies for Batch-Effect Correction

The stage at which batch-effect correction should be applied has been debated in MS-based proteomics, where the options are the precursor, peptide, or protein level. Recent comprehensive benchmarking studies using reference materials have provided critical insights: leveraging real-world multi-batch data and simulated datasets, researchers compared correction at each of these levels in combination with various quantification methods and algorithms [14].

Table 2: Comparison of Batch-Effect Correction (BEC) Strategies and Algorithms

| BEC Strategy | Description | Recommended Algorithms | Key Findings from Benchmarking |
|---|---|---|---|
| Protein-Level Correction | BEC is performed on the final, aggregated protein-level data matrix. | ComBat, Ratio, Harmony, WaveICA2.0 | Demonstrated to be the most robust strategy, enhancing data integration in large cohort studies [14]. |
| Peptide-Level Correction | BEC is applied to the peptide-level data before protein quantification. | ComBat, RUV-III-C | Performance can be variable and interacts with the protein quantification method used. |
| Precursor-Level Correction | BEC is applied to the rawest, precursor-level data. | NormAE | Less robust compared to protein-level correction. |
| Algorithm Performance | - | Harmony | In single-cell RNA-seq analyses, Harmony was the only method that consistently performed well without introducing measurable artifacts [15]. Other methods like MNN, scVI, and LIGER often altered the data considerably. |

The findings indicate that batch-effect correction at the protein level is the most robust strategy for MS-based proteomics, and this principle is highly applicable to lipidomics. Aggregating lower-level data (e.g., precursor intensities) into higher-level features (e.g., lipid species) appears to mitigate some technical noise, which makes correction at the aggregated level more stable. Furthermore, the Ratio method, which scales intensities based on concurrently profiled reference samples, has been shown to be particularly effective when batch effects are confounded with biological groups [14].
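The core of a ratio-based correction is easy to sketch: within each batch, every sample intensity of a feature is divided by the mean intensity of the reference material measured in that same batch. The data layout and function name below are assumptions for illustration, and the formulation benchmarked in [14] may differ in detail (for example, by working on a log scale).

```python
from statistics import mean

def ratio_correct(batches):
    """Scale each sample by the mean reference intensity of its own batch.

    `batches` maps a batch name to {"reference": [...], "samples": {id: value}}
    for a single lipid feature.
    """
    corrected = {}
    for batch in batches.values():
        reference_level = mean(batch["reference"])
        for sample_id, value in batch["samples"].items():
            corrected[sample_id] = value / reference_level
    return corrected

# Hypothetical feature: batch B responds twice as strongly as batch A.
data = {
    "A": {"reference": [1000.0, 1000.0], "samples": {"S1": 500.0, "S2": 750.0}},
    "B": {"reference": [2000.0, 2000.0], "samples": {"S3": 1000.0, "S4": 1500.0}},
}
ratios = ratio_correct(data)
print(ratios)
```

Because the scaling relies only on the reference samples, it does not need the biological groups to be balanced across batches, which is consistent with its usefulness in confounded designs.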

Figure 1: Workflow for evaluating batch-effect correction strategies. Benchmarking studies suggest protein-level correction is the most robust approach [14].

Presentation of Quantitative QC Data

Effective presentation of quantitative data is vital for quickly assessing QC metrics and communicating them to collaborators or in publications.

Frequency Tables and Histograms

For quantitative QC variables, such as a specific lipid's intensity across hundreds of QC samples, building a frequency table is the first step before interpretation. The data are divided into class intervals of equal width. Between 6 and 16 classes usually works well, and the table must have a clear title, headings, and units [16] [17].

A histogram provides a pictorial representation of this frequency distribution. It consists of a series of contiguous rectangular bars, where the width of each bar represents the class interval of the quantitative variable (e.g., intensity value) and the height represents the frequency of observations within that interval. Because the intervals are of equal width, the area of each bar is proportional to the frequency, making the histogram well suited to visualizing the distribution of QC metrics [16] [17].
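A frequency table with equal-width class intervals can be built with a few lines of standard-library Python. The sketch below also reports each interval's midpoint, which is the x-position needed for a frequency polygon; the function name and the intensity values are illustrative assumptions.

```python
def frequency_table(values, n_classes=8):
    """Count observations in `n_classes` equal-width class intervals."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_classes
    if width == 0:
        raise ValueError("all values are identical; no class intervals to build")
    counts = [0] * n_classes
    for v in values:
        # The maximum value falls on the upper edge, so place it in the last class.
        idx = min(int((v - lo) / width), n_classes - 1)
        counts[idx] += 1
    return [
        {"lower": lo + i * width, "upper": lo + (i + 1) * width,
         "midpoint": lo + (i + 0.5) * width, "count": c}
        for i, c in enumerate(counts)
    ]

# Hypothetical intensities (arbitrary units) of one lipid in twelve QC injections.
intensities = [100, 104, 111, 118, 125, 127, 133, 139, 142, 148, 155, 160]
table = frequency_table(intensities, n_classes=6)
for row in table:
    print(f"{row['lower']:.0f}-{row['upper']:.0f}: {'#' * row['count']}")
```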

Frequency Polygons for Comparative Analysis

A frequency polygon is an alternative representation that starts like a histogram. Instead of drawing bars, a point is placed at the midpoint of each interval at a height equal to the frequency, and these points are connected with straight lines. This graph type is particularly useful for comparing the distribution of two or more sets of data on the same diagram, such as comparing the intensity distribution of a lipid in case versus control samples, or comparing data from different instrument batches [17].

Experimental Protocols for Lipidomics QC

Protocol: Quality Control Sample Preparation and Analysis

Objective: To monitor instrument stability and performance throughout a lipidomics sequence.

- QC Pool Preparation: Combine equal aliquots from all study samples to create a homogeneous QC pool.

- Sample Sequence Design: Inject the QC pool sample repeatedly:

  - At the beginning of the sequence to condition the system.

  - At regular intervals throughout the sequence (e.g., every 5-10 analytical samples).

  - At the end of the sequence.

- Data Acquisition: Acquire data for all QC samples using the same method as the analytical samples.

- Data Analysis:

  - Stability: Extract the retention time and peak area for key lipids across all QC injections. Plot these values in a line graph to visualize drift.

  - Precision: Calculate the coefficient of variation (CV) for the peak areas of lipids in the QC samples. A CV of <15-20% is generally acceptable, depending on the platform and lipid abundance.

Protocol: Validating Lipid Identifications

Objective: To minimize false-positive lipid annotations [9].

- MS/MS Spectral Match: Ensure MS/MS spectra contain characteristic fragments for the lipid class (e.g., m/z 184.07 for phosphocholine-containing lipids in positive mode) [9].

- Retention Time Validation: Compare the retention time of the identified lipid to the expected elution behavior for its class (e.g., using the Equivalent Carbon Number model). Lipids that do not follow the predicted retention pattern should be flagged for re-inspection [9].

- Use of Multiple Adducts: Where possible, confirm identifications by detecting more than one adduct ion (e.g., [M+H]+ and [M+Na]+ for lipids in positive ion mode).

- Comparison to Standards: The gold standard for identification is to correlate the retention time and fragmentation pattern of the putative lipid with an authentic analytical standard run under identical conditions [9].
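Two of these checks lend themselves to a short sketch: testing an MS/MS peak list for the phosphocholine head-group fragment within a ppm tolerance, and computing the equivalent carbon number (taken here as total acyl carbons minus twice the number of double bonds) that underlies retention-order checks. The 10 ppm tolerance, the 5% relative-intensity floor, the function names, and the example spectra are assumptions; the fragment m/z is given to four decimals (184.0733).

```python
PC_HEAD_GROUP_MZ = 184.0733  # phosphocholine fragment observed in positive mode

def has_fragment(peaks, target_mz, tol_ppm=10.0, min_rel_intensity=0.05):
    """True if a peak within `tol_ppm` of `target_mz` reaches the intensity floor.

    `peaks` is a list of (m/z, intensity) pairs from one MS/MS spectrum.
    """
    base_peak = max(intensity for _, intensity in peaks)
    for mz, intensity in peaks:
        ppm_error = abs(mz - target_mz) / target_mz * 1e6
        if ppm_error <= tol_ppm and intensity / base_peak >= min_rel_intensity:
            return True
    return False

def equivalent_carbon_number(carbons, double_bonds):
    """ECN = total acyl carbons - 2 x double bonds."""
    return carbons - 2 * double_bonds

# Hypothetical spectra: one with the diagnostic fragment, one without.
good_spectrum = [(184.0731, 1000.0), (496.3400, 200.0)]
bad_spectrum = [(184.1000, 1000.0), (496.3400, 200.0)]
print(has_fragment(good_spectrum, PC_HEAD_GROUP_MZ))
print(has_fragment(bad_spectrum, PC_HEAD_GROUP_MZ))
print(equivalent_carbon_number(34, 1))
```

Within a lipid class, species with a higher ECN are expected to elute later on reversed-phase columns, so an annotation whose retention time breaks that order is a candidate for re-inspection.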

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Reagents and Materials for Lipidomics Quality Control

| Item | Function / Application | Example / Key Feature |

|---|---|---|

| Universal Reference Materials | Monitors and corrects for batch effects across multiple labs or runs. | Used in ratio-based correction; e.g., Quartet project reference materials [14]. |

| Internal Standards (IS) | Corrects for variability in extraction, ionization efficiency, and instrument response. | Stable isotope-labeled lipids for each major lipid class. |

| Quality Control (QC) Pool | Assesses instrument stability and analytical performance over the sequence. | Pooled aliquot of all study samples; analyzed intermittently. |

| Authentic Lipid Standards | Validates lipid identifications by confirming retention time and MS/MS spectrum. | Commercially available pure lipid standards. |

| MACS Tissue Storage Solution | Preserves tissue integrity for consistent lipidomic analysis from biological samples. | Used in human orbital adipose tissue studies [18]. |


Workflow Visualization for Data Quality Assessment

The following diagram summarizes the logical workflow for integrating these QC measures into a standard lipidomics pipeline, from sample preparation to data reporting.

Figure 2: Integrated lipidomics workflow with iterative quality checkpoints. The process is cyclical, allowing for re-analysis if QC metrics are not met.

The Impact of Poor QC on Lipid Identification and Quantification

Lipidomics, the large-scale study of lipid pathways and networks in biological systems, provides crucial insights into health, disease, and therapeutic development [19]. The accuracy of these studies, however, is fundamentally dependent on the quality control (QC) measures implemented throughout the analytical workflow. Poor QC directly compromises the reliability of lipid identification and quantification, leading to erroneous biological interpretations, irreproducible research, and flawed conclusions in downstream applications such as drug development and biomarker discovery.

This application note details the major risks associated with inadequate QC practices in lipidomics, provides validated protocols to mitigate these risks, and establishes a robust QC framework to ensure data integrity.

Consequences of Inadequate Lipid Quality Control

Failures in lipid QC can introduce errors at multiple levels, from initial lipid extraction to final data annotation. The following table summarizes the primary consequences and their impacts on lipidomic data.

Table 1: Major Consequences of Poor Quality Control in Lipidomics

| QC Failure Point | Impact on Lipid Identification & Quantification | Downstream Effect |

|---|---|---|

| Unchecked Raw Material Purity | Introduction of reactive impurity species (e.g., peroxides, aldehydes) that form adducts with target analytes [20]. | Loss of biological activity; e.g., mRNA inactivation in lipid nanoparticles (LNPs) due to lipid-mRNA adduction, independent of mRNA integrity [20]. |

| Inadequate Chromatographic Validation | Misidentification of isobaric and isomeric lipids that co-elute or exhibit anomalous retention behavior [9]. | High false-positive identification rates; e.g., reports of impossible lipid isomers or structures that violate biosynthetic principles [9]. |

| Insufficient MS/MS Spectral Validation | Reliance on software-assisted annotation without manual verification of characteristic fragments and head groups [9]. | Incorrect structural assignment; failure to distinguish lipid classes (e.g., PC vs. SM) due to missing diagnostic fragments [9]. |

| Poor Control During Sample Preparation | Inconsistent lipid extraction efficiency and activation of endogenous lipases that modify the native lipid profile [19]. | Corrupted lipid profiles that do not reflect the in vivo state, reducing data accuracy and reproducibility [19]. |

Case Study: Reactive Impurities in Lipid Nanoparticles

A critical example comes from LNP-based therapeutic development. Ionizable lipids with unsaturated tails can contain peroxide degradants that convert to reactive aldehydes during storage. These aldehydes form adducts with encapsulated mRNA, which was observed as a distinct late-eluting peak in Ion-Pairing Reverse-Phase Chromatography (IP-RP) analysis [20]. The critical finding was that this adduction caused a significant decrease in protein expression efficiency in vitro without degrading the physical integrity of the mRNA, a potency loss that would be missed by standard purity assays [20]. This underscores that stringent QC of raw lipid materials is essential for maintaining the biological efficacy of the final product.

Essential QC Procedures for Robust Lipidomics

Protocol 1: QC for Lipid Raw Materials and Formulations

This protocol is designed to detect and quantify reactive impurities in lipids, crucial for ensuring the stability and performance of lipid-based formulations like LNPs.

Materials & Reagents:

- Lipid raw material (e.g., ionizable lipid for LNP formulation)

- Internal standards (e.g., SPLASH LIPIDOMIX Mass Spec Standard)

- LC-MS grade solvents: Methanol, Isopropanol, Methyl tert-butyl ether (MTBE)

- Liquid Chromatography-Mass Spectrometry (LC-MS) system

Procedure:

- Sample Preparation: Dissolve the lipid raw material in a suitable solvent (e.g., isopropanol) to a predetermined concentration.

- LC-MS Analysis:

  - Chromatography: Use Reversed-Phase Liquid Chromatography with a C18 column. Employ a gradient elution with mobile phase A (water with 10 mM ammonium formate) and mobile phase B (isopropanol:acetonitrile 9:1 with 10 mM ammonium formate).

  - Mass Spectrometry: Perform full MS and MS/MS scans in both positive and negative electrospray ionization (ESI) modes.

- Data Analysis:

  - Interrogate the Total Ion Chromatogram (TIC) and Extracted Ion Chromatograms (XIC) for oxidative degradants.

  - Specifically monitor for species with characteristic mass shifts (e.g., +16 Da for monoperoxides, +32 Da for diperoxides) [20].

  - Correlate the abundance of these species with functional performance tests (e.g., mRNA activity assays in LNPs).
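The mass-shift monitoring step above can be sketched as a small target-list generator for extracted ion chromatograms. This is an illustration under stated assumptions: it treats each nominal +16 Da step as the addition of one oxygen atom (monoisotopic mass 15.994915 Da), assumes singly charged ions, and uses a hypothetical parent m/z, peak list, and 0.01 Da tolerance.

```python
OXYGEN_MASS = 15.994915  # monoisotopic mass of one oxygen atom (Da)

def oxidation_targets(parent_mz, max_oxygens=2):
    """Expected m/z values for the parent ion plus 1..max_oxygens oxygen atoms."""
    return {f"+{n}O": parent_mz + n * OXYGEN_MASS
            for n in range(1, max_oxygens + 1)}

def match_peaks(observed_mz, targets, tol_da=0.01):
    """Return (observed m/z, label) pairs that fall within tol_da of a target."""
    hits = []
    for mz in observed_mz:
        for label, target in targets.items():
            if abs(mz - target) <= tol_da:
                hits.append((mz, label))
    return hits

# Hypothetical [M+H]+ of an ionizable lipid and a hypothetical peak list.
targets = oxidation_targets(642.6000)
hits = match_peaks([642.6001, 658.5950, 674.5899, 700.1000], targets)

print(hits)
```

Matched species are only candidates for oxidative degradants; their assignment would still need MS/MS confirmation and correlation with the functional assays described in the last step.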

Protocol 2: Comprehensive Lipidomic Profiling with Integrated QC

This protocol outlines a high-throughput lipidomics workflow with embedded QC checks for tissue and serum samples.

Materials & Reagents:

- Tissue or serum samples

- Homogenization buffer: 6 M guanidinium chloride, 1.5 M thiourea (GCTU) [19]

- Extraction solvent: Dichloromethane:Methanol:Triethylammonium chloride (3:1:0.0005) (DMT) [19]

- Internal standard mixture in methanol [19]

- LC-MS, DI-MS, and ³¹P NMR systems

Procedure:

- Sample Homogenization:

  - For tissues, homogenize using a mechanical homogenizer in GCTU buffer to inactivate lipases. Fibrous tissues may benefit from a freeze-thaw cycle prior to homogenization [19].

- Lipid Extraction:

  - Combine homogenate, internal standards, water, and DMT solvent.

  - Agitate vigorously, centrifuge, and transfer the organic (lower) phase.

  - For phospholipid profiling from triglyceride-rich tissues, wash a portion of the organic phase with hexane to concentrate the phospholipid fraction [19].

- Multi-Modal Lipid Analysis:

  - Direct Infusion MS (DI-MS): Provides a rapid lipid profile.

  - Liquid Chromatography-MS (LC-MS): Enables separation of isobaric lipids.

  - ³¹P NMR: Allows for absolute quantification of phospholipid classes.

- Data Processing & QC Validation:

  - Retention Time Validation: Plot the retention time of identified lipids against their equivalent carbon number (ECN). Discard identifications that significantly deviate from the predicted ECN model for the chromatographic setup [9].

  - Spectral Validation: Manually inspect MS/MS spectra for the presence of characteristic, class-specific fragment ions and neutral losses (e.g., m/z 184.07 for phosphocholine-containing lipids in positive mode) [9].

  - Adduct Consistency: Confirm that detected adducts align with the mobile phase composition (e.g., formate adducts in formate-containing buffers).
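The retention time validation step above can be sketched as a simple within-class regression. The sketch makes several assumptions that are not taken from the cited protocol: ECN is computed with the common definition (total acyl carbons minus twice the number of double bonds), the retention time is modelled as linear in ECN, and the reference lipids, retention times, and 0.5 min tolerance are invented for illustration.

```python
def ecn(carbons, double_bonds):
    """Equivalent carbon number (assumed definition: C - 2 x DB)."""
    return carbons - 2 * double_bonds

def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def flag_outliers(annotations, slope, intercept, tol_min=0.5):
    """Names of annotations whose RT deviates from the model by more than tol_min."""
    flagged = []
    for name, carbons, double_bonds, rt in annotations:
        predicted = slope * ecn(carbons, double_bonds) + intercept
        if abs(rt - predicted) > tol_min:
            flagged.append(name)
    return flagged

# Hypothetical reference PCs (name, carbons, double bonds, RT in min).
reference = [("PC 30:0", 30, 0, 8.0), ("PC 32:0", 32, 0, 10.0),
             ("PC 34:0", 34, 0, 12.0), ("PC 36:0", 36, 0, 14.0)]
slope, intercept = fit_line([ecn(c, d) for _, c, d, _ in reference],
                            [rt for _, _, _, rt in reference])

# Hypothetical annotations to be checked against the fitted model.
candidates = [("PC 34:1", 34, 1, 10.2), ("PC 36:2", 36, 2, 13.5)]
flagged = flag_outliers(candidates, slope, intercept)

print(flagged)
```

Real retention behaviour is often not strictly linear across a wide ECN range, so a per-class fit over a limited range, or a non-linear model, may be more appropriate for a given chromatographic setup.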

The following diagram illustrates the logical workflow and decision points for proper lipid identification.

The Scientist's Toolkit: Essential Research Reagents

The following table lists key reagents and their critical functions in ensuring lipidomic QC.

Table 2: Essential Reagents for Lipidomics Quality Control

| Reagent / Material | Function & Role in QC |

|---|---|

| Stable Isotope-Labeled Internal Standards | Correct for extraction efficiency and matrix effects during MS analysis; enable absolute quantification [19]. |

| Guanidine/Thiourea Buffer (GCTU) | Inactivate endogenous lipases ex vivo during tissue homogenization, preventing artifactual degradation of the lipid profile [19]. |

| Authentic Chemical Standards | Validate retention times, calibrate the ECN model, and confirm MS/MS fragmentation patterns for confident lipid identification [9]. |

| LC-MS Grade Solvents with Additives | Ensure reproducible chromatography and predictable formation of molecular adducts (e.g., [M+H]⁺, [M+FA-H]⁻) during ESI-MS [9]. |

| Standard Reference Materials | Quality control samples used to monitor instrument performance and analytical reproducibility across multiple batches [19]. |


Rigorous quality control is the foundation of reliable lipidomics. As demonstrated, failures in QC, whether of raw materials, during sample processing, or in data annotation, propagate errors that invalidate biological conclusions and jeopardize downstream applications like drug development. The protocols and frameworks provided herein, emphasizing multi-adduct detection, retention time validation, and manual spectral verification, offer an actionable path to robust, reproducible, and high-fidelity lipid identification and quantification. Integrating these practices is indispensable for any serious lipidomics research program.

Building a Robust QC Framework: Practical Integration into the Analysis Sequence

Mass spectrometry-based lipidomics has become an indispensable tool for understanding the mechanisms of lipid dysfunction in cardiometabolic diseases, obesity, and for evaluating responses to nutritional interventions [21] [22]. In large-scale cohort studies and clinical trials, sample analysis is inevitably performed across multiple batches and extended timeframes, making advanced quality control (QC) strategies essential for generating accurate and biologically meaningful data [21] [23]. Effective QC protocols must compensate for batch-to-batch and interday analytical variation, which can otherwise obscure true biological signals and introduce technical artifacts [21] [24]. This application note provides a detailed framework for designing a robust QC injection sequence, leveraging insights from recent large-scale lipidomic studies to ensure data integrity throughout long-term analytical projects.

Essential QC Samples and Preparation

Types of Quality Control Samples

A comprehensive QC strategy incorporates several types of reference materials, each serving a distinct purpose in monitoring and correcting analytical performance.

Table 1: Types of Quality Control Samples for Lipidomic Sequencing

| QC Sample Type | Composition | Primary Function | Frequency of Use |

|---|---|---|---|

| Pooled QC | Equal-volume aliquot from all study samples [21] [25] | Monitor system stability, correct for instrumental drift, assess technical precision [21] [23] | Every 5-10 experimental samples [23] |

| Process Blank | Solvents only, processed alongside biological samples [25] | Detect background contamination from solvents, tubes, or sample preparation | Each extraction batch |

| Reference Plasma | Commercially available pooled human plasma (e.g., NIST SRM 1950) [26] | Cross-batch alignment, inter-laboratory comparability, long-term performance tracking | Each analytical batch [27] |

| Internal Standard Mix | Stable isotope-labeled lipid standards added to every sample [21] [27] | Correct for matrix effects, extraction efficiency, and ionization variability [24] | Every sample |

Preparation of a Pooled QC Sample

The pooled QC sample is the cornerstone of sequence normalization. The following protocol, adapted from large-cohort studies, ensures a representative QC material [21] [25].

- Aliquoting: After the final resuspension step of sample preparation, take a 5-10 µL aliquot from each individual study sample [25].

- Pooling: Combine all aliquots into a single container. Vortex thoroughly to ensure homogeneity.

- Portioning: Dispense the homogenized pool into single-use vials to avoid repeated freeze-thaw cycles.

- Storage: Store the portioned QC samples at -80°C under conditions identical to the study samples.

Internal Standard Addition

Stable isotope-labeled internal standards (LIS) are critical for compensating for matrix effects and variations in extraction efficiency. The workflow should incorporate a broad mixture of LIS covering all targeted lipid classes.

- Recommended Mixture: Use commercially available mixes like the SPLASH LipidoMIX (Avanti Polar Lipids) or the Lipidyzer Internal Standards Kit (SCIEX) [21] [25].

- Addition Protocol: Add the LIS working solution at the very beginning of the lipid extraction process, prior to protein precipitation or liquid-liquid extraction, to account for losses during preparation [21] [25]. A typical dilution is 1:200 with propan-2-ol, with a consistent volume added to every sample, blank, and QC [21].
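How a labeled internal standard compensates for preparation losses can be shown with a single-point calculation. This sketch assumes the analyte and its class-matched internal standard have the same response factor, which is a simplification; the function name, peak areas, and spiked concentration are hypothetical.

```python
def is_normalized_concentration(analyte_area, is_area, is_concentration):
    """Estimate analyte concentration from the analyte/internal-standard area ratio.

    Assumes equal response for the analyte and the internal standard.
    """
    if is_area <= 0:
        raise ValueError("internal standard peak area must be positive")
    return analyte_area / is_area * is_concentration

# Hypothetical example: the IS was spiked at 5.0 µM.
full_recovery = is_normalized_concentration(2.0e6, 1.0e6, 5.0)
# The same sample with 30% loss during extraction: both areas drop together,
# so the ratio, and therefore the estimate, is unchanged.
partial_recovery = is_normalized_concentration(1.4e6, 0.7e6, 5.0)

print(full_recovery, partial_recovery)
```

The second call illustrates why the standard has to be added before extraction: losses that affect the analyte and the standard equally cancel in the ratio.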

Designing the QC Injection Sequence

A strategically designed injection sequence is paramount for distinguishing technical variance from biological variance. The sequence must facilitate both real-time monitoring and post-acquisition data correction.

Sequence Structure and Block Design

The sequence should be organized into analytical batches of manageable size, with QC samples strategically embedded throughout.

- System Conditioning: Begin the sequence with 5-10 injections of the pooled QC. These data are not used for normalization but serve to condition the LC-MS system and are discarded from the final dataset [23].

- Randomized Block Design: Within each batch, inject study samples in a randomized order to avoid confounding analytical drift with systematic biological groups.

- QC Frequency: Insert a pooled QC sample at regular intervals, typically after every 5-10 experimental samples [23]. This frequency provides sufficient data points to model and correct for systematic drift.

- Batch Size: Limit batch sizes to a manageable number of injections (e.g., 40-50 samples per batch) to maintain analytical consistency, with each batch being a self-contained sequence including all necessary QC samples [23].

Comprehensive Sequence Workflow

The complete workflow integrates all QC elements from sample preparation to data acquisition. The following diagram and protocol detail the entire process.

Step-by-Step Protocol:

- Preparation: Extract lipids from all study samples using a standardized method (e.g., MTBE/MeOH extraction) [25]. In parallel, prepare the pooled QC, process blank, and a set of reference plasma samples.

- Internal Standardization: Add a known amount of stable isotope-labeled internal standard mixture to every sample, including all QCs and blanks, before the extraction begins [21] [25].

- Sequence Assembly: Construct the injection sequence in the mass spectrometer's software (e.g., SCIEX Analyst or Agilent MassHunter):

  - Place the process blank at the beginning (after conditioning) to check for carryover.

  - Distribute the pooled QC injections evenly throughout the sequence after every 5-10 experimental samples [23].

  - Randomize the injection order of study samples within the batch.

  - Include the commercial reference plasma (e.g., NIST) at the start and end of each batch [26].

- Data Acquisition: Run the sequence using a targeted LC-MS/MS method with polarity switching to comprehensively cover lipid classes [21].
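The sequence assembly rules above can be sketched as a small generator that produces an injection list for one batch. It is a minimal illustration: the label strings, the function name, the default of one QC every 8 samples, and the fixed random seed are all assumptions, and the output would still need to be entered into the vendor software by hand or via that software's own import format.

```python
import random

def build_sequence(sample_ids, qc_every=8, n_conditioning=5, seed=0):
    """Return an ordered list of injection labels for one analytical batch."""
    rng = random.Random(seed)      # fixed seed so the order is reproducible
    shuffled = list(sample_ids)
    rng.shuffle(shuffled)          # randomize study samples within the batch

    sequence = ["COND_QC"] * n_conditioning            # conditioning, later discarded
    sequence += ["BLANK", "REF_PLASMA", "POOLED_QC"]   # start-of-batch checks
    for i, sample in enumerate(shuffled, start=1):
        sequence.append(sample)
        # Insert a pooled QC after every qc_every samples, except after the
        # last sample, where the closing QC below takes its place.
        if i % qc_every == 0 and i != len(shuffled):
            sequence.append("POOLED_QC")
    sequence += ["POOLED_QC", "REF_PLASMA"]            # end-of-batch checks
    return sequence

samples = [f"S{i:02d}" for i in range(1, 41)]  # hypothetical 40-sample batch
sequence = build_sequence(samples, qc_every=8, n_conditioning=5, seed=42)

print(len(sequence), sequence.count("POOLED_QC"))
```

Keeping the QC positions fixed while only the study samples are shuffled means the QC injections sample the run time evenly, which is what later drift modelling relies on.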

Evaluating QC and Batch Performance

Rigorous assessment of QC data is required to validate the analytical run and proceed with data normalization.

Key Performance Metrics

The following metrics, calculated from the pooled QC samples, determine batch acceptability.

Table 2: Key Performance Metrics for QC Assessment

| Metric | Calculation | Acceptance Criterion | Rationale |

|---|---|---|---|

| Intra-batch Precision | Median CV% of all lipids in replicate QC injections within a batch [23] | < 15-20% for most lipids [27] | Measures instrumental stability and preparation repeatability in a single run |

| Inter-batch Precision | Median CV% of all lipids in QC across multiple batches [23] | < 30% for a majority of lipids [21] | Assesses long-term reproducibility and batch-to-batch consistency |

| Signal Drift | Correlation of lipid response in sequential QC injections over time | R² > 0.7 for linear drift | Identifies systematic trends requiring correction |

| Total Analyte Coverage | Number of lipid species with CV% < 30% in QC [21] | > 75% of targeted lipids [21] | Ensures quantitative robustness for most of the panel |
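The intra-batch precision and coverage criteria in Table 2 can be combined into a single batch check, sketched below. The thresholds are taken from the table (median CV below 20%, more than 75% of lipids with CV below 30%); the function names and the QC peak areas are hypothetical.

```python
from statistics import mean, median, stdev

def cv_percent(values):
    """Coefficient of variation (%) using the sample standard deviation."""
    return 100.0 * stdev(values) / mean(values)

def assess_batch(qc_areas, median_cv_limit=20.0, lipid_cv_limit=30.0,
                 min_coverage=0.75):
    """Summarize pooled-QC precision for one batch and decide acceptance."""
    cvs = {lipid: cv_percent(areas) for lipid, areas in qc_areas.items()}
    median_cv = median(cvs.values())
    # Fraction of lipids whose QC CV is below the per-lipid limit.
    coverage = sum(cv < lipid_cv_limit for cv in cvs.values()) / len(cvs)
    return {
        "median_cv": median_cv,
        "coverage": coverage,
        "accepted": median_cv < median_cv_limit and coverage > min_coverage,
    }

# Hypothetical peak areas for five lipids over four pooled-QC injections.
qc_areas = {
    "lipid_A": [100, 102, 98, 100],
    "lipid_B": [50, 55, 45, 50],
    "lipid_C": [200, 220, 180, 200],
    "lipid_D": [10, 20, 5, 25],     # deliberately noisy
    "lipid_E": [30, 33, 27, 30],
}
result = assess_batch(qc_areas)

print(result)
```

Here four of the five lipids are reproducible, so the batch passes both criteria even though one lipid would later be removed by the per-lipid filter.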

Data Normalization and Batch Correction

Upon confirming that QC metrics meet acceptance criteria, apply normalization to remove technical variance.

- Drift Correction: Use the data from the pooled QC injections to model and correct for systematic signal drift throughout the sequence. Common methods include linear regression, LOESS smoothing, or quality control-based robust spline correction (QCRSC) [23].

- Batch Integration: If multiple batches were analyzed, combine the datasets using the pooled QC samples and reference plasma data to align the batches. This can be achieved by calculating a correction factor from the median response of each lipid in the pooled QC across batches [26].

- Final Filtering: Filter the final dataset to exclude lipid species that show poor reproducibility (e.g., CV > 30%) in the pooled QC samples across the entire study [21].
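The drift correction step above can be sketched for a single lipid using the simplest of the listed methods, a linear fit through the pooled QC injections. This is an illustration only: the data layout, function names, and intensities are hypothetical, and a LOESS or spline fit would replace `fit_line` in the other approaches mentioned.

```python
from statistics import median

def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def correct_linear_drift(injections):
    """Rescale intensities so the QC trend becomes flat at the median QC level.

    injections: list of (injection_order, kind, intensity), kind is 'QC' or 'sample'.
    """
    qc = [(order, y) for order, kind, y in injections if kind == "QC"]
    slope, intercept = fit_line([o for o, _ in qc], [y for _, y in qc])
    target = median(y for _, y in qc)
    corrected = []
    for order, kind, y in injections:
        predicted_qc = slope * order + intercept   # modelled QC signal at this position
        corrected.append((order, kind, y * target / predicted_qc))
    return corrected

# Hypothetical run for one lipid: the QC signal falls by 20 units per injection.
run = [(1, "QC", 1000.0), (3, "sample", 960.0), (6, "QC", 900.0),
       (8, "sample", 430.0), (11, "QC", 800.0)]
corrected = correct_linear_drift(run)

for row in corrected:
    print(row)
```

After correction the three QC injections sit at the same level, and each study sample is rescaled by the drift estimated at its own injection position.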

The Scientist's Toolkit: Essential Reagents and Materials

Table 3: Essential Research Reagent Solutions for Lipidomics QC

| Item | Specification / Example | Critical Function |

|---|---|---|

| Labeled Internal Standards | SPLASH LIPIDOMIX (Avanti), Lipidyzer IS Kit (SCIEX) [21] [25] | Compensates for matrix effects & extraction variability; enables quantification [24] |

| Reference Plasma | NIST SRM 1950 Metabolites in Frozen Human Plasma [26] | Provides a benchmark for cross-study and cross-laboratory data alignment |

| LC-MS Grade Solvents | Optima LC/MS grade Methanol, Acetonitrile, MTBE, Water [21] [25] | Minimizes background noise and ion suppression caused by contaminants |

| Standardized LC Columns | Waters Acquity UPLC BEH C18, 1.7µm, 2.1x100mm [21] | Ensures consistent chromatographic retention times and separation |

| Quality Control Software | Skyline, In-house R/Python scripts [21] | Performs peak integration, normalization, and statistical QC analysis |


Implementing a rigorously designed QC injection sequence is not optional but fundamental for generating reliable lipidomic data in long-term cohort studies. By integrating pooled QC samples at a high frequency, using stable isotope standards comprehensively, and applying stringent performance metrics, researchers can effectively control for analytical variation. This protocol, built on methodologies proven in large-scale studies [21] [23] [27], provides a robust framework that ensures data quality, thereby enabling valid biological conclusions and strengthening the foundation for discoveries in precision medicine and nutrition.

Within the framework of a comprehensive thesis on quality control for lipidomic analysis sequences, the establishment of robust quality control (QC) samples represents a foundational pillar. In mass spectrometry-based lipidomics, technical variability arising from instrument fluctuations and sample preparation batch effects can obscure biological signals and compromise data integrity [28]. Pooled QC (PQC) samples, created by combining aliquots from all individual study samples, serve as a critical tool for monitoring analytical performance throughout a data acquisition sequence [2]. Meanwhile, surrogate QC (sQC) samples, often derived from commercial pooled plasma, provide a stable long-term reference material that can be used across multiple studies or for method validation, effectively acting as a long-term reference (LTR) [2]. This protocol details the creation and application of these essential QC materials, enabling researchers to distinguish technical artifacts from true biological phenomena in lipidomic profiling.

Protocols for Preparation of Quality Control Samples

Protocol: Creation of Pooled QC (PQC) Samples from Human Serum/Plasma

The PQC sample is a technical mixture that embodies the average lipid profile of the entire cohort, allowing for the monitoring of instrumental stability and data reproducibility during sequence runs [5] [2].

Before You Begin:

- Timing: 1–2 hours

- Biosafety: Perform all work with appropriate personal protective equipment (PPE) including laboratory coats, goggles, masks, and gloves to mitigate infection risks from blood-borne pathogens [5].

- Critical Note: Ensure all parent samples have been collected under standardized, well-documented pre-analytical conditions (e.g., consistent clotting time, fasting status) to minimize introducing bias [5].

Materials and Reagents:

- Individual human serum or plasma samples from your study cohort, stored at -80°C [5]

- Sterile pipette tips (e.g., 200 μL, 1 mL) [5]

- Sterile 1.5 mL or 2 mL microcentrifuge tubes [5]

- Vortex mixer [5]

- Refrigerated centrifuge [5]

Procedure:

- Thaw Samples: Gently thaw all individual serum or plasma samples on ice or in a refrigerator at 4°C. CRITICAL: Avoid repeated freeze-thaw cycles of individual samples, as this can degrade labile lipids and generate analytical artifacts [5] [28].

- Mix and Centrifuge: Briefly vortex each thawed sample to ensure homogeneity. Then, centrifuge all samples at 4°C for 5 minutes at approximately 10,000 g to pellet any insoluble debris or precipitates.

- Aliquot Pooling: Using a calibrated pipette, withdraw a precise, equal-volume aliquot (e.g., 10–50 μL) from each cleared individual sample and combine them into a new, sterile microcentrifuge tube. CRITICAL: The volume taken from each sample must be identical so that the PQC is representative of the entire cohort.

- Create Master Pool: Vortex the combined aliquot mixture vigorously for 1–2 minutes to ensure complete homogenization. This mixture is your PQC master stock.

- Aliquot for Use: Immediately divide the PQC master stock into small, single-use aliquots (e.g., in volumes suitable for a single lipid extraction) in labeled microcentrifuge tubes. CRITICAL: This step prevents repeated freeze-thaw cycles of the PQC itself, preserving lipid integrity [5].

- Storage: Store all PQC aliquots at -80°C until needed for lipid extraction and LC-MS analysis.

Protocol: Preparation of Surrogate QC (sQC) Samples

Surrogate QCs are valuable when the available sample volume from the study cohort is limited or for longitudinal studies requiring a stable reference material. Commercial pooled plasma is a typical source [2].

Before You Begin:

- Timing: 30 minutes

- Commercial Source: Acquire a characterized commercial pooled plasma (e.g., NIST SRM 1950) [29].

Procedure:

- Reconstitution (if lyophilized): If the commercial plasma is lyophilized, reconstitute it exactly as per the manufacturer's instructions using the specified volume of high-purity water or buffer.

- Aliquoting: Gently mix the commercial plasma by inverting the container several times. Do not vortex vigorously if the product instructions advise against it. Pipette the solution into small, single-use aliquots.

- Storage: Store the aliquots at -80°C. These sQC aliquots can be interspersed throughout multiple analytical sequences as a long-term reference to track platform stability over weeks or months [2].

The Scientist's Toolkit: Research Reagent Solutions

Table 1: Essential Reagents and Materials for QC Sample Preparation and Lipidomics Workflow

| Item | Function/Application in QC Sample Preparation | Example Sources / Specifications |

|---|---|---|

| Commercial Pooled Plasma | Serves as a ready-made, standardized surrogate QC (sQC) material for cross-study comparisons and long-term stability monitoring. | NIST SRM 1950 [29] |

| Sterile Serological Pipettes | For accurate, sterile transfer of biological fluids during the creation of master pooled QC stocks. | Various suppliers |

| Sterile Microcentrifuge Tubes | For storing single-use aliquots of PQC and sQC samples to prevent freeze-thaw degradation. | 1.5 mL or 2 mL screw-cap tubes [5] |

| Pipettes & Filtered Tips | For precise and contamination-free volumetric transfer of sample aliquots during pooling. | 200 μL, 1 mL pipettes and corresponding tips [5] |

| Vortex Mixer | To ensure complete homogenization of individual samples and the final pooled QC mixture. | [5] |

| Refrigerated Centrifuge | To pellet debris and precipitates from thawed samples before aliquot pooling, ensuring a clear QC matrix. | Capable of 4°C operation [5] |

| -80°C Freezer | For long-term storage of QC aliquots to preserve lipid stability and integrity. | [5] |


Integrated Experimental Workflow for QC in Lipidomic Sequencing

The following workflow integrates the preparation of both PQC and sQC samples into a typical lipidomics analysis sequence, illustrating their central role in quality assurance.

Figure 1: Integrated workflow for QC samples in lipidomics. This diagram outlines the process from sample source through data analysis, highlighting the critical role of interspersed PQC and sQC samples in monitoring the entire LC-MS sequence.

Quantitative QC Metrics and Data Pre-processing

Once the LC-MS data is acquired, the stability of the measurement is quantified using the PQC and sQC samples. The table below summarizes key metrics and pre-processing steps used to ensure data quality.

Table 2: Key Quantitative Metrics for Lipidomics QC Assessment

| Metric | Description | Target / Acceptable Threshold | Application in Data Pre-processing |

|---|---|---|---|

| Retention Time Drift | The shift in the elution time of a lipid species across the sequence. | < 0.1 min or 2% RSD [28] | Enables alignment of lipid peaks across all samples. |

| Signal Intensity Drift | The change in peak area/height for a lipid in QCs over the sequence. | Monitor for consistent trend; used for correction. | Normalization algorithms (e.g., LOWESS) use PQC data to correct for systematic drift in study samples [2]. |

| Relative Standard Deviation (RSD) | The coefficient of variation (%CV) of a lipid's signal intensity across all PQC injections. | < 20-30% for a robust method [2] [28] | Lipids with high RSD in PQC are often flagged as unreliable and removed from downstream analysis. |

| Total Ion Chromatogram (TIC) Stability | The consistency of the total signal across the chromatographic run in QCs. | Stable baseline, reproducible profile. | Used for initial, gross-level quality assessment of each injection. |

| Lipid Identification Confidence | The level of certainty in annotating a lipid species, based on MS/MS, standards, or ion mobility. | Level 1 (identified) > Level 3 (putative) [29] | Annotations in the final dataset are often filtered based on a minimum confidence level. |

The power of PQC samples is fully realized during data processing. By calculating the Relative Standard Deviation (RSD) for each quantified lipid across the PQC injections, researchers can objectively assess the precision of their analytical method. Lipids with an RSD below an acceptable threshold (e.g., 20-30%) in the PQC samples are considered to have been measured with sufficient reliability for biological interpretation [2] [28]. Furthermore, machine learning models for biomarker discovery can be trained on datasets where unreliable variables (high RSD lipids) have been filtered out, leading to more robust and reproducible classifiers [5]. The sQC/LTR samples provide a second layer of assurance, allowing for the monitoring of long-term instrumental performance and facilitating the merging of datasets acquired over extended periods [2].
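The retention time drift criterion in Table 2 can be checked per lipid with a short sketch. It reads the threshold "< 0.1 min or 2% RSD" literally, accepting a lipid if either condition holds, which is an interpretation rather than something the table states; the function names and retention times are hypothetical.

```python
from statistics import mean, stdev

def rt_drift(rts):
    """Return (max shift in min, RSD in %) for one lipid across QC injections."""
    shift = max(rts) - min(rts)
    rsd = 100.0 * stdev(rts) / mean(rts)
    return shift, rsd

def rt_stable(rts, max_shift_min=0.1, max_rsd=2.0):
    """True if the shift is below max_shift_min or the RSD is below max_rsd."""
    shift, rsd = rt_drift(rts)
    return shift < max_shift_min or rsd < max_rsd

# Hypothetical retention times (min) of one lipid in five PQC injections.
stable_lipid = rt_stable([5.40, 5.42, 5.41, 5.44, 5.43])
drifting_lipid = rt_stable([5.40, 5.60, 5.80, 6.00, 6.20])

print(stable_lipid, drifting_lipid)
```

A lipid that fails this check may still be quantifiable after peak alignment, so the result is better treated as a prompt for inspection than as an automatic exclusion.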

In mass spectrometry-based lipidomics, the accuracy and reproducibility of data acquired across large-scale, multibatch studies are paramount. The inherent complexity of biological samples, combined with potential analytical variations introduced during lengthy instrument runs, necessitates robust quality control (QC) strategies. Pooled Quality Control (PQC) samples, typically derived from a representative pool of all study samples, are the cornerstone of such strategies. This application note delineates evidence-based protocols for the optimal frequency and placement of QC sample injections within an analytical sequence, contextualized within a broader thesis on quality control for lipidomic analysis. The systematic integration of these practices is critical for monitoring instrument stability, evaluating batch effects, and ensuring the generation of high-quality, reliable lipidomic data suitable for research and drug development.

The Critical Role of QC Samples in Lipidomics

Lipidomics workflows are susceptible to numerous sources of variation, including batch-to-batch analytical variation, ion suppression effects, and instrumental drift over time [21]. Quality control samples serve as a vital tool to monitor, detect, and correct for these variations. The primary objectives of integrating QC samples are:

- Monitoring Stability: To track the performance of the liquid chromatography-tandem mass spectrometry (LC-MS/MS) system throughout the acquisition sequence.

- Assessing Precision: To evaluate the technical reproducibility of the lipid measurements.

- Data Pre-processing: To facilitate the correction of systematic drift and filter out unreliable lipid species based on their variance in QC samples [21]. For instance, in a large-scale lipidomic workflow, lipids with a relative standard deviation (RSD) of more than 30% in replicate QC plasma samples are often filtered out to ensure data quality [21].

- Acting as a Surrogate: Commercial plasma can be evaluated as a surrogate QC (sQC) or long-term reference (LTR) when a sufficient volume of a study-specific pooled sample is unavailable [2].

The following workflow diagram illustrates the integration of QC samples within a comprehensive lipidomics analysis pipeline.

Optimal Frequency and Placement of QC Injections

The strategic placement and density of QC injections within a sequence are critical for capturing and correcting for analytical variation. The following recommendations are synthesized from established, large-scale lipidomic studies.

Empirical Guidelines from Large-Scale Studies

Large-scale lipidomic studies provide concrete evidence for effective QC strategies. One optimized workflow for quantifying 1163 lipid species across 16 independent batches (total injection count = 6142) embedded replicate QC plasma samples throughout the acquisition [21]. The performance of these QCs was used to ensure robustness, with 820 lipids reporting a relative standard deviation (RSD) of <30% in the 1048 replicate QC samples, demonstrating the high precision achievable with rigorous QC [21].

Recommended QC Injection Protocol

The table below summarizes the empirical guidelines for QC injection frequency and placement, derived from current literature.

Table 1: Protocol for QC Sample Injection Frequency and Placement

| Stage in Sequence | Recommended Practice | Rationale and Purpose |
|---|---|---|
| System Equilibration | Inject a minimum of 3-5 pooled QC samples at the beginning of the sequence until stable response is observed. [21] [30] | Conditions the analytical system (column and ion source) and establishes a baseline for stable lipid signals. Data from these initial injections are typically excluded from final QC assessment. |
| Start of Batch | Inject one or more QC samples after the conditioning phase. | Provides a baseline measurement for instrumental performance at the start of data acquisition. |
| Throughout the Run | Inject QC samples at regular intervals, approximately every 6-10 analytical samples. [21] [30] | Enables continuous monitoring of instrumental drift (retention time, signal intensity) and batch-to-batch variation. This frequency is sufficient to model and correct for systematic errors. |
| End of Batch | Inject one or more QC samples at the conclusion of the sequence. | Allows for assessment of total system drift over the entire batch run time. |

A key practice is the use of a randomized sample injection order to avoid confounding analytical effects with biological groups. The placement of QC samples, however, remains fixed and systematic within this randomized sequence to accurately model technical variance.
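The combination of a randomized sample order with fixed QC positions can be expressed as a small sequence builder. The sketch below is one possible reading of Table 1, not a prescribed implementation: the function name, the injection labels, the default interval of 8, and the fixed random seed are all assumptions chosen for illustration.

```python
import random

def build_sequence(sample_ids, qc_interval=8, n_conditioning=5, seed=0):
    """Return an injection list: conditioning QCs, a start QC, randomized
    study samples with a QC after every `qc_interval` samples, and an end QC."""
    rng = random.Random(seed)   # fixed seed keeps the randomized order reproducible
    samples = list(sample_ids)
    rng.shuffle(samples)        # only the study samples are randomized

    sequence = [("conditioning_QC", f"COND_{i + 1}") for i in range(n_conditioning)]
    sequence.append(("QC", "QC_start"))
    qc_count = 0
    for position, sample in enumerate(samples, start=1):
        sequence.append(("sample", sample))
        # QC placement is systematic; skip it when the end QC follows directly.
        if position % qc_interval == 0 and position < len(samples):
            qc_count += 1
            sequence.append(("QC", f"QC_{qc_count}"))
    sequence.append(("QC", "QC_end"))
    return sequence

seq = build_sequence([f"S{i:03d}" for i in range(1, 21)], qc_interval=8)
for kind, name in seq:
    print(kind, name)
```

For 20 study samples this yields 29 injections: five conditioning injections, a start QC, two interspersed QCs, and an end QC around the randomized samples. Conditioning injections carry their own label so they can be excluded from the final QC assessment.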

Detailed Experimental Protocol for QC-Based Lipidomics

This section provides a detailed methodology for a robust lipidomic analysis, incorporating the QC strategy outlined above.

Materials and Reagents

- Biological Samples: Plasma, serum, or tissue samples from study cohorts and healthy volunteers for PQC preparation. [21]

- Commercial QC Material: Commercially available pooled human plasma (e.g., from BioIVT) can be used as a surrogate QC (sQC) or long-term reference (LTR). [2] [21]

- Internal Standards: A comprehensive mixture of stable isotope-labeled internal standards (SIL-IS) is crucial. Examples include the Lipidyzer Internal Standards Kit (SCIEX) and SPLASH LipidoMIX (Avanti Polar Lipids). [21] These are diluted in propan-2-ol (IPA) to create a working solution.

- Extraction Solvents: LC-MS grade solvents are mandatory. Common choices include methyl tert-butyl ether (MTBE) and methanol; MTBE-based phase separation is widely used. [31] [30]

- LC-MS Mobile Phases: Mobile phase A: Water/Acetonitrile/Propan-2-ol (e.g., 50/30/20, v/v/v) with 10 mM ammonium acetate. Mobile phase B: Propan-2-ol/Acetonitrile/Water (e.g., 90/9/1, v/v/v) with 10 mM ammonium acetate. [21]
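Preparing the mobile phases involves two small calculations: the mass of ammonium acetate for a 10 mM solution and the component volumes for a given v/v/v ratio. The helper below is a sketch with hypothetical function names; it uses the molar mass of ammonium acetate (77.08 g/mol) and treats solvent volumes as additive, which is only an approximation for mixed solvents.

```python
AMMONIUM_ACETATE_MW = 77.08  # g/mol

def additive_mass_g(conc_mM, volume_L, mw=AMMONIUM_ACETATE_MW):
    """Mass of additive (g) needed for `conc_mM` in `volume_L` of mobile phase."""
    return conc_mM / 1000.0 * volume_L * mw

def component_volumes_mL(ratio, total_mL):
    """Split a total volume according to a v/v/v ratio (volumes assumed additive)."""
    total_parts = sum(ratio.values())
    return {name: total_mL * parts / total_parts for name, parts in ratio.items()}

# Example ratios from the materials list, scaled to 1 L of each phase.
phase_a = component_volumes_mL({"water": 50, "acetonitrile": 30, "propan-2-ol": 20}, 1000)
phase_b = component_volumes_mL({"propan-2-ol": 90, "acetonitrile": 9, "water": 1}, 1000)
mass_per_litre = additive_mass_g(10, 1.0)

print(phase_a)
print(phase_b)
print(f"Ammonium acetate per litre: {mass_per_litre:.4f} g")
```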

Step-by-Step Procedure

Sample Preparation and Lipid Extraction

- Thawing: Thaw frozen plasma/serum samples on ice. [31]

- Aliquoting: Pipette a precise volume (e.g., 50 μL) of sample, including study samples, PQC, and sQC, into a labeled tube. [31]

- Internal Standard Addition: Add a fixed volume of the SIL-IS working solution to each sample. This step is critical for compensating for matrix effects and enabling quantitative accuracy. [21]

- Lipid Extraction: Perform lipid extraction. For the MTBE method:

  - Add 300 μL of pre-cooled methanol to the sample and vortex for 1 minute. [31]

  - Add 1 mL of MTBE, vortex for 1 minute, and gently agitate for 1 hour. [31]

  - Add 300 μL of water to induce phase separation, vortex for 1 minute, and incubate at 4°C for 10 minutes. [31]

  - Centrifuge at 14,000 × g for 15 minutes at 4°C. [31]

- Collection and Reconstitution: Collect the upper organic layer (lipid-containing phase). Aliquot, vacuum-dry, and store the dried lipid extract at -80°C. Before LC-MS analysis, reconstitute the dried extract in an appropriate solvent mixture (e.g., Acetonitrile/Isopropanol/Water, 65/30/5, v/v/v). [31]
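When planning a batch, it helps to total the extraction solvents before starting. The sketch below uses the per-sample volumes from the MTBE steps above; the function name and the 10% overage are assumptions, and the internal standard volume is left out because the protocol does not specify it.

```python
# Per-sample solvent volumes (μL) taken from the MTBE extraction steps.
PER_SAMPLE_UL = {"methanol": 300, "MTBE": 1000, "water": 300}

def reagent_totals_mL(n_tubes, overage=0.10):
    """Total solvent volumes in mL for `n_tubes` extractions plus an overage."""
    return {
        name: round(volume * n_tubes * (1 + overage) / 1000, 2)
        for name, volume in PER_SAMPLE_UL.items()
    }

# Liquid volume per tube, excluding the internal standard solution (volume not given).
sample_ul = 50
tube_volume_ul = sample_ul + sum(PER_SAMPLE_UL.values())

totals = reagent_totals_mL(96)   # study samples, PQC, and sQC tubes combined
print(totals)
print(f"Liquid per tube before internal standard: {tube_volume_ul} μL")
```

The per-tube total of 1650 μL (before the internal standard) indicates that tubes of at least 2 mL are needed for this example.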

Analytical Sequence Design and LC-MS Analysis

- Sequence Design:

  - Program the autosampler sequence to begin with 3-5 injections of PQC for system conditioning.

  - Randomize the injection order of all study samples.

  - Intersperse PQC and/or sQC samples at regular intervals (every 6-10 study samples) throughout the sequence.

  - Conclude the sequence with one or more QC injections.

- Liquid Chromatography:

  - Column: Use a reversed-phase C18 column (e.g., Waters Acquity BEH C18, 1.7 μm, 2.1 × 100 mm) maintained at 55-60°C. [21]

  - Gradient: Employ a binary gradient with a total cycle time of 15-20 minutes. A representative gradient starts at 10% B, ramping to 100% B over 12-13 minutes, holding, and re-equilibrating. [21]

  - Flow Rate: 0.4 mL/min. [21]

  - Injection Volume: 5 μL. [21]

- Mass Spectrometry:

  - Instrument: Triple quadrupole (QQQ) mass spectrometer (e.g., SCIEX QTRAP 6500+). [21]

  - Ionization: Electrospray Ionization (ESI) with rapid polarity switching.

  - Acquisition Mode: Time-scheduled Multiple Reaction Monitoring (MRM).

  - Source Parameters: Capillary voltage: +5500 V/-4500 V; Temperature: 300°C; Curtain gas: 20 psi; Ion source gas 1 & 2: 40 and 60 psi. [21]

Data Processing and Quality Assessment

Following data acquisition, the performance of the QC protocol must be quantitatively assessed.

- Peak Integration and Data Pre-processing: Import raw data into specialized software (e.g., Skyline) for peak integration and calculation of analyte-to-internal standard response ratios. [21]

- QC-Based Filtering: Apply data quality filters. A standard practice is to remove lipid species with an RSD greater than 20-30% in the PQC injections, as this indicates poor analytical precision. [21]

- Drift Correction: Apply statistical algorithms (e.g., locally estimated scatterplot smoothing - LOESS) to the response ratios of lipids in the sequentially injected QCs to correct for systematic signal drift over time.
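The drift-correction step can be illustrated with a basic LOESS fit through the QC responses, followed by division of each study sample by the fitted QC value at its injection position. The code below is a simplified, dependency-free sketch and not the implementation used in the cited workflow: the function names, the span of 0.75, the rescaling to the QC median, and the example data are all assumptions. Production work would normally rely on an established LOESS implementation and on a span tuned to the data.

```python
from statistics import median

def loess_predict(x_train, y_train, x0, span=0.75):
    """Local linear regression at x0 with tricube weights (a basic LOESS)."""
    n = len(x_train)
    k = min(n, max(3, round(span * n)))           # points in the local window
    d_max = sorted(abs(x - x0) for x in x_train)[k - 1] or 1.0
    sw = swx = swy = swxx = swxy = 0.0
    for x, y in zip(x_train, y_train):
        u = abs(x - x0) / d_max
        w = (1 - u ** 3) ** 3 if u < 1 else 0.0   # tricube kernel
        sw += w
        swx += w * x
        swy += w * y
        swxx += w * x * x
        swxy += w * x * y
    if sw == 0:
        return sum(y_train) / n                   # degenerate window: plain mean
    denom = sw * swxx - swx ** 2
    if abs(denom) < 1e-12:
        return swy / sw                           # one effective point: weighted mean
    slope = (sw * swxy - swx * swy) / denom
    intercept = (swy - slope * swx) / sw
    return intercept + slope * x0

def correct_drift(qc_order, qc_values, sample_order, sample_values, span=0.75):
    """Divide each sample by the fitted QC trend and rescale to the QC median."""
    reference = median(qc_values)
    return [
        value / loess_predict(qc_order, qc_values, x0, span) * reference
        for x0, value in zip(sample_order, sample_values)
    ]

# Hypothetical lipid whose response falls by 20% over 50 injections.
qc_order = [0, 10, 20, 30, 40, 50]
qc_values = [1.00, 0.96, 0.92, 0.88, 0.84, 0.80]
# Two injections of the same true abundance, early and late in the run.
corrected = correct_drift(qc_order, qc_values, [5, 45], [0.49, 0.41])
print([round(v, 3) for v in corrected])
```

In this synthetic example the early and late injections differ by about 16% before correction and agree after it, which is the behaviour the drift correction is meant to achieve.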

Table 2: Key Performance Metrics for Evaluating QC Data in Lipidomics

| Metric | Target Value | Interpretation |
|---|---|---|
| Relative Standard Deviation (RSD) | < 20-30% for the majority of lipids in PQC injections [21] | Measures analytical precision. Lipids with RSD exceeding the threshold should be considered unreliable and filtered out. |
| Retention Time Shift | < 0.1 min across the entire sequence | Indicates chromatographic stability. Significant drift may suggest column degradation or mobile phase issues. |
| Signal Intensity Drift | Corrected via algorithms (e.g., LOESS) using PQC data [21] | Monitors instrumental sensitivity changes over time. Successful correction is key for valid inter-batch comparisons. |
| Batch-to-Batch Variation | Low inter-instrument and inter-batch RSD (e.g., <30% for 820 lipids across 16 batches) [21] | Demonstrates the robustness and transferability of the workflow, enabling large-scale, multi-cohort studies. |
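The retention time criterion in Table 2 can be checked per lipid across the QC injections. The sketch below interprets "shift" as the range (maximum minus minimum) of the retention times observed in the QCs, which is an assumption; the function names, lipid names, and retention times are hypothetical.

```python
def rt_shift_min(retention_times):
    """Retention time range (max - min, in minutes) across QC injections."""
    return max(retention_times) - min(retention_times)

def flag_rt_drift(qc_rts, max_shift=0.1):
    """Return lipids whose retention time range reaches or exceeds max_shift."""
    flagged = {}
    for lipid, rts in qc_rts.items():
        shift = rt_shift_min(rts)
        if shift >= max_shift:
            flagged[lipid] = round(shift, 3)
    return flagged

# Hypothetical retention times (min) from four QC injections spread over a sequence.
qc_rts = {
    "LPC 18:1": [2.41, 2.42, 2.40, 2.43],
    "TG 54:3": [11.80, 11.86, 11.93, 11.95],
}
flagged = flag_rt_drift(qc_rts)
print(flagged)
```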

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 3: Key Reagents and Materials for QC-Based Lipidomics

| Item | Function | Example Products / Specifications |
|---|---|---|
| Stable Isotope-Labeled Internal Standards (SIL-IS) | Compensate for matrix effects, extraction efficiency variances, and ion suppression; enable precise quantification. | Lipidyzer IS Kit (SCIEX), SPLASH LipidoMIX (Avanti Polar Lipids) [21] |
| Pooled Quality Control (PQC) Material | Monitors analytical performance, stability, and precision throughout the sequence. | Study-specific pool, Commercial pooled human plasma (e.g., BioIVT) [2] [21] |
| LC-MS Grade Solvents | Ensure high purity, minimize background noise, and prevent instrument contamination. | Optima LC/MS Grade (Thermo Fisher) [21] |
| Solid-Phase Extraction (SPE) Plates | Optional for phospholipid removal to reduce matrix effects, particularly for fatty acid analysis. [32] | Various manufacturers |
| Mass Spectrometry Quality Control Software | Data processing, peak integration, calculation of response ratios, and lipid quantification. | Skyline, LipidSearch [21] [30] |


The rigorous implementation of a QC strategy, defined by the optimal frequency and placement of QC samples, is non-negotiable for generating high-fidelity lipidomic data. The protocols detailed herein—system conditioning with PQC, regular intercalation every 6-10 samples, and data filtering based on RSD in QCs—provide a proven framework for mitigating analytical variance. Adherence to this standardized protocol ensures that lipidomic data is robust, reproducible, and suitable for advancing research in basic science, biomarker discovery, and drug development.

In mass spectrometry-based lipidomics, the reliability of data is paramount for meaningful biological interpretation. Robust quality control (QC) is essential to monitor analytical performance and ensure the validity of lipid identification and quantification. This protocol details the application of key performance indicators (KPIs)—mass accuracy, retention time, and peak area—within a lipidomics QC framework. We provide detailed methodologies for establishing and utilizing pooled quality control samples to track analytical variation, alongside a novel scoring system for evaluating data quality in the context of large-scale lipidomic studies.

Lipidomics, the large-scale study of lipids, leverages mass spectrometry (MS) to identify and quantify hundreds to thousands of lipid species from biological samples. However, the human lipidome exhibits significant inter-individual variability, influenced by genotype, diet, and gut flora, which complicates data analysis and necessitates robust study design [33]. Furthermore, the analytical process itself introduces variation that can compromise data integrity if not properly monitored and controlled.

Quality control samples, particularly pooled quality control (PQC) samples created by combining aliquots of all study samples, serve as a critical tool for this purpose. They act as a surrogate for the entire sample set, allowing researchers to monitor the stability and performance of the analytical sequence over time [2]. Evaluating KPIs against pre-defined acceptance criteria for these QC samples provides a quantitative measure of data quality. This is especially vital when chromatographic retention time is used to validate lipid identifications and when subtle, but biologically significant, changes in lipid abundance must be reliably detected.

Core Performance Indicators and Their Significance

Three KPIs form the foundation of analytical quality control in lipidomics. They assess different aspects of the mass spectrometry platform's performance, from correct identification to precise quantification.

Table 1: Key Performance Indicators in Lipidomics QC

| Key Performance Indicator | Analytical Aspect Monitored | Impact on Data Quality | Typical Acceptance Criteria |
|---|---|---|---|
| Mass Accuracy | Mass spectrometer calibration and performance | Confidence in lipid identification | ≤ 5 ppm (high-resolution MS) |
| Retention Time | Chromatographic system stability | Confidence in lipid identification and detection of co-eluting isomers | Low relative standard deviation (e.g., < 2%) |
| Peak Area | Detection system stability and sample preparation reproducibility | Confidence in lipid quantification and ability to detect true biological variation | Low relative standard deviation (e.g., < 15-20% in PQC) |

Mass Accuracy

Mass accuracy refers to the difference between the measured mass-to-charge ratio (m/z) of an ion and its true theoretical value. It is typically reported in parts per million (ppm). High mass accuracy is a prerequisite for confident lipid annotation, as it drastically reduces the number of potential molecular formula assignments for a given peak.
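The ppm error is the difference between measured and theoretical m/z, divided by the theoretical m/z and multiplied by one million. A minimal sketch follows; the function names and the measured values are hypothetical, and the theoretical m/z of 760.5851 corresponds to the protonated ion of PC 34:1, used here purely as an illustration.

```python
def mass_error_ppm(measured_mz, theoretical_mz):
    """Signed mass error in parts per million."""
    return (measured_mz - theoretical_mz) / theoretical_mz * 1e6

def within_tolerance(measured_mz, theoretical_mz, tol_ppm=5.0):
    """True if the absolute mass error is within the ppm tolerance."""
    return abs(mass_error_ppm(measured_mz, theoretical_mz)) <= tol_ppm

theoretical = 760.5851   # PC 34:1 [M+H]+, illustrative
for measured in (760.5870, 760.5900):   # hypothetical measurements
    error = mass_error_ppm(measured, theoretical)
    print(f"{measured:.4f}: {error:+.2f} ppm, pass={within_tolerance(measured, theoretical)}")
```

The first hypothetical measurement falls within the 5 ppm criterion and the second does not, even though the two differ by only 0.003 m/z units.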

Retention Time

Retention time (RT) is the time at which a lipid elutes from the chromatographic column. Stable RT is critical for two reasons: First, it is used to align features across multiple samples in a dataset. Second, and more importantly, corroborating the measured RT of an identified lipid with an expected value based on a standard or a retention time model is a powerful method to eliminate false-positive annotations [9]. Lipids follow predictable retention behavior patterns, such as the Equivalent Carbon Number (ECN) model in reversed-phase chromatography, and deviations from this model can indicate misidentification.
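A retention time plausibility check based on the ECN can be sketched as follows. The example assumes the common definition ECN = CN - 2 × DB (total acyl carbons minus twice the number of double bonds) and an approximately linear relationship between ECN and RT within one lipid class, fitted on trusted reference lipids. The function names, the 0.3 min tolerance, and all retention times are hypothetical; real retention behavior is often not strictly linear, so this is an illustration of the idea rather than a validated model.

```python
def equivalent_carbon_number(carbons, double_bonds):
    """ECN = CN - 2 * DB (assumed definition for reversed-phase behavior)."""
    return carbons - 2 * double_bonds

def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

def flag_rt_outliers(references, candidates, tolerance_min=0.3):
    """Flag candidate annotations whose RT deviates from the ECN trend."""
    ecns = [equivalent_carbon_number(c, d) for _, c, d, _ in references]
    rts = [rt for _, _, _, rt in references]
    slope, intercept = fit_line(ecns, rts)
    flagged = []
    for name, carbons, double_bonds, rt in candidates:
        expected = intercept + slope * equivalent_carbon_number(carbons, double_bonds)
        if abs(rt - expected) > tolerance_min:
            flagged.append(name)
    return flagged

# (name, total carbons, double bonds, RT in min); all values hypothetical.
references = [("TG 48:2", 48, 2, 10.0), ("TG 48:1", 48, 1, 10.8),
              ("TG 48:0", 48, 0, 11.6), ("TG 50:0", 50, 0, 12.4)]
candidates = [("TG 52:3", 52, 3, 10.85), ("TG 54:2", 54, 2, 11.20)]
suspect = flag_rt_outliers(references, candidates)
print(suspect)
```

Here the second candidate elutes more than a minute earlier than its ECN predicts, so it is flagged for manual review as a possible misidentification.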

Peak Area

The peak area of a lipid species is a direct measure of its abundance. The consistency of peak areas for individual lipids across repeated injections of the PQC sample reflects the combined precision of the sample preparation, chromatography, and mass spectrometry. High variability in the PQC indicates technical instability, which reduces the statistical power to detect true biological differences between experimental groups.

Experimental Protocols for QC Preparation and Analysis

Protocol: Preparation of Pooled Quality Control (PQC) Samples

- Aliquot Pooling: Combine equal-volume aliquots from each individual study sample into a single container.

- Homogenization: Vortex the pooled mixture thoroughly to ensure homogeneity.

- Aliquoting: Dispense the homogenized PQC into multiple low-volume, single-use vials to minimize freeze-thaw cycles.

- Storage: Store all PQC aliquots at -80°C under conditions identical to the study samples.
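Before pooling, it is useful to estimate how much PQC material the sequence will consume and how much each study sample must contribute. The planning sketch below rests on several assumptions that are not part of the protocol: each QC injection is prepared from its own extracted aliquot, the sequence uses five conditioning injections, one start QC, one end QC, and one QC per eight study samples, and a 20% reserve is added. The function name and all defaults are hypothetical.

```python
import math

def plan_pqc(n_samples, qc_interval=8, n_conditioning=5,
             aliquot_ul=50, reserve=0.20):
    """Estimate PQC aliquots, pooled volume (μL), and per-sample contribution (μL)."""
    n_interspersed = n_samples // qc_interval
    n_qc = n_conditioning + 1 + n_interspersed + 1   # conditioning, start, run, end
    pooled_ul = n_qc * aliquot_ul * (1 + reserve)
    per_sample_ul = math.ceil(pooled_ul / n_samples)  # round up to whole microlitres
    return n_qc, pooled_ul, per_sample_ul

n_qc, pooled_ul, per_sample_ul = plan_pqc(200)
print(f"{n_qc} PQC aliquots, {pooled_ul:.0f} μL pooled, {per_sample_ul} μL per sample")
```

For a 200-sample study under these assumptions, about 10 μL from each sample is enough to supply every planned PQC aliquot with some reserve.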