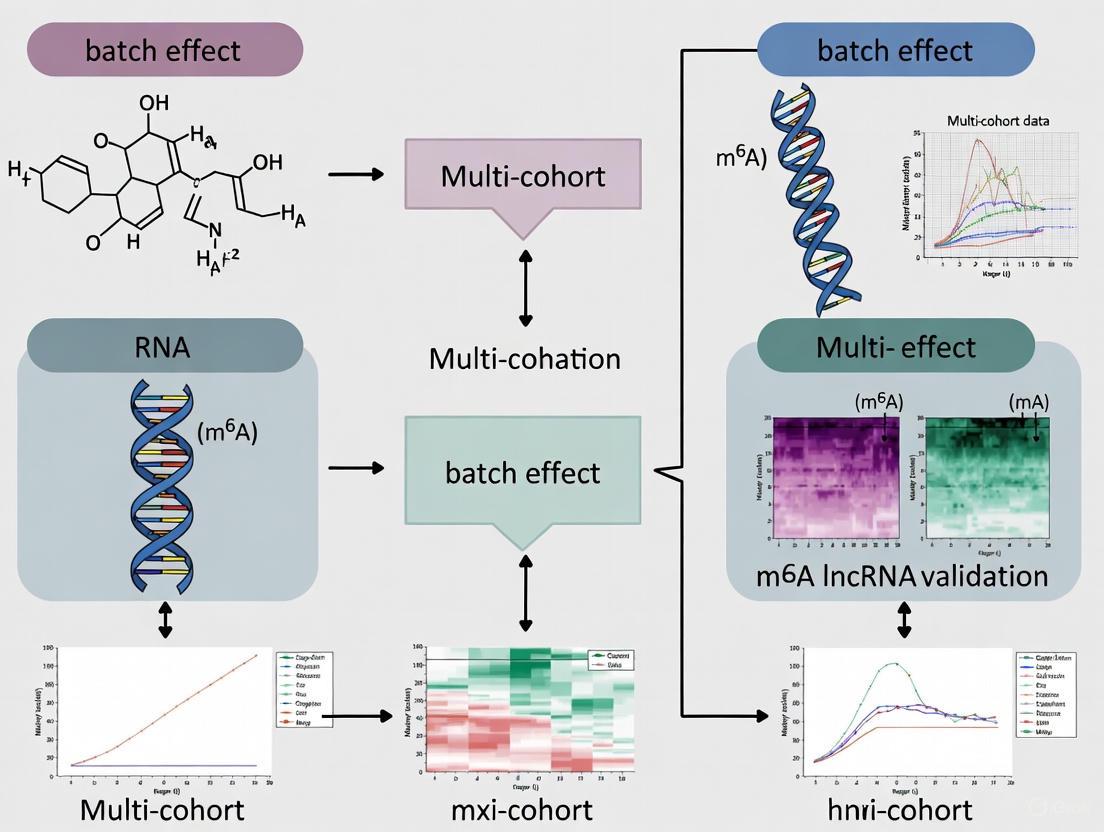

Navigating Batch Effects in Multi-Cohort m6A-lncRNA Studies: A Comprehensive Guide from Discovery to Clinical Validation

Abstract

The integration of multi-cohort data is paramount for validating robust m6A-related lncRNA signatures in cancer research, yet it is severely compromised by pervasive batch effects. This article provides a comprehensive framework for researchers and bioinformaticians, addressing the foundational principles of batch effects in omics data, practical methodologies for their correction in confounded study designs, advanced troubleshooting for complex scenarios, and robust strategies for the final validation of prognostic or diagnostic models. By synthesizing current evidence and best practices, this guide aims to enhance the reliability, reproducibility, and clinical translatability of multi-cohort m6A-lncRNA studies, ultimately fostering precision medicine advancements.

Understanding the Critical Impact of Batch Effects on m6A-lncRNA Biomarker Discovery

In multi-cohort m6A lncRNA validation research, batch effects represent one of the most significant technical challenges compromising data integrity and biological discovery. These systematic technical variations, unrelated to the biological signals of interest, are introduced during various experimental stages and can lead to misleading conclusions if not properly addressed [1] [2]. In the context of m6A lncRNA studies, where researchers investigate RNA modifications and their functional implications across multiple cohorts, batch effects can obscure true biological relationships, hinder reproducibility, and ultimately invalidate research findings [3] [4].

The fundamental issue arises from the basic assumption in omics data representation that instrument readouts linearly reflect biological analyte concentrations. In practice, fluctuations in experimental conditions disrupt this relationship, creating inherent inconsistencies across different batches [1]. For m6A lncRNA research integrating data from multiple sources—such as different sequencing platforms, laboratory protocols, or analysis pipelines—these technical variations can become confounded with the biological signals of interest, particularly when investigating subtle modification patterns or expression changes [3] [5].

Understanding Batch Effects: Definitions and Impact

What Are Batch Effects?

Batch effects are technical variations systematically introduced into high-throughput data due to differences in experimental conditions rather than biological factors [1] [2]. These non-biological variations can emerge at every step of a typical high-throughput study, from sample collection and preparation to sequencing and data analysis [1] [6].

In multi-cohort m6A lncRNA research, a "batch" refers to any group of samples processed differently from other groups in the experiment. This could include samples sequenced on different instruments, processed by different personnel, prepared using different reagent lots, or analyzed at different times [2] [7]. The complexity is magnified when integrating data from multiple studies or laboratories, as each may employ distinct protocols and technologies [1].

Profound Negative Impacts on Research

The consequences of uncorrected batch effects in m6A lncRNA studies can be severe and far-reaching:

Misleading Conclusions: Batch effects can introduce noise that dilutes biological signals, reduces statistical power, or generates false positives in differential expression analysis [1]. When batch effects correlate with biological outcomes, they can lead to incorrect interpretations of the data [1].

Irreproducibility Crisis: Batch effects are a major contributor to the reproducibility crisis in scientific research [1]. Technical variation from reagent lots and experimental bias can make key findings impossible to reproduce across laboratories, contributing to retracted articles and invalidated research findings [1].

Clinical Implications: In severe cases, batch effects have led to incorrect patient classifications in clinical trials. One documented example resulted in 162 patients receiving incorrect or unnecessary chemotherapy regimens due to batch effects introduced by a change in RNA-extraction solution [1].

Compromised Multi-Omics Integration: For m6A lncRNA studies that often involve multi-omics approaches, batch effects are particularly problematic because they affect different data types measured on different platforms with different distributions and scales [1]. This technical variation can hinder the integration of data from multiple modification types and obscure true biological relationships [5].

Table 1: Documented Impacts of Uncorrected Batch Effects in Biomedical Research

| Impact Category | Specific Consequences | Documented Example |
|---|---|---|
| Scientific Validity | False discoveries, biased results, misleading conclusions | Apparent species differences later traced to batch effects rather than biology [1] |
| Reproducibility | Retracted papers, discredited findings, economic losses | Failed reproducibility of high-profile cancer biology studies [1] |
| Clinical Translation | Incorrect patient classification, inappropriate treatment | 162 patients receiving incorrect chemotherapy regimens [1] |
| Research Efficiency | Wasted resources, delayed discoveries, invalidated biomarkers | Invalidated risk calculation in clinical trial due to RNA-extraction solution change [1] |

Troubleshooting Guide: Detection and Diagnosis

How to Detect Batch Effects in m6A lncRNA Data

Detecting batch effects is a critical first step in addressing them. Several established methods can help identify technical variations in multi-cohort m6A lncRNA datasets:

Visualization Methods:

Principal Component Analysis (PCA): Perform PCA on the normalized but not yet batch-corrected data and inspect the top principal components. Scatter plots of these components often reveal separations driven by batch rather than by biological sources [8] [9] [10]. Samples that cluster primarily by batch rather than by biological condition indicate significant batch effects.

t-SNE/UMAP Plot Examination: Visualization of cell groups on t-SNE or UMAP plots with labels indicating both sample groups and batch numbers can reveal batch effects [8] [9]. Before correction, cells from different batches often cluster separately; after proper correction, biological similarities should drive clustering patterns [8].

Clustering Analysis: Heatmaps and dendrograms showing samples clustered by batches instead of treatments or biological conditions signal potential batch effects [9]. Ideally, samples with the same biological characteristics should cluster together regardless of processing batch.
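The PCA check above can also be expressed as a number. The following is a minimal Python/NumPy sketch, not taken from the cited studies, that computes PC scores from a genes-by-samples log-expression matrix and reports how much of a PC's variance is explained by the batch labels (a one-way ANOVA R²). The function names and the simulated data are illustrative assumptions.

```python
import numpy as np


# Illustrative helpers (hypothetical names), not a library API
def pca_scores(log_expr, n_components=2):
    """PCA on a genes-by-samples log-expression matrix.

    Returns sample scores (samples x components) and the fraction of
    total variance explained by each returned component.
    """
    x = np.asarray(log_expr, dtype=float)
    # Center each gene across samples so PCs describe between-sample variation
    centered = x - x.mean(axis=1, keepdims=True)
    # SVD of the samples-by-genes matrix: scores = U * S
    u, s, _ = np.linalg.svd(centered.T, full_matrices=False)
    explained = s**2 / np.sum(s**2)
    return u[:, :n_components] * s[:n_components], explained[:n_components]


def batch_r2(pc, batches):
    """Share of one PC's variance explained by batch (one-way ANOVA R^2)."""
    pc = np.asarray(pc, dtype=float)
    batches = np.asarray(batches)
    total = np.sum((pc - pc.mean()) ** 2)
    between = sum(
        np.sum(batches == b) * (pc[batches == b].mean() - pc.mean()) ** 2
        for b in np.unique(batches)
    )
    return float(between / total) if total > 0 else 0.0


# Simulated example: 200 genes x 12 samples; batch "B" shifted by +2 log units
rng = np.random.default_rng(0)
batches = np.array(["A"] * 6 + ["B"] * 6)
log_expr = rng.normal(5.0, 0.5, size=(200, 12))
log_expr[:, batches == "B"] += 2.0

scores, explained = pca_scores(log_expr)
r2_pc1 = batch_r2(scores[:, 0], batches)
print(f"PC1 explains {explained[0]:.0%} of variance; batch R^2 on PC1 = {r2_pc1:.2f}")
```

A batch R² close to 1 on a leading PC is the numeric counterpart of samples separating by batch in the scatter plot; the same function can be applied to any known technical covariate.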

Quantitative Metrics:

For objective assessment, several quantitative metrics can detect batch effects with less human bias [8] [9] [6]:

Table 2: Quantitative Metrics for Batch Effect Detection and Evaluation

| Metric | Purpose | Interpretation |
|---|---|---|
| kBET (k-nearest neighbor batch effect test) | Tests whether cells from different batches mix well in local neighborhoods | Higher acceptance rates (lower rejection rates) indicate better mixing |
| LISI (Local Inverse Simpson's Index) | Measures diversity of batches in local neighborhoods | Values closer to the total number of batches indicate better integration |
| ARI (Adjusted Rand Index) | Compares clustering consistency with known cell types | Higher values (closer to 1) indicate better preservation of biological identity |
| NMI (Normalized Mutual Information) | Measures the overlap between batch labels and clustering | Lower values indicate less batch-specific clustering |
| ASW (Average Silhouette Width) | Evaluates separation between batches and between cell types | Batch ASW close to 0 indicates good batch mixing; higher cell-type ASW indicates preserved biological separation |
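To make the LISI row concrete, here is a simplified Python/NumPy sketch. It is an approximation under stated assumptions: the published LISI weights neighbors with a perplexity-calibrated Gaussian kernel, whereas this version uses an unweighted k-nearest-neighbor set and brute-force distances suitable only for small examples. The function name is hypothetical.

```python
import numpy as np


# Illustrative helper (hypothetical name); simplified, not the official LISI
def simple_lisi(embedding, labels, k=10):
    """Mean inverse Simpson index of `labels` among each sample's k nearest neighbors.

    Uses uniform neighbor weights instead of LISI's perplexity-based
    Gaussian weights; O(n^2) memory, so only for small illustrations.
    """
    x = np.asarray(embedding, dtype=float)
    labels = np.asarray(labels)
    # Pairwise squared Euclidean distances between samples
    d = np.sum((x[:, None, :] - x[None, :, :]) ** 2, axis=-1)
    np.fill_diagonal(d, np.inf)  # a sample is not its own neighbor
    scores = []
    for i in range(x.shape[0]):
        neighbors = np.argsort(d[i])[:k]
        _, counts = np.unique(labels[neighbors], return_counts=True)
        p = counts / counts.sum()
        scores.append(1.0 / np.sum(p**2))
    return float(np.mean(scores))


rng = np.random.default_rng(1)
points = rng.normal(size=(40, 2))

# Two batches interleaved in the same region of the embedding
mixed_labels = np.array(["A", "B"] * 20)
lisi_mixed = simple_lisi(points, mixed_labels)

# Same points, but batch B moved far away from batch A
separated = points.copy()
separated[20:] += 10.0
sep_labels = np.array(["A"] * 20 + ["B"] * 20)
lisi_separated = simple_lisi(separated, sep_labels)
print(f"mixed: {lisi_mixed:.2f}, separated: {lisi_separated:.2f}")
```

With two batches the score ranges from 1 (every neighborhood contains one batch) to 2 (perfectly even mixing), matching the "closer to the total number of batches" reading in the table.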

Diagnostic Workflow

The following workflow diagram illustrates a systematic approach to diagnosing batch effects in multi-cohort m6A lncRNA studies:

Frequently Asked Questions (FAQs)

FAQ 1: What is the difference between normalization and batch effect correction?

Normalization and batch effect correction address different technical variations and operate at different stages of data processing:

Normalization works on the raw count matrix and mitigates technical variations such as sequencing depth across cells, library size, amplification bias, and gene length effects [8]. It aims to make samples comparable by adjusting for global technical differences.

Batch Effect Correction addresses variations caused by different sequencing platforms, timing, reagents, or laboratory conditions [8]. While some methods correct the full expression matrix, many batch correction approaches utilize dimensionality-reduced data to improve computational efficiency [8].

In multi-cohort m6A lncRNA studies, both processes are essential but serve distinct purposes. Normalization should typically be performed before batch effect correction as part of the standard preprocessing workflow.
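As a minimal illustration of the normalization step, kept separate from batch correction, the Python/NumPy sketch below computes log2 counts-per-million. It is a simplification: edgeR's `cpm()` with `log = TRUE` scales the prior count by library size and uses effective (e.g., TMM-adjusted) library sizes when normalization factors are available, which this sketch does not. The function name is hypothetical.

```python
import numpy as np


# Illustrative helper (hypothetical name), simplified relative to edgeR's cpm()
def log_cpm(counts, prior_count=1.0):
    """log2 counts-per-million for a genes-by-samples count matrix.

    Adjusts only for library size (sequencing depth); it does not remove
    batch effects, which are handled in a later step.
    """
    counts = np.asarray(counts, dtype=float)
    lib_sizes = counts.sum(axis=0)  # total counts per sample (column)
    return np.log2((counts + prior_count) / lib_sizes * 1e6)


# Sample 2 is the same library sequenced twice as deeply
counts = np.array([[100, 200],
                   [900, 1800]])
normalized = log_cpm(counts)
print(normalized.round(3))
```

After normalization the two columns are nearly identical, because their only difference was depth; a systematic shift introduced by a different platform or protocol would remain and is the target of the subsequent batch-correction step.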

FAQ 2: How can I determine if my m6A lncRNA data needs batch correction?

Your data likely requires batch correction if you observe:

- Samples clustering primarily by batch rather than biological condition in PCA or UMAP plots [9] [10]

- Quantitative metrics (kBET, LISI, ARI) indicating poor mixing of batches [8] [6]

- Biological replicates from the same condition separating by processing date, reagent lot, or sequencing run [1] [2]

- Known technical covariates (extraction date, personnel, platform) significantly associated with principal components [2]

For multi-cohort m6A lncRNA studies specifically, if you are integrating datasets from different sources or processing periods, proactive batch correction is generally recommended rather than waiting for obvious signals of batch effects [3].

FAQ 3: What are the signs of overcorrection in batch effect adjustment?

Overcorrection occurs when batch effect removal also eliminates genuine biological signals. Key indicators include:

- Distinct cell types clustering together on dimensionality reduction plots (PCA, t-SNE, UMAP) [8] [9]

- Complete overlap of samples from very different biological conditions [9]

- Cluster-specific markers comprising mainly genes with widespread high expression (e.g., ribosomal genes) rather than specific markers [8]

- Significant overlap among markers specific to different clusters [8]

- Absence of expected canonical markers for known cell types present in the dataset [8]

- Scarcity of differential expression hits in pathways expected based on sample composition [8]

In m6A lncRNA research, overcorrection might manifest as loss of known modification patterns or expression differences that should exist between experimental conditions.
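One way to operationalize these checks is to track the group difference of known positive-control genes (for example, lncRNAs with an established m6A-associated expression difference) before and after correction. The Python/NumPy sketch below uses hypothetical helper names and is not a published method. Its toy data deliberately use a fully confounded design, where centering each batch also erases the tumor-versus-normal difference.

```python
import numpy as np


# Illustrative helpers (hypothetical names), not a library API
def group_effect(log_expr, groups, g1, g2):
    """Per-gene difference in mean log expression, group g1 minus group g2."""
    groups = np.asarray(groups)
    x = np.asarray(log_expr, dtype=float)
    return x[:, groups == g1].mean(axis=1) - x[:, groups == g2].mean(axis=1)


def retained_signal(before, after, groups, g1, g2, control_idx):
    """After/before ratio of the group effect for positive-control genes.

    ~1 means the expected difference survived correction; ~0 suggests
    overcorrection. Controls must have a non-zero effect before correction.
    """
    eff_before = group_effect(before, groups, g1, g2)[control_idx]
    eff_after = group_effect(after, groups, g1, g2)[control_idx]
    return eff_after / eff_before


groups = np.array(["tumor"] * 4 + ["normal"] * 4)
batches = groups.copy()                # fully confounded: each group in its own batch
before = np.zeros((3, 8))
before[0, groups == "tumor"] = 3.0     # gene 0: hypothetical positive control

# Naive "correction": center every gene within each batch
after = before.copy()
for b in np.unique(batches):
    cols = batches == b
    after[:, cols] -= after[:, cols].mean(axis=1, keepdims=True)

ratio_unchanged = retained_signal(before, before, groups, "tumor", "normal", [0])
ratio_after = retained_signal(before, after, groups, "tumor", "normal", [0])
print(ratio_unchanged, ratio_after)
```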

FAQ 4: Are batch correction methods for single-cell RNA-seq the same as for bulk RNA-seq?

While the purpose of batch correction—identifying and mitigating technical variations—is the same across platforms, algorithmic approaches often differ:

Bulk RNA-seq Methods: Techniques like ComBat, limma's removeBatchEffect, and SVA were developed for bulk data and may be insufficient for single-cell data due to differences in data size, sparsity, and complexity [8].

Single-cell RNA-seq Methods: Tools such as Harmony, Seurat, MNN Correct, LIGER, and Scanorama are specifically designed to handle the high dimensionality, sparsity (approximately 80% zero values), and cellular heterogeneity of single-cell data [8].

For m6A lncRNA studies using single-cell approaches, selecting methods specifically validated for single-cell data is crucial, as they better account for the unique characteristics of these datasets [8].

FAQ 5: How does sample imbalance affect batch correction in multi-cohort studies?

Sample imbalance—differences in cell type composition, cell numbers per type, and cell type proportions across samples—substantially impacts batch correction outcomes [9]. This is particularly relevant in cancer m6A lncRNA studies, which often exhibit significant intra-tumoral and intra-patient heterogeneity [9].

In imbalanced scenarios:

- Integration techniques may perform differently depending on the degree and type of imbalance [9]

- Downstream analyses and biological interpretation can be significantly affected [9]

- Methods that explicitly account for compositional differences may be preferable [9]

When designing multi-cohort m6A lncRNA studies, striving for balanced representation across batches improves the reliability of batch correction [9].
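Before choosing a method, it can help to tabulate how biological conditions are distributed across batches. The Python/NumPy sketch below builds a batch-by-condition table and summarizes the association with Cramér's V (0 = perfectly balanced, 1 = fully confounded). Cramér's V is an illustrative choice assumed here, not a recommendation from the cited benchmarks, and the helper names are hypothetical.

```python
import numpy as np


# Illustrative helpers (hypothetical names), not a library API
def batch_condition_table(batches, conditions):
    """Counts of samples for each (batch, condition) pair."""
    b_levels = sorted(set(batches))
    c_levels = sorted(set(conditions))
    table = np.zeros((len(b_levels), len(c_levels)))
    for b, c in zip(batches, conditions):
        table[b_levels.index(b), c_levels.index(c)] += 1
    return table, b_levels, c_levels


def cramers_v(table):
    """Cramer's V for a contingency table (needs >= 2 batches and >= 2 conditions)."""
    n = table.sum()
    expected = table.sum(axis=1, keepdims=True) * table.sum(axis=0, keepdims=True) / n
    chi2 = np.sum((table - expected) ** 2 / expected)
    r, c = table.shape
    return float(np.sqrt(chi2 / (n * (min(r, c) - 1))))


batches = ["b1"] * 4 + ["b2"] * 4
balanced = ["tumor", "normal"] * 4
confounded = ["tumor"] * 4 + ["normal"] * 4

v_balanced = cramers_v(batch_condition_table(batches, balanced)[0])
v_confounded = cramers_v(batch_condition_table(batches, confounded)[0])
print(f"balanced V = {v_balanced:.2f}, confounded V = {v_confounded:.2f}")
```

Intermediate values flag partial imbalance, where correction is still possible but method choice and covariate handling matter more.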

Batch Effect Correction Methods and Protocols

Multiple computational methods have been developed to address batch effects in omics data. The choice of method depends on data type (bulk vs. single-cell), study design, and the nature of the batch effects:

Table 3: Common Batch Effect Correction Methods and Their Applications

| Method | Primary Application | Key Algorithm | Advantages | Considerations for m6A lncRNA Studies |
|---|---|---|---|---|
| ComBat/ComBat-seq | Bulk RNA-seq | Empirical Bayes | Effective for known batch effects; ComBat-seq designed for count data | May not handle nonlinear effects; requires known batch information [10] [6] |
| limma removeBatchEffect | Bulk RNA-seq | Linear modeling | Efficient; integrates well with differential expression workflows | Assumes additive batch effects; known batches required [2] [6] |
| SVA | Bulk RNA-seq | Surrogate variable analysis | Captures hidden batch effects; useful when batch labels are incomplete | Risk of removing biological signal; requires careful modeling [6] |
| Harmony | Single-cell RNA-seq | Iterative clustering and correction | Fast runtime; good performance in benchmarks | May be less scalable for very large datasets [8] [9] [7] |
| Seurat Integration | Single-cell RNA-seq | Canonical Correlation Analysis (CCA) and MNN | Widely used; good preservation of biological variation | Lower scalability for very large datasets [8] [9] [7] |
| scANVI | Single-cell RNA-seq | Variational inference and neural networks | Top performance in comprehensive benchmarks | Computational intensity; more complex implementation [9] |
| MNN Correct | Single-cell RNA-seq | Mutual Nearest Neighbors | Identifies shared cell types across batches | High computational resources required [8] [7] |
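To show the core idea behind location/scale methods such as ComBat, the Python/NumPy sketch below standardizes each gene within each batch and rescales it to the overall mean and the pooled within-batch SD. This is a deliberate simplification, not ComBat itself: it omits the empirical Bayes shrinkage of batch parameters and accepts no biological covariates, so in a confounded design it would remove the biological signal as well. The function name is hypothetical.

```python
import numpy as np


# Illustrative helper (hypothetical name); NOT ComBat: no empirical Bayes, no covariates
def location_scale_adjust(log_expr, batches):
    """Per-gene location/scale batch adjustment on a genes-by-samples matrix.

    Each batch needs at least two samples. Biological covariates are NOT
    protected; use only when batch is not confounded with condition.
    """
    x = np.asarray(log_expr, dtype=float)
    batches = np.asarray(batches)
    levels = np.unique(batches)
    # Residuals after removing each batch's per-gene mean
    resid = np.empty_like(x)
    for b in levels:
        cols = batches == b
        resid[:, cols] = x[:, cols] - x[:, cols].mean(axis=1, keepdims=True)
    # Pooled within-batch SD per gene
    pooled_sd = np.sqrt((resid**2).sum(axis=1, keepdims=True) / (x.shape[1] - len(levels)))
    grand_mean = x.mean(axis=1, keepdims=True)

    out = np.empty_like(x)
    for b in levels:
        cols = batches == b
        sd_b = x[:, cols].std(axis=1, ddof=1, keepdims=True)
        sd_b[sd_b == 0] = 1.0  # constant genes: avoid division by zero
        out[:, cols] = (resid[:, cols] / sd_b) * pooled_sd + grand_mean
    return out


rng = np.random.default_rng(2)
batches = np.array(["A"] * 5 + ["B"] * 5)
log_expr = rng.normal(5.0, 1.0, size=(50, 10))
# Batch B: shifted and scaled relative to batch A
log_expr[:, batches == "B"] = log_expr[:, batches == "B"] * 2.0 + 1.0

adjusted = location_scale_adjust(log_expr, batches)
gap_before = np.abs(log_expr[:, batches == "A"].mean(1) - log_expr[:, batches == "B"].mean(1)).mean()
gap_after = np.abs(adjusted[:, batches == "A"].mean(1) - adjusted[:, batches == "B"].mean(1)).mean()
print(f"mean per-gene batch gap: before {gap_before:.2f}, after {gap_after:.2e}")
```

The empirical Bayes step omitted here is what makes ComBat more stable when batches are small, because per-batch means and variances are shrunk toward values shared across genes.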

Practical Correction Protocol for Multi-Cohort m6A lncRNA Data

The following workflow provides a structured approach for batch effect correction in multi-cohort m6A lncRNA studies:

Step-by-Step Implementation:

Data Preprocessing and Normalization

- Begin with quality control and filtering of low-quality cells or samples

- Apply appropriate normalization for your data type (e.g., TMM for bulk RNA-seq; library-size scaling followed by log transformation for single-cell data)

- For multi-cohort m6A lncRNA data, ensure consistent gene annotation across datasets
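For the gene-annotation requirement, one frequent mismatch is Ensembl IDs with and without version suffixes (e.g., `ENSG00000228630.5` vs `ENSG00000228630`). The pure-Python sketch below uses hypothetical helper names and strips versions only from IDs that start with `ENS`, because many lncRNA names (clone-based names of the form `AC012345.1`) legitimately contain a dot. The gene lists are made up for illustration.

```python
# Illustrative helpers (hypothetical names), not a library API
def normalize_id(gene_id):
    """Drop the version suffix from Ensembl IDs; leave other identifiers untouched."""
    if gene_id.startswith("ENS") and "." in gene_id:
        return gene_id.split(".", 1)[0]
    return gene_id


def shared_genes(*cohort_gene_lists):
    """Sorted intersection of harmonized gene IDs across cohorts.

    Raises if stripping versions makes two IDs in one cohort collide,
    since silently merging them would corrupt the expression matrix.
    """
    harmonized = []
    for genes in cohort_gene_lists:
        ids = [normalize_id(g) for g in genes]
        if len(set(ids)) != len(ids):
            raise ValueError("duplicate gene IDs after removing version suffixes")
        harmonized.append(set(ids))
    return sorted(set.intersection(*harmonized))


# Hypothetical gene lists from two cohorts
cohort_a = ["ENSG00000228630.5", "ENSG00000245532.9", "AC012345.1"]
cohort_b = ["ENSG00000228630", "ENSG00000245532.8", "AC012345.1", "ENSG00000251562.3"]
common = shared_genes(cohort_a, cohort_b)
print(common)
```

Microarray cohorts need an additional probe-to-gene mapping step before this intersection, which the sketch does not cover.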

Batch Effect Assessment

- Generate PCA plots colored by batch and biological conditions

- Create UMAP/t-SNE visualizations with batch labels

- Calculate quantitative metrics (kBET, LISI, ARI) to establish baseline batch effect severity

Method Selection

- Choose methods based on data type (bulk vs. single-cell)

- Consider study design (balanced vs. imbalanced, known vs. unknown batches)

- For multi-cohort m6A lncRNA studies, consider starting with Harmony or Seurat for single-cell data, or ComBat-seq for bulk data

Application and Validation

- Apply selected correction method with appropriate parameters

- Re-generate visualizations and quantitative metrics to assess improvement

- Verify that biological signals are preserved while batch effects are reduced

- Iterate with different methods or parameters if correction is insufficient

Special Considerations for m6A lncRNA Studies

Multi-cohort m6A lncRNA research presents unique challenges for batch effect correction:

- Preservation of Modification Signals: Ensure correction methods don't remove subtle but biologically important modification patterns [3] [5]

- Multi-Omics Integration: When integrating m6A data with other omics layers, consider cross-platform batch effects [1] [5]

- Validation: Use positive controls (known m6A-modified lncRNAs) to verify biological signal preservation after correction [3] [4]

The Scientist's Toolkit: Essential Research Reagents and Materials

Successful multi-cohort m6A lncRNA research requires careful selection of reagents and materials to minimize batch effects from the outset:

Table 4: Essential Research Reagent Solutions for m6A lncRNA Studies

| Reagent/Material | Function | Batch Effect Considerations | Best Practices |
|---|---|---|---|
| RNA Extraction Kits | Isolation of high-quality RNA from samples | Different lots may vary in efficiency and purity | Use same lot for entire study; test multiple lots initially |
| Library Prep Kits | Preparation of sequencing libraries | Protocol variations affect coverage and bias | Standardize across cohorts; include controls |
| Antibodies (for meRIP-seq) | Immunoprecipitation of modified RNA | Lot-to-lot variation in specificity and efficiency | Validate each new lot; use same lot for comparable experiments |
| Enzymes (Reverse transcriptase, polymerases) | cDNA synthesis and amplification | Activity variations affect efficiency and bias | Use consistent sources and lots; include QC steps |
| Sequencing Platforms | High-throughput read generation | Platform-specific biases and error profiles | Balance biological groups across sequencing runs |
| Reference Standards | Quality control and normalization | Provide benchmark for technical variation | Include in every batch; use commercially available standards |
| Storage Buffers and Solutions | Sample preservation and processing | Composition affects RNA stability and integrity | Standardize recipes and sources; document any changes |


Batch effects represent a fundamental challenge in multi-cohort m6A lncRNA research, with the potential to compromise data integrity, biological discovery, and clinical translation. Through proactive experimental design, rigorous detection methods, appropriate correction strategies, and comprehensive validation, researchers can mitigate these technical variations while preserving biological signals of interest.

The integration of multiple cohorts in m6A lncRNA studies offers tremendous power for discovery and validation but requires diligent attention to technical variability. By implementing the troubleshooting guides, FAQs, and protocols outlined in this technical support center, researchers can enhance the reliability, reproducibility, and biological relevance of their findings in this rapidly advancing field.

As batch effect correction methodologies continue to evolve, maintaining a balanced approach that addresses technical artifacts while preserving genuine biological signals remains paramount. Through careful application of these principles, the research community can advance our understanding of m6A lncRNA biology while maintaining the highest standards of scientific rigor.

Frequently Asked Questions

What are batch effects and why are they a problem in multi-cohort m6A lncRNA studies? Batch effects are technical variations in data that are unrelated to the biological question you are studying. They arise from differences in experimental conditions, such as different sequencing runs, instruments, reagent lots, labs, or personnel [10] [2]. In m6A lncRNA research, which often relies on combining data from multiple cohorts (like TCGA and GEO), these effects can confound the real biological signals from RNA modifications, leading you to identify false prognostic signatures or incorrect links to tumor immunity [3] [1].

How can I tell if my dataset has significant batch effects? The most common and effective method is Principal Component Analysis (PCA). You create a PCA plot of your samples and color them by batch. If samples cluster more strongly by their batch (e.g., the lab they came from) than by their biological condition (e.g., tumor vs. normal), you have a clear sign of batch effects [10] [2].

My study design is confounded—the biological groups are completely separated by batch. Can I correct for this? This is a major challenge. In a fully confounded design, where a biological group is processed entirely in one batch, it is nearly impossible to statistically disentangle the biological signal from the batch effect [2] [1]. Correction methods may remove the biological signal of interest along with the batch effect. The best solution is a well-planned, balanced experimental design from the start.
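Whether a design is fully confounded can be checked directly. In an additive model with an intercept, batch indicators, and group indicators, batch and group effects are separable only if the design matrix has full column rank. The Python/NumPy sketch below (helper names hypothetical) performs that check. It does not detect partial confounding, which still inflates the uncertainty of the estimates.

```python
import numpy as np


# Illustrative helpers (hypothetical names), not a library API
def dummy_columns(labels):
    """Indicator columns for all but the first (reference) level."""
    levels = sorted(set(labels))
    return [np.array([x == lvl for x in labels], dtype=float) for lvl in levels[1:]]


def batch_and_group_separable(batches, groups):
    """True if an additive batch + group model is estimable (full column rank)."""
    n = len(batches)
    design = np.column_stack([np.ones(n)] + dummy_columns(batches) + dummy_columns(groups))
    return bool(np.linalg.matrix_rank(design) == design.shape[1])


batches = ["b1"] * 4 + ["b2"] * 4
confounded_ok = batch_and_group_separable(batches, ["tumor"] * 4 + ["normal"] * 4)
balanced_ok = batch_and_group_separable(batches, ["tumor", "normal"] * 4)
print(confounded_ok, balanced_ok)
```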

What are the consequences of not correcting for batch effects? The consequences are severe and include:

- Reduced statistical power and increased false negatives [1].

- Spurious findings, where batch-correlated features are mistakenly identified as biologically significant [1].

- Incorrect conclusions that can misdirect research, such as attributing differences to species or disease status when they are actually technical artifacts [1].

- Irreproducibility, which can lead to retracted papers and invalidated research findings, wasting resources and time [1].

Which batch effect correction method should I use for my RNA-seq count data? There are several established methods, and the choice can depend on your data and study design. The table below summarizes three common approaches.

| Method | Description | Best Use Case |
|---|---|---|
| ComBat-seq [10] | Uses an empirical Bayes framework to adjust count data directly. | Ideal for RNA-seq count data before differential expression analysis. |
| removeBatchEffect (limma) [10] [2] | Uses linear models to remove batch effects from normalized, log-transformed data. | Good for microarray or voom-transformed RNA-seq data; often used in visualization. |
| Including Batch as a Covariate [10] | Accounts for batch during statistical modeling (e.g., in DESeq2, edgeR). | A statistically sound approach for differential expression analysis, as it does not alter the raw data. |
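The covariate approach in the last row can be illustrated with ordinary least squares on log-scale data for a single gene. This Python/NumPy sketch is a simplification (DESeq2 and edgeR fit negative binomial GLMs to counts, not OLS), the helper names are hypothetical, and the toy values are constructed so that the true group effect is 1 and the batch effect is 2. Because the design is unbalanced, the naive group difference is misleading while the batch-adjusted estimate recovers the true effect.

```python
import numpy as np


# Illustrative helpers (hypothetical names), not DESeq2/edgeR APIs
def adjusted_group_effect(y, batches, groups, group_of_interest):
    """OLS coefficient for `group_of_interest` in y ~ intercept + batch + group."""
    y = np.asarray(y, dtype=float)
    b_levels = sorted(set(batches))
    cols = [np.ones(len(y))]
    cols += [np.array([b == lvl for b in batches], dtype=float) for lvl in b_levels[1:]]
    cols.append(np.array([g == group_of_interest for g in groups], dtype=float))
    coef, *_ = np.linalg.lstsq(np.column_stack(cols), y, rcond=None)
    return float(coef[-1])


def naive_group_effect(y, groups, group_of_interest):
    """Difference in means, ignoring batch."""
    y = np.asarray(y, dtype=float)
    mask = np.array([g == group_of_interest for g in groups])
    return float(y[mask].mean() - y[~mask].mean())


# Unbalanced toy design: batch A is mostly tumor, batch B mostly normal
batches = ["A"] * 4 + ["B"] * 4
groups = ["tumor", "tumor", "tumor", "normal", "tumor", "normal", "normal", "normal"]
y = [1.0 * (g == "tumor") + 2.0 * (b == "B") for b, g in zip(batches, groups)]

naive = naive_group_effect(y, groups, "tumor")
adjusted = adjusted_group_effect(y, batches, groups, "tumor")
print(f"naive = {naive:.2f}, batch-adjusted = {adjusted:.2f}")
```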

Troubleshooting Guides

Guide 1: Diagnosing Batch Effects in Your Multi-Cohort Dataset

Objective: To visually and statistically assess the presence and severity of batch effects in combined datasets (e.g., TCGA and GEO).

Protocol:

- Data Preparation: Combine your raw count or normalized expression matrices from different cohorts. Ensure sample metadata includes both the `batch` variable (e.g., dataset source) and the biological `condition` (e.g., disease state).

- Filter Low-Expressed Genes: Remove genes with low counts across samples to reduce noise. A common filter is to keep genes with counts > 0 in at least 80% of samples in at least one batch [10].

- Normalization: Apply a normalization method like TMM (Trimmed Mean of M-values) to account for differences in library composition and depth [10].

- Visualization with PCA:

  - Perform PCA on the normalized data.

  - Generate a PCA plot where points are colored by `batch`. Then, generate a separate plot where points are colored by biological `condition`.

- Interpretation: If the first or second principal component shows strong clustering by `batch` that overlaps or overshadows clustering by `condition`, batch effects are present and require correction [2].
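The low-expression filter described in Guide 1 can be expressed in a few lines. The Python/NumPy sketch below keeps a gene if its count is above zero in at least 80% of the samples of at least one batch. The threshold is the one quoted above; the function name and toy counts are hypothetical.

```python
import numpy as np


# Illustrative helper (hypothetical name), not a library API
def keep_expressed(counts, batches, min_fraction=0.8):
    """Boolean mask over genes: count > 0 in >= min_fraction of samples in at least one batch."""
    counts = np.asarray(counts)
    batches = np.asarray(batches)
    keep = np.zeros(counts.shape[0], dtype=bool)
    for b in np.unique(batches):
        frac_nonzero = (counts[:, batches == b] > 0).mean(axis=1)
        keep |= frac_nonzero >= min_fraction
    return keep


counts = np.array([
    [5, 3, 0, 0, 0, 0],   # detected in 2/3 of batch A only -> dropped
    [0, 0, 0, 4, 2, 7],   # detected in all of batch B -> kept
    [1, 2, 3, 4, 5, 6],   # detected everywhere -> kept
])
batches = ["A", "A", "A", "B", "B", "B"]
mask = keep_expressed(counts, batches)
filtered = counts[mask]
print(mask, filtered.shape)
```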

Guide 2: A Standardized Protocol for Batch Effect Correction in m6A lncRNA Validation

Objective: To apply a robust batch effect correction pipeline to enable valid integration of multi-cohort data for lncRNA signature validation.

Protocol: This workflow uses ComBat-seq, which is designed for RNA-seq count data, as an example.

- Input: Raw count matrices from all batches/cohorts and a metadata table specifying the batch and group for each sample.

- Environment Setup: Use R and load the required libraries (`sva` for ComBat-seq; `edgeR` or `DESeq2` for normalization).

- Merge and Filter Data: Combine count matrices and apply the low-expression filter from Guide 1.

- Correct with ComBat-seq: Call `ComBat_seq()` from `sva` on the merged count matrix, passing the `batch` and `group` columns of the metadata, and store the result as `corrected_counts`. The `group` parameter helps preserve biological variation within batches during correction [10].

- Validation: Repeat the PCA visualization from Guide 1 on `corrected_counts`. Successful correction will show reduced clustering by batch and improved clustering by biological condition.

A comparable sva-based batch correction was used to integrate multiple GEO datasets (GSE29013, GSE30219, etc.) with TCGA data in a study that developed a robust m6A/m5C/m1A-related lncRNA signature for lung adenocarcinoma [3].

Experimental Protocols from the Literature

The following protocol is adapted from a published study on m6A-related lncRNA signature development, which explicitly handled batch effects [3].

Study Aim: To develop and validate a prognostic signature of m6A/m5C/m1A-related lncRNAs (mRLncSig) in Lung Adenocarcinoma (LUAD) using multiple cohorts.

Key Experimental Workflow:

Detailed Methodological Steps:

Cohort Selection and Data Acquisition:

- Training Cohort: The TCGA-LUAD dataset was used.

- Validation Cohort: Created by amalgamating five publicly accessible GEO datasets: GSE29013, GSE30219, GSE31210, GSE37745, and GSE50081 [3].

Batch Effect Correction and lncRNA Identification:

- Goal: To create a unified, batch-effect-free expression matrix for analysis.

- Action: The study used the `sva` R package (which contains the `ComBat` function) to remove batch effects when integrating the different GEO datasets and when merging the list of m6A/m5C/m1A-related lncRNAs from different sources [3]. This step was crucial for ensuring that the prognostic signals were biological and not technical.

Prognostic Model Construction:

- LASSO Cox regression analysis was performed on the training cohort to build a signature (mRLncSig) from the batch-corrected lncRNA expression data [3].
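A signature from LASSO Cox regression is typically applied as a linear risk score: the sum of each lncRNA's coefficient times its expression. The Python/NumPy sketch below illustrates that calculation and a median-based high/low split. The coefficients and expression values are hypothetical, and the median cutoff is a common convention assumed here rather than a detail taken from the study above. Fixing the cutoff on the training cohort before applying it to the validation cohort avoids tuning the threshold on validation data.

```python
import numpy as np


# Illustrative helper (hypothetical name), not a library API
def risk_scores(expr, coefs):
    """Linear predictor: expr is samples-by-lncRNAs, coefs are the model coefficients."""
    return np.asarray(expr, dtype=float) @ np.asarray(coefs, dtype=float)


coefs = [0.5, -0.3]  # hypothetical coefficients for two signature lncRNAs

# Training cohort: cutoff is fixed here only
train_expr = np.array([[1, 0], [2, 1], [3, 1], [4, 2]])
train_scores = risk_scores(train_expr, coefs)
cutoff = float(np.median(train_scores))

# Validation cohort: apply the training cutoff unchanged
valid_expr = np.array([[1, 1], [5, 0]])
valid_groups = np.where(risk_scores(valid_expr, coefs) > cutoff, "high", "low")
print(cutoff, valid_groups)
```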

Validation:

- The model's performance was rigorously tested in the independent, amalgamated validation cohort using Kaplan-Meier analysis, ROC analysis, and Cox regression [3].

- The real-world expression of the signature lncRNAs was confirmed using quantitative real-time PCR (qRT-PCR) on human LUAD tissues, moving from in-silico findings to wet-lab validation [3].
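For the Kaplan-Meier part of the validation, the product-limit estimator itself is short. The pure-Python sketch below is illustrative only; in practice one would use an established survival package and add a log-rank test to compare risk groups. Here event = 1 marks an observed death and 0 a censored follow-up, and the toy survival times and the function name are made up.

```python
# Illustrative helper (hypothetical name), not a library API
def kaplan_meier(times, events):
    """Product-limit survival estimate.

    Returns (time, survival) pairs at each distinct time with at least one event.
    Censored observations at a tied time are treated as still at risk at that time.
    """
    data = sorted(zip(times, events))
    n_at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        deaths = removed = 0
        # Aggregate all observations sharing time t
        while i < len(data) and data[i][0] == t:
            deaths += data[i][1]
            removed += 1
            i += 1
        if deaths:
            survival *= 1.0 - deaths / n_at_risk
            curve.append((t, survival))
        n_at_risk -= removed
    return curve


# Toy follow-up (months) for one risk group; 1 = death observed, 0 = censored
curve = kaplan_meier([2, 3, 3, 5, 8], [1, 1, 0, 1, 0])
print(curve)
```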

The Scientist's Toolkit: Essential Research Reagents & Materials

| Item | Function in m6A lncRNA Research |
|---|---|
| TCGA & GEO Databases | Primary sources for acquiring large-scale, multi-cohort RNA-seq data and clinical information for discovery and validation [3]. |
| R/Bioconductor Packages | Open-source software for statistical analysis and batch effect correction. Key packages include sva (ComBat), limma, and edgeR [3] [10]. |
| TRIzol Reagent | Used for the extraction of high-quality total RNA, including lncRNAs, from tissue or cell samples for downstream qRT-PCR validation [4]. |
| qRT-PCR Kits | Essential for validating the expression levels of identified lncRNA signatures in independent clinical samples, confirming bioinformatics findings [3] [4]. |
| ComBat / ComBat-seq | An empirical Bayes method used to adjust for batch effects, with ComBat-seq specifically designed for RNA-seq count data [3] [10]. |


Welcome to the Technical Support Center for researchers investigating the epitranscriptome. This resource focuses on the specific challenges of studying N6-methyladenosine (m6A) modifications on long non-coding RNAs (lncRNAs), particularly when using multi-omics approaches and single-cell technologies. These studies are crucial for understanding cancer, neurological diseases, and cellular development, but present unique technical hurdles in validation and interpretation. The following guides and FAQs are framed within the broader context of handling batch effects in multi-cohort validation research, providing actionable solutions to ensure the robustness and reproducibility of your findings.

Technical Background: m6A-lncRNA Regulatory Axis

The m6A Modification System

m6A is the most prevalent internal mRNA modification in eukaryotic cells, governed by a dynamic system of writers, erasers, and readers [11]. This system also regulates lncRNAs, influencing their structure, stability, and function.

- Writers (Methyltransferases): The multicomponent methyltransferase complex, with METTL3 as the catalytic core and METTL14 as a structural scaffold, installs m6A marks. Regulatory proteins including WTAP, VIRMA, and RBM15/15B guide the complex to specific RNA regions [11] [12] [13].

- Erasers (Demethylases): FTO and ALKBH5 oxidatively remove m6A marks, making this modification reversible and dynamically responsive to cellular signals [11] [12] [13].

- Readers (Interpreters): Proteins like the YTHDF family (YTHDF1, YTHDF2, YTHDF3), YTHDC1, and IGF2BPs recognize m6A sites and dictate the functional outcomes, influencing RNA splicing, stability, translation, and decay [12] [13].

LncRNAs as Key Regulatory Targets and Regulators

LncRNAs are transcripts longer than 200 nucleotides with low or no protein-coding potential. When modified by m6A, their functional properties can be significantly altered. Furthermore, some lncRNAs can themselves regulate the m6A machinery, creating complex feedback loops [14].

The following diagram illustrates the core workflow and key challenges in m6A-lncRNA multi-omics studies:

Troubleshooting Guide: Addressing Core Technical Challenges

This section addresses the most frequent issues encountered in m6A-lncRNA research, with a special emphasis on mitigating batch effects for reliable multi-cohort validation.

FAQ 1: How Can I Minimize Batch Effects in a Multi-batch m6A-lncRNA Study?

The Problem: Batch effects are technical variations introduced due to differences in reagents, instruments, personnel, or processing time. They are notoriously common in omics data and can confound biological signals, leading to misleading conclusions and irreproducible results [1]. In longitudinal or multi-center studies, where samples are processed over extended periods, batch effects can be severe and incorrectly attributed to time-dependent biological changes [1].

Troubleshooting Steps:

Prevention Through Experimental Design:

- Randomization: Do not run all samples from one experimental group in a single batch. Randomize sample processing and acquisition across batches [15].

- Bridge Samples: Include a consistent control sample (e.g., a pooled aliquot from several samples or a commercial reference RNA) in every batch. This "bridge" allows for technical variation to be quantified and corrected during analysis [15].

- Reagent Banking: If possible, aliquot and use the same lot of critical reagents (e.g., antibodies, enzymes, buffers) for the entire study. Titrate all antibodies before the study begins [15].
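As an illustration of how bridge samples can be used during analysis, the Python/NumPy sketch below applies a simple ratio-style correction on the log scale: within each batch, the bridge sample's per-gene value is subtracted from every sample. This is one possible use of bridge samples, assumed for illustration rather than taken from the cited source, and the function name is hypothetical. Note that it adds the bridge's own measurement noise to every sample in that batch.

```python
import numpy as np


# Illustrative helper (hypothetical name), not a library API
def bridge_correct(log_expr, batches, is_bridge):
    """Subtract each batch's bridge-sample profile (per gene) from all samples in that batch.

    If a batch has several bridge replicates, their mean is used as the reference.
    """
    x = np.asarray(log_expr, dtype=float)
    batches = np.asarray(batches)
    is_bridge = np.asarray(is_bridge, dtype=bool)
    out = np.empty_like(x)
    for b in np.unique(batches):
        cols = batches == b
        ref_cols = cols & is_bridge
        if not ref_cols.any():
            raise ValueError(f"batch {b!r} has no bridge sample")
        out[:, cols] = x[:, cols] - x[:, ref_cols].mean(axis=1, keepdims=True)
    return out


# Two genes, two batches; batch B reads 1.5 log units higher across the board
log_expr = np.array([[5.0, 6.0, 7.0, 6.5, 7.5, 8.5],
                     [2.0, 3.0, 4.0, 3.5, 4.5, 5.5]])
batches = ["A", "A", "A", "B", "B", "B"]
is_bridge = [True, False, False, True, False, False]  # first sample of each batch is the bridge
corrected = bridge_correct(log_expr, batches, is_bridge)
print(corrected)
```

After this correction the bridge samples themselves become all-zero reference columns and would normally be dropped before downstream analysis.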

Detection and Diagnosis:

- Quality Control Metrics: Monitor RNA Integrity Number (RIN), library concentrations, and sequencing quality metrics per batch.

- Dimensionality Reduction: Use PCA, t-SNE, or UMAP plots colored by batch. If samples cluster strongly by batch rather than biological group, a significant batch effect is present [15].

- Control Charts: For bridge samples, plot the quantification of a stable m6A mark or lncRNA expression across batches using a Levey-Jennings chart to visualize drifts or shifts [15].
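The control-chart idea can also be sketched numerically. The pure-Python example below uses hypothetical bridge-sample values (for instance, the log expression of one stable lncRNA per batch). It sets the center line and SD from the first few batches and flags later batches beyond 2 SD (warning) or 3 SD (out of control). These limits follow the classic Levey-Jennings convention; multi-rule schemes such as the Westgard rules go further. The function name is hypothetical.

```python
import statistics


# Illustrative helper (hypothetical name), not a library API
def levey_jennings_flags(values, baseline_n):
    """Flag each batch's bridge-sample value against limits from the first baseline_n batches."""
    baseline = values[:baseline_n]
    center = statistics.mean(baseline)
    sd = statistics.stdev(baseline)  # needs baseline_n >= 2 and non-constant values
    flags = []
    for v in values:
        z = abs(v - center) / sd
        flags.append("out of control" if z > 3 else "warning" if z > 2 else "ok")
    return center, sd, flags


bridge_values = [10.0, 10.2, 9.8, 10.1, 9.9, 10.0, 10.35, 11.0]
center, sd, flags = levey_jennings_flags(bridge_values, baseline_n=6)
print(f"center = {center:.2f}, SD = {sd:.3f}")
for batch, (value, flag) in enumerate(zip(bridge_values, flags), start=1):
    print(f"batch {batch}: {value:.2f} {flag}")
```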

Correction in Data Analysis:

- Benchmarking: The optimal stage for batch-effect correction can vary. Evidence from proteomics suggests that correction at the aggregated feature level (e.g., protein-level instead of peptide-level) can be more robust [16]. Evaluate this for your data.

- Algorithm Selection: Use established batch-effect correction algorithms (BECAs) such as ComBat, Harmony, or RUV-III-C [1] [16]. Test multiple methods to find the one that best removes technical variation without erasing biological signal.

- Cautious Application: Be wary of over-correction, especially when batch is confounded with biology. Always validate findings with an orthogonal method.

FAQ 2: Which Detection Method Should I Use for Transcriptome-wide m6A Mapping on lncRNAs?

The Problem: Choosing the right method to locate m6A marks is critical, as each has trade-offs in resolution, input requirements, and specificity. This is particularly challenging for lncRNAs, which may be expressed at low levels.

Troubleshooting Steps:

Define Your Need:

- Do you need absolute quantification of global m6A levels or location-specific information?

- What is your sample input availability?

- What resolution is required for your biological question?

Select the Appropriate Technology: The table below compares the primary methods.

Table 1: Comparison of Primary m6A Detection Methods

| Method | Principle | Resolution | Key Advantages | Key Limitations | Best for m6A-lncRNA Studies |
|---|---|---|---|---|---|
| MeRIP-seq/m6A-seq | Antibody-based enrichment of m6A-modified RNA fragments followed by sequencing [12]. | ~100-200 nt | Well-established; requires standard NGS equipment; can use low input with specialized kits [17]. | Low resolution; antibody specificity issues [18]. | Initial, cost-effective mapping of m6A-lncRNA interactions. |
| miCLIP | Crosslinking immunoprecipitation with an m6A antibody, causing mutations at methylation sites during cDNA synthesis [12]. | Single-nucleotide | High, single-nucleotide resolution [12]. | Technically demanding; lower throughput. | Pinpointing exact m6A sites on specific lncRNAs. |
| ELISA | Colorimetric immunoassay using antibodies against m6A [18]. | Global (no location data) | Simple, rapid, high-throughput; low detection limit (pg range) [18]. | No transcript-specific information; potential for cross-reactivity [18] [17]. | Quickly quantifying global changes in m6A levels before costly NGS. |
| EpiPlex | Uses engineered, non-antibody binders for m6A enrichment and sequencing [17]. | Transcript-level | High specificity and sensitivity; lower input and sequencing depth requirements; provides gene expression data from same sample [17]. | Does not provide absolute quantification of modification stoichiometry [17]. | Sensitive profiling from precious clinical samples; studies requiring paired modification and expression data. |

FAQ 3: How Do I Handle the Low Abundance of lncRNAs and m6A in Single-Cell Experiments?

The Problem: Single-cell sequencing technologies have transformed the study of cellular heterogeneity, but they face fundamental issues such as high technical noise, low RNA input, and high dropout rates. These issues are compounded when studying lowly expressed lncRNAs and sparse m6A modifications [14] [1].

Troubleshooting Steps:

- Maximize Sample Quality: Start with high-quality cells or nuclei. Use RNase-free reagents and techniques to preserve RNA integrity [18].

- Optimize Library Preparation: Use specialized single-cell kits designed to maximize the capture of non-polyadenylated RNAs if your lncRNAs of interest are not polyadenylated.

- Leverage Multi-omics Deconvolution: Since single-cell m6A sequencing is still emerging, a common strategy is to use multi-omics deconvolution. This involves using single-cell RNA-seq (scRNA-seq) data as a guide to deconvolute bulk m6A-seq data, inferring m6A patterns in different cell types [14].

- Employ Advanced Computational Tools: Use algorithms designed for single-cell data to impute dropouts and address sparsity. Tools like Hermes and LongHorn, which use mutual information and integrative systems biology, can help infer lncRNA and m6A regulatory networks from sparse data [14].
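Reference-based deconvolution typically looks for non-negative cell-type weights. When those weights are applied to scRNA-seq-derived signature profiles, their weighted sum should best reproduce a bulk profile. The sketch below solves this non-negative least-squares problem by projected gradient descent in pure Python. It is a generic illustration of the idea, not the specific method used in [14]. The function name and the toy signature values in the example are hypothetical.

```python
def deconvolve(bulk, signatures, n_iter=2000):
    """Non-negative least-squares estimate of cell-type weights for one bulk sample.

    bulk: feature values of the bulk sample (length F).
    signatures: K cell-type profiles (each length F), e.g. per-cell-type means
                from scRNA-seq.
    Minimizes ||S w - bulk||^2 subject to w >= 0 by projected gradient
    descent, then rescales w to sum to 1 (if any weight is positive).
    """
    k, f = len(signatures), len(bulk)
    # Normal-equation pieces: G = S^T S (K x K) and c = S^T bulk (length K)
    gram = [[sum(signatures[a][i] * signatures[b][i] for i in range(f))
             for b in range(k)] for a in range(k)]
    c = [sum(signatures[a][i] * bulk[i] for i in range(f)) for a in range(k)]
    # Step 1/trace(G) is safe because trace(G) >= the largest eigenvalue of G
    step = 1.0 / sum(gram[a][a] for a in range(k))
    w = [1.0 / k] * k
    for _ in range(n_iter):
        grad = [sum(gram[a][b] * w[b] for b in range(k)) - c[a] for a in range(k)]
        # gradient step, then project back onto the non-negative orthant
        w = [max(0.0, w[a] - step * grad[a]) for a in range(k)]
    total = sum(w)
    return [x / total for x in w] if total > 0 else w
```

For example, a bulk profile built as 0.3 × profile A + 0.7 × profile B returns weights close to [0.3, 0.7]. Cell-type-specific m6A patterns can then be inferred by relating these weights to bulk m6A signals across samples. That second step is outside this sketch.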

Research Reagent Solutions

This table lists essential materials and tools for conducting robust m6A-lncRNA research, with a focus on minimizing technical variability.

Table 2: Essential Research Reagents and Tools for m6A-lncRNA Studies

| Reagent / Tool Category | Specific Examples | Function & Importance | Considerations for Batch Effect Mitigation |
|---|---|---|---|
| m6A Detection Kits | EpiQuik m6A RNA Methylation Quantification Kit (Colorimetric) [18]; EpiPlex m6A RNA Methylation Kit (Sequencing-based) [17] | Provides optimized, all-in-one reagents for consistent global quantification or location-specific mapping. | Using a single kit lot for a study reduces inter-batch variation from different reagent formulations. |
| High-Specificity Antibodies/Binders | Validated antibodies for METTL3, FTO, YTHDF1, etc. [12]; Non-antibody engineered binders [17] | Critical for immunoprecipitation, ELISA, and Western Blot validation. High specificity minimizes off-target signals. | Antibody lot-to-lot variation is a major source of batch effects. Bank a validated lot from a single manufacturer for the entire study [15]. |
| Reference Materials | Quartet protein reference materials [16]; Universal Human Reference RNA; Custom synthetic m6A-RNA spike-ins [17] | Serves as a "bridge" or "anchor" sample to normalize across batches and monitor technical performance. | The inclusion of reference materials in every batch is one of the most effective strategies for enabling batch-effect correction [16] [15]. |
| RNA Stabilization & Extraction | RNase inhibitors; DNase treatment kits; Liquid nitrogen/commercial stabilizers [18] | Protects labile RNA and m6A marks from degradation; removes contaminating DNA that can interfere with assays [18]. | Standardize the stabilization and extraction protocol across all samples and personnel to minimize introduction of pre-analytical variation. |
| Batch-Effect Correction Algorithms (BECAs) | ComBat, Harmony, RUV-III-C [1] [16] | Computational tools applied post-data-generation to statistically remove unwanted technical variation from the dataset. | No single algorithm is best for all data. Benchmark several BECAs on your dataset to select the most effective one [1]. |

Advanced Multi-omics Integration Workflow

For complex m6A-lncRNA studies, a systematic workflow that integrates data from multiple omics layers is essential. The following diagram outlines a robust pipeline that incorporates batch-effect mitigation at key stages.

Success in m6A-lncRNA research hinges on a meticulous approach that prioritizes reproducibility from the initial experimental design through to final data analysis. By proactively implementing batch-effect mitigation strategies—such as using bridge samples, banking reagents, and applying robust computational corrections—you can significantly enhance the reliability and translational potential of your findings in multi-cohort validation studies. This Technical Support Center provides a foundation for troubleshooting common issues; for further assistance, consult the referenced literature and manufacturer protocols for your specific reagents and platforms.

Frequently Asked Questions & Troubleshooting Guides

This technical support center addresses common challenges in multi-cohort m6A lncRNA validation studies. Below are targeted solutions for issues ranging from batch effects to specific experimental protocols.

What are the most effective strategies to correct for batch effects in multi-cohort m6A-lncRNA studies?

Batch effects are technical variations introduced when samples are processed in different labs, at different times, or on different platforms. They are a major challenge in multi-cohort studies as they can skew results and introduce false positives or negatives [19].

Effective Correction Strategies:

- Ratio-Based Scaling: This method scales absolute feature values of study samples relative to those of concurrently profiled reference materials. It is particularly effective when batch effects are completely confounded with biological factors of interest [19].

- Protein-Level Correction: For proteomics data integrated with m6A studies, batch-effect correction at the protein level (after quantification) has proven more robust than correction at the precursor or peptide level. Effective algorithms at this level include ComBat and ratio-based methods when combined with MaxLFQ quantification [16].

- Reference Materials: The use of publicly available multiomics reference materials (e.g., from the Quartet Project) is highly recommended. These materials, when profiled concurrently with study samples in each batch, enable more reliable data integration [19] [16].

Troubleshooting Tip: If your biological groups are completely confounded with batch groups (e.g., all controls in one batch and all cases in another), most standard correction algorithms may fail. In this scenario, the ratio-based method using a reference material is the most reliable choice [19].

How do I choose between microarrays and RNA-Seq for profiling lncRNAs in my validation study?

The choice between these two platforms depends on your research goals, budget, and the characteristics of lncRNAs.

Comparison of Platforms:

Table: Microarrays vs. RNA-Seq for LncRNA Profiling

| Feature | LncRNA Microarray | RNA-Seq |
|---|---|---|
| Detection Sensitivity | High; can detect 7,000-12,000 lncRNAs [20] | Lower for low-abundance lncRNAs; may detect only 1,000-4,000 lncRNAs [20] |
| Required Data Depth | N/A | >120 million raw reads per sample for acceptable coverage [20] |
| Cost | Lower [20] | Higher due to deep sequencing requirements [20] |
| Discovery Power | Limited to pre-designed probes | Can identify novel lncRNAs [21] |
| Technical Simplicity | More straightforward analysis | Complex pipeline; results can vary with tools used [21] |

Recommendations:

- Choose Microarrays for large-scale, targeted validation of known lncRNAs due to their lower cost, higher sensitivity for low-abundance transcripts, and simpler data analysis [20].

- Choose RNA-Seq if your goal is to discover novel lncRNAs or splicing variants not covered by existing microarray probes [21].

What specific biases should I anticipate from the reverse transcription (RT) step in RNA-seq?

The reverse transcription reaction, which converts RNA to cDNA, is a significant source of both intra- and inter-sample biases that can affect quantification accuracy [22].

Common RT Biases and Solutions:

Table: Reverse Transcription Biases and Mitigation Strategies

| Bias Type | Description | Recommended Solution |
|---|---|---|
| RNA Secondary Structure | RNA folding can prevent primers and RTases from accessing the template. | Use thermostable reverse transcriptases (e.g., SuperScript IV) that operate at higher temperatures to disrupt secondary structures [22]. |
| RNase H Activity | The RNase H domain in some RTases can degrade the RNA template prematurely, introducing a negative bias against long transcripts. | Use RTase enzymes with diminished or absent RNase H activity [22]. |
| Primer-Related Bias | Oligo(dT) primers can miss non-polyadenylated lncRNAs, while random primers have varying binding efficiencies. | For comprehensive coverage, a combination of methods may be needed. Consider TGIRT (Thermostable Group II Intron Reverse Transcriptase) protocols for structure-independent priming [22]. |
| Intersample Bias | Inconsistencies in RNA quantity, integrity, or purity between samples. | Standardize RNA quality and quantity across all samples and follow MIQE guidelines for reporting [22]. |

My m6A profiling results are inconsistent. How can I improve reliability?

Inconsistencies in m6A profiling can stem from the choice of detection method, antibody specificity, or RNA sample quality.

Strategies for Robust m6A Detection:

- Method Selection: Understand the strengths of different techniques.

- m6A-MeRIP (Antibody-based): Offers comprehensive coverage of m6A modifications across whole transcripts, including sites without the "RRACH" consensus motif. It is sensitive for low-level RNAs but may have cross-reactivity with m6Am [20].

- m6A Single Nucleotide Array (Enzyme-based): Uses the MazF endoribonuclease, which cleaves unmethylated "ACA" sequences but not m6A-containing ones, to read out methylation at ACA sites within the "RRACH" motif. It provides single-base resolution and tolerates poor-quality RNA (e.g., from FFPE samples), but it profiles only the subset of m6A sites that occur in an ACA context [20].

- Rigorous Controls: Always include appropriate controls. For MeRIP, use a synthetic positive control RNA spiked into your total RNA sample. Analyze the immunoprecipitated (IP), supernatant, and a mock (IgG) IP fraction by qPCR to confirm enrichment efficiency and specificity [20].
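The qPCR readout for these MeRIP controls is commonly summarized two ways: as percent input, and as fold enrichment of the m6A IP over the mock IgG IP. The sketch below uses the standard ΔCt arithmetic. It assumes roughly 100% primer efficiency (one doubling per cycle). It also assumes the input fraction was saved as a known proportion of the RNA used for IP, with 10% as the default. The function names are illustrative.

```python
import math

def percent_input(ct_ip, ct_input, input_fraction=0.10):
    """Percent of input RNA recovered in an IP fraction (assumes 100% PCR efficiency).

    The input Ct is first adjusted to represent 100% of the starting RNA:
    ct_adj = ct_input - log2(1 / input_fraction).
    """
    ct_input_adj = ct_input - math.log2(1.0 / input_fraction)
    return 100.0 * 2.0 ** (ct_input_adj - ct_ip)

def fold_enrichment(ct_ip, ct_igg):
    """Enrichment of the m6A IP over the mock (IgG) IP: 2^-(Ct_IP - Ct_IgG)."""
    return 2.0 ** (ct_igg - ct_ip)
```

For example, an IP Ct of 24 against an IgG Ct of 28 corresponds to a 16-fold enrichment. A specific IP should show clearly higher values for the spiked-in positive control than for the mock IgG fraction.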

How can I validate the cellular localization of my candidate lncRNA?

LncRNAs often function in specific subcellular compartments (e.g., the nucleus or cytoplasm), so localization is key to understanding their mechanism. Because immunohistochemistry detects proteins, it cannot be used to validate lncRNAs, and RNA-based detection is required instead.

Recommended Validation Technique: RNA In Situ Hybridization (ISH)

- Technology: The RNAscope assay is highly recommended for validating lncRNA expression and localization. It provides single-molecule sensitivity, which is crucial for detecting typically low-abundance lncRNAs, and can be used on formalin-fixed, paraffin-embedded (FFPE) tissue sections [23].

- Workflow: This method uses a proprietary probe design that allows for signal amplification while suppressing background noise, enabling precise localization of lncRNAs to specific cell types and subcellular structures within complex tissues [23].

The Scientist's Toolkit: Essential Research Reagents

Table: Key Reagents for m6A and LncRNA Research

| Reagent / Tool | Function / Application | Key Considerations |
|---|---|---|
| Reference Materials (Quartet Project) | Multi-omics quality control materials for batch-effect monitoring and correction [19] [16]. | Enables ratio-based scaling in confounded study designs. |
| Thermostable RTases (e.g., SuperScript IV) | Reverse transcription of RNA with high secondary structure [22]. | Reduces intrasample bias by working at higher temperatures. |
| RNAscope Probes | Highly sensitive RNA in situ hybridization for lncRNA localization in FFPE tissues [23]. | Essential for validating spatial expression of non-coding RNAs. |
| m6A-Specific Antibodies | Immunoprecipitation of m6A-modified RNAs (MeRIP) [20]. | Verify specificity and use spike-in controls for quantification. |
| MazF Endonuclease | Enzyme-based detection of specific m6A sites for single-nucleotide resolution arrays [20]. | Only recognizes a subset of m6A sites with "ACA" sequence. |


Visualizing Experimental Workflows

The following diagrams outline core experimental and data analysis pipelines to help you plan and troubleshoot your projects.

Diagram 1: m6A LncRNA Signature Discovery & Validation

Diagram 2: Batch Effect Correction Decision Guide

Diagram 3: m6A Detection Method Selection

Practical Strategies and Algorithms for Batch Effect Correction in Multi-Cohort Datasets

Batch effects are systematic technical variations in data that arise from processing samples in different batches, at different times, with different reagents, or by different personnel. These non-biological variations can confound your analysis, leading to misleading biological conclusions and irreproducible results [7] [19]. In the context of multi-cohort m6A lncRNA validation research, where you're integrating data from multiple studies or laboratories, batch effects can be particularly problematic as they may obscure true biological signals related to epitranscriptomic modifications [24] [25].

Sources of Batch Effects:

- Experimental biases (unequal amplification during PCR, cell lysis, reverse transcriptase efficiency)

- Different handling personnel or equipment

- Varying reagent lots or protocols

- Sequencing across different flow cells or platforms

- Library preparation methods (e.g., polyA enrichment vs. ribo-depletion) [7] [26]

Fundamentals of Batch Effect Correction

Batch effect correction aims to remove technical variation while preserving biological variation. The observed data can be statistically decomposed into biological signal, batch-specific variation, and random noise [27]. Effective correction is essential for reliable clustering, classification, differential expression analysis, and multi-site data integration [27].
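One common way to formalize this decomposition is the location/scale model that ComBat assumes for each gene $g$, sample $j$, and batch $i$. The symbols are introduced here for illustration only:

$$
Y_{ijg} = \alpha_g + X_{ij}^{\top}\beta_g + \gamma_{ig} + \delta_{ig}\,\varepsilon_{ijg}, \qquad \varepsilon_{ijg} \sim \mathcal{N}(0, \sigma_g^2)
$$

The terms are as follows:

- $\alpha_g$ is the gene's overall level.
- $X_{ij}^{\top}\beta_g$ is the biological signal from covariates such as tumor versus normal.
- $\gamma_{ig}$ and $\delta_{ig}$ are the additive and multiplicative batch effects.
- $\varepsilon_{ijg}$ is random noise.

Correction estimates $\gamma_{ig}$ and $\delta_{ig}$ and removes them while keeping $X_{ij}^{\top}\beta_g$. If every batch contains only one biological group, the batch indicators and $X_{ij}$ are collinear. In that case $\gamma_{ig}$ cannot be separated from $\beta_g$, which is why the confounded scenario is so difficult.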

A critical consideration is your experimental design scenario, which falls into one of two categories:

- Balanced Scenario: Samples across biological groups are evenly distributed across batches

- Confounded Scenario: Biological factors and batch factors are completely mixed (common in longitudinal and multi-center studies) [19]

Most BECAs struggle with confounded scenarios, where distinguishing true biological differences from batch effects becomes challenging [19].
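Before choosing an algorithm, it helps to tabulate biological groups against batches. The hypothetical helper below gives a coarse classification with three outcomes:

- balanced: every group appears in every batch.
- completely confounded: each batch holds a single group.
- partially confounded: anything in between.

The helper checks only which groups are present in each batch, not whether their proportions are even.

```python
from collections import defaultdict

def design_type(batches, groups):
    """Coarsely classify how biological groups are distributed over batches.

    batches, groups: parallel lists with each sample's batch and group label.
    Only the presence of groups in each batch is checked, not their proportions.
    """
    groups_in_batch = defaultdict(set)
    for b, g in zip(batches, groups):
        groups_in_batch[b].add(g)
    all_groups = set(groups)
    if len(all_groups) > 1 and all(len(gs) == 1 for gs in groups_in_batch.values()):
        return "completely confounded"   # each batch holds a single group
    if all(gs == all_groups for gs in groups_in_batch.values()):
        return "balanced"                # every group appears in every batch
    return "partially confounded"
```

A "completely confounded" result is the situation in which reference-material-based ratio scaling is recommended over standard BECAs.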

Comprehensive Comparison of BECAs

Table 1: Overview of Major Batch Effect Correction Algorithms

| Method | Core Algorithm | Data Type | Key Features | Limitations |
|---|---|---|---|---|
| ComBat/ComBat-Seq | Empirical Bayes | Microarray (ComBat), RNA-Seq (ComBat-Seq) | Adjusts for mean and variance differences; handles small sample sizes | Assumes gene-wise batch effects share a common prior distribution across genes [28] [26] |
| Harmony | Principal Component Analysis with iterative clustering | Single-cell RNA-seq, Multi-omics | Integrates data while accounting for batch and biological conditions; works well in balanced scenarios | Performance decreases in confounded scenarios [7] [19] |
| MNN (Mutual Nearest Neighbors) | Nearest neighbor matching | Single-cell RNA-seq | Corrects for cell-type specific batch effects; doesn't require all cell types in all batches | Pairwise approach; computationally intensive for many batches [7] [29] |
| DESC | Deep embedding with clustering | Single-cell RNA-seq | Iteratively removes batch effects while clustering; agnostic to batch information | Requires biological variation > technical variation [30] |
| CarDEC | Deep learning with feature blocking | Single-cell RNA-seq | Corrects in both embedding and gene expression space; treats HVGs and LVGs separately | Complex architecture; computationally demanding [29] |
| scVI | Variational autoencoder | Single-cell RNA-seq | Probabilistic modeling of biological and technical noise; joint analysis of all batches | Strong reliance on correct batch definition [29] [30] |
| Ratio-Based Methods | Scaling relative to reference materials | Multi-omics | Effective in confounded scenarios; uses reference materials for scaling | Requires reference materials in each batch [19] |
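ComBat's core adjustment aligns each gene's per-batch mean and variance. The sketch below performs that location/scale alignment for a single feature. Unlike ComBat, it applies no empirical Bayes shrinkage and does not protect biological covariates, so treat it as a didactic stand-in only. In practice, use an established implementation such as `ComBat` in the R package sva. Because the sketch ignores biology, applying it to a confounded design would remove group differences along with batch differences.

```python
import math
from statistics import mean, pstdev

def location_scale_adjust(values, batches):
    """Align one feature's per-batch mean and SD to pooled values.

    values: measurements of a single feature (e.g., log2 expression).
    batches: batch label for each measurement.
    Didactic only: no empirical Bayes shrinkage, no biological covariates.
    """
    labels = set(batches)
    per_batch = {b: [v for v, bb in zip(values, batches) if bb == b] for b in labels}
    b_mean = {b: mean(vs) for b, vs in per_batch.items()}
    b_sd = {b: pstdev(vs) for b, vs in per_batch.items()}
    grand_mean = mean(values)
    # pooled within-batch SD: spread around each sample's own batch mean
    pooled_sd = math.sqrt(mean([(v - b_mean[b]) ** 2 for v, b in zip(values, batches)]))
    adjusted = []
    for v, b in zip(values, batches):
        z = (v - b_mean[b]) / b_sd[b] if b_sd[b] > 0 else 0.0  # standardize within batch
        adjusted.append(grand_mean + z * pooled_sd)              # map onto pooled scale
    return adjusted
```

For example, `[1, 2, 3]` in batch a and `[11, 12, 13]` in batch b both map to `[6, 7, 8]`. The 10-unit offset is removed entirely, which is exactly what would erase a real group difference if batch a were all controls and batch b all cases.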

Table 2: Performance Comparison of BECAs on Benchmark Datasets

| Method | Pancreatic Islet Data (ARI) | Macaque Retina Data (ARI) | Computation Speed | Batch Information Required |
|---|---|---|---|---|
| DESC | 0.945 | 0.919-0.970 | Medium | No |
| Seurat 3.0 | 0.896 | Variable with batch definition | Fast | Yes |
| scVI | 0.696 | 0.242 (without batch info) | Medium | Yes |
| MNN | 0.629 | Variable with batch definition | Slow for many batches | Yes |
| Scanorama | 0.537 | Variable with batch definition | Medium | Yes |
| BERMUDA | 0.484 | Variable with batch definition | Medium | Yes |
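The adjusted Rand index (ARI) reported in Table 2 compares a clustering with reference labels and corrects the raw count of agreeing sample pairs for chance. A value of 1 means identical partitions, values near 0 mean chance-level agreement, and negative values are possible. The sketch below is a pure-Python version of the standard contingency-table formula.

```python
from collections import Counter
from math import comb

def adjusted_rand_index(labels_true, labels_pred):
    """Adjusted Rand index between two labelings of the same samples."""
    n = len(labels_true)
    pair_counts = Counter(zip(labels_true, labels_pred))      # contingency table
    sum_ij = sum(comb(c, 2) for c in pair_counts.values())    # pairs together in both
    sum_a = sum(comb(c, 2) for c in Counter(labels_true).values())
    sum_b = sum(comb(c, 2) for c in Counter(labels_pred).values())
    expected = sum_a * sum_b / comb(n, 2)                     # chance-level agreement
    max_index = (sum_a + sum_b) / 2
    if max_index == expected:          # degenerate case, e.g. a single cluster each
        return 1.0
    return (sum_ij - expected) / (max_index - expected)
```

The index ignores label names, so `[0, 0, 1, 1]` compared with `[1, 1, 0, 0]` scores 1.0. Splitting one true cluster, as in `[0, 0, 1, 1]` versus `[0, 0, 1, 2]`, gives 4/7 ≈ 0.571.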

Troubleshooting Common Batch Effect Issues

FAQ 1: How do I diagnose batch effects in my m6A lncRNA data?

Issue: Suspected batch effects in multi-cohort lncRNA validation study.

Solution:

- Perform Principal Component Analysis (PCA) before correction, coloring samples by batch and biological condition

- Calculate batch-wise centroids and coefficient of variation (CV) within cell types [29]

- Use metrics like Silhouette Coefficient, kBET, or LISI to quantify batch mixing [27]

- Check if samples cluster more strongly by batch than by biological condition

Experimental Protocol:
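One quantitative check is the mean silhouette coefficient computed with batch labels instead of biological labels. Values near 1 mean samples sit much closer to their own batch than to other batches, which indicates a strong batch effect. Values near 0 or below mean the batches are well mixed. The sketch uses Euclidean distance on whatever coordinates you pass in, such as the top principal components. That choice of space is an assumption, and at least two batches are required.

```python
import math

def batch_silhouette(points, batches):
    """Mean silhouette coefficient of samples grouped by batch label.

    points: list of equal-length coordinate lists (e.g., top PCs per sample).
    batches: batch label per sample; at least two batches are required.
    """
    labels = set(batches)
    scores = []
    for i, p in enumerate(points):
        # distances from sample i to every other sample, grouped by batch
        by_batch = {lab: [] for lab in labels}
        for j, q in enumerate(points):
            if i != j:
                by_batch[batches[j]].append(math.dist(p, q))
        own = by_batch[batches[i]]
        if not own:                        # singleton batch: silhouette set to 0
            scores.append(0.0)
            continue
        a_i = sum(own) / len(own)          # cohesion: mean distance within own batch
        b_i = min(sum(d) / len(d) for lab, d in by_batch.items()
                  if lab != batches[i] and d)   # separation: nearest other batch
        scores.append((b_i - a_i) / max(a_i, b_i) if max(a_i, b_i) > 0 else 0.0)
    return sum(scores) / len(scores)
```

Compute the score before and after correction. It should drop substantially when correction succeeds, whereas the same score computed with biological labels should stay high.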

FAQ 2: Which correction method should I choose for confounded batch-group scenarios?

Issue: Biological groups are completely confounded with batches in m6A lncRNA validation study.

Solution:

- Use ratio-based methods if reference materials are available [19]

- Consider DESC or CarDEC for their ability to handle confounded scenarios without over-correction [30] [29]

- Avoid methods that rely heavily on explicit batch labeling when batches perfectly align with biological conditions

Experimental Protocol for Ratio-Based Correction:

- Include reference materials (e.g., Quartet multiomics reference materials) in each batch [19]

- Transform expression profiles to ratio-based values using reference sample expression as denominator

- Apply downstream analysis to ratio-scaled data

- Validate with known biological controls specific to m6A modification

FAQ 3: How can I prevent overcorrection when biological differences are subtle?

Issue: Concern about removing true biological signal while correcting batch effects, particularly for subtle m6A-related expression changes.

Solution:

- Use negative control genes (inert to biological variable) to estimate batch effects [27]

- Apply methods with soft clustering or probabilistic approaches (e.g., DESC, scVI) [30] [31]

- Validate with known m6A-modified lncRNAs that should show consistent patterns across batches

- Compare results before and after correction for key biological markers

Experimental Protocol:
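The negative-control idea can be illustrated with a deliberately simple estimator. Control genes are assumed to be unaffected by the biological variable, so their per-batch shift estimates the batch effect even when batch and group are confounded. That shift is then subtracted from every feature. This is a one-factor, additive simplification in the spirit of RUV-type methods, not an implementation of RUVg or RUV-III. The data layout and function name are hypothetical.

```python
from statistics import mean

def control_gene_adjust(expr, batches, control_genes):
    """Remove additive per-batch offsets estimated from negative-control genes.

    expr: dict mapping gene -> list of log-scale values, one per sample.
    batches: batch label for each sample (same order as the value lists).
    control_genes: genes assumed inert to the biological variable of interest.
    """
    n = len(batches)
    # per-sample average level of the control genes
    ctrl = [mean(expr[g][j] for g in control_genes) for j in range(n)]
    overall = mean(ctrl)
    # batch offset = how far that batch's control level sits from the overall level
    offset = {b: mean(c for c, bb in zip(ctrl, batches) if bb == b) - overall
              for b in set(batches)}
    # subtract each sample's batch offset from every gene
    return {g: [v - offset[batches[j]] for j, v in enumerate(vals)]
            for g, vals in expr.items()}
```

Suppose a target lncRNA differs by 1 log unit between groups and batch 2 adds a further 2 units. After adjustment, the 1-unit biological difference remains and the control genes become flat across batches. If batch effects vary strongly from gene to gene, a single offset per batch will under-correct.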

FAQ 4: How do I handle batch effects when integrating single-cell RNA-seq data for lncRNA analysis?

Issue: Integrating scRNA-seq data from multiple batches while preserving lncRNA expression patterns.

Solution:

- Use deep learning methods (DESC, CarDEC, scVI) that jointly optimize clustering and batch correction [30] [29] [31]

- Consider the branching architecture in CarDEC that separately handles highly variable genes (HVGs) and lowly variable genes (LVGs) [29]

- Validate integration quality by checking mixing of batches within cell types and preservation of known cell-type markers

Experimental Protocol for DESC:

Advanced Deep Learning Approaches

Recent advances in batch effect correction leverage deep learning frameworks for more powerful integration:

Multi-Level Loss Function Designs

Deep learning methods employ various loss functions at different levels:

- Level 1: Batch effect removal using batch labels (GAN, HSIC, Orthogonal Projection Loss) [31]

- Level 2: Biological conservation using cell-type labels (cell-type supervised contrastive learning, Invariant Risk Minimization) [31]

- Level 3: Integrated approaches combining both batch and biological information [31]

Specialized Architectures

CarDEC's Branching Architecture: Treats highly variable genes (HVGs) and lowly variable genes (LVGs) as distinct feature blocks, using HVGs to drive clustering while allowing LVG reconstructions to benefit from batch-corrected embeddings [29].

DESC's Iterative Learning: Gradually removes batch effects through self-learning by optimizing a clustering objective function, using "easy-to-cluster" cells to guide the network to learn cluster-specific features while ignoring batch effects [30].

Implementation Considerations for m6A lncRNA Research

Reference Material-Based Approaches

For multi-cohort m6A lncRNA studies, consider implementing a reference material-based ratio method:

Workflow for Reference Material-Based Batch Correction

Research Reagent Solutions

Table 3: Essential Research Reagents for Batch Effect Management

| Reagent/Material | Function in Batch Effect Correction | Application in m6A lncRNA Research |
|---|---|---|
| Quartet Reference Materials | Multi-omics reference for ratio-based correction | Cross-platform normalization for m6A quantification [19] |
| Control Cell Lines | Technical replicates across batches | Monitoring batch effects in lncRNA expression |
| Spike-in RNAs | Normalization controls | Distinguishing technical from biological variation |
| Stable m6A-modified Controls | m6A-specific technical controls | Ensuring m6A-specific signals are preserved |
| Multiplexing Oligos | Sample multiplexing in single batches | Reducing batch effects through experimental design |

Validation and Quality Control

After applying batch effect correction, rigorous validation is essential:

Quantitative Metrics:
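One widely used integration metric is the local inverse Simpson's index (LISI): for each sample, the effective number of batches among its neighbors. The sketch below is a simplified, unweighted k-nearest-neighbor version. The published LISI uses perplexity-based Gaussian weights instead, and `k` here is an assumed parameter. After successful integration, the score should approach the number of batches. Values near 1 indicate neighborhoods dominated by a single batch.

```python
import math
from collections import Counter

def simple_ilisi(points, batches, k=5):
    """Mean unweighted inverse Simpson's index of batch labels over k-NN sets.

    points: coordinates per sample (e.g., an integrated embedding).
    batches: batch label per sample.
    """
    scores = []
    for i, p in enumerate(points):
        # k nearest other samples (ties broken by index)
        dists = sorted((math.dist(p, q), j) for j, q in enumerate(points) if j != i)
        neighbors = [batches[j] for _, j in dists[:k]]
        counts = Counter(neighbors)
        simpson = sum((c / len(neighbors)) ** 2 for c in counts.values())
        scores.append(1.0 / simpson)     # effective number of batches nearby
    return sum(scores) / len(scores)
```

Pair this batch-mixing score with a biology-preservation score such as ARI against known cell types or sample groups. Perfect mixing alone can also result from overcorrection.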

Biological Validation:

- Check preservation of known biological patterns in m6A lncRNAs

- Verify that established m6A-modified lncRNAs show consistent expression

- Ensure differential expression results align with prior knowledge

Diagnostic Visualization:

- Compare PCA plots before and after correction

- Visualize UMAP/t-SNE embeddings with batch and condition labels

- Examine distribution of key m6A regulators across batches

Batch Effect Correction Workflow with Quality Control

Selecting the appropriate batch effect correction algorithm depends on your specific experimental design, data type, and the extent of confounding between batch and biological factors. For multi-cohort m6A lncRNA validation studies, consider deep learning methods like DESC or CarDEC for their ability to handle complex batch effects while preserving subtle biological signals. Always validate correction efficacy using both technical metrics and biological knowledge to ensure meaningful results in your epitranscriptomic research.

Frequently Asked Questions

What is a batch effect and why is it problematic in multi-omics research? Batch effects are technical variations in data that arise from differences in experimental conditions rather than biological differences. These can occur due to different sequencing runs, reagent lots, personnel, protocols, or instrumentation across laboratories [19] [8] [10]. In multi-cohort m6A lncRNA studies, batch effects can skew analysis, generate false positives/negatives in differential expression analysis, mislead clustering algorithms, and compromise pathway enrichment results, ultimately threatening the validity of your findings [19] [10].

When should I use a ratio-based method over other batch effect correction algorithms? Ratio-based methods are particularly powerful in confounded experimental designs where biological factors of interest are completely confounded with batch factors [19]. For example, when all samples from biological group A are processed in one batch and all samples from group B in another, traditional correction methods may fail or remove genuine biological signal. The ratio-based approach excels in these challenging scenarios by scaling data relative to stable reference materials included in each batch [19].

How do I detect batch effects in my dataset before correction?

- Visualization: Use Principal Component Analysis (PCA) or t-SNE/UMAP plots to see if samples cluster by batch rather than biological condition [8] [10]

- Quantitative Metrics: Employ metrics such as normalized mutual information (NMI), the adjusted Rand index (ARI), or kBET to quantitatively assess batch separation [8]

- Comparative Analysis: Examine if the same samples processed in different batches show significant technical variation [19]

What are the signs of overcorrection in batch effect adjustment?

- Cluster-specific markers comprise genes with widespread high expression (e.g., ribosomal genes)

- Substantial overlap among markers specific to different clusters

- Absence of expected canonical markers for known cell types

- Scarcity of differential expression hits in pathways expected based on sample composition [8]
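The second warning sign above, substantial overlap between markers of different clusters, can be screened by computing pairwise Jaccard similarity between cluster marker sets. The 0.5 cutoff below is an arbitrary illustrative threshold, not a published criterion, and the function name is hypothetical.

```python
from itertools import combinations

def overlapping_marker_pairs(markers, threshold=0.5):
    """Return cluster pairs whose marker sets have Jaccard similarity >= threshold.

    markers: dict mapping cluster name -> iterable of marker gene names.
    """
    flagged = []
    for (c1, m1), (c2, m2) in combinations(sorted(markers.items()), 2):
        s1, s2 = set(m1), set(m2)
        union = s1 | s2
        jaccard = len(s1 & s2) / len(union) if union else 0.0
        if jaccard >= threshold:
            flagged.append((c1, c2, round(jaccard, 3)))
    return flagged
```

Flagged pairs whose shared markers are dominated by broadly expressed genes, such as ribosomal proteins, match the first warning sign as well. Together these make overcorrection more likely.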

Troubleshooting Guides

Problem: Poor Data Integration in Confounded m6A lncRNA Multi-Cohort Studies

Symptoms:

- Samples cluster primarily by batch or cohort origin in PCA/t-SNE plots

- Inability to validate findings across different cohorts

- Biological signals appear inconsistent when integrating data from multiple sources

Solution: Implement Reference Material-Based Ratio Correction

Experimental Protocol:

- Reference Material Selection: Include stable, well-characterized reference materials (e.g., Quartet multiomics reference materials) in each batch during sample processing [19]

- Concurrent Profiling: Process both study samples and reference materials under identical experimental conditions

- Ratio Transformation: Convert absolute feature values to ratios by scaling against reference material measurements

- Data Integration: Combine ratio-scaled data from multiple batches for downstream analysis

Implementation Code:
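The sketch below shows the ratio transformation. It assumes log2-scale input, so the ratio to the reference becomes a difference. Each batch's denominator is the mean of that batch's reference-material replicates. The function and variable names are hypothetical, and the sketch assumes every batch contains at least one reference replicate.

```python
from statistics import mean

def ratio_scale(log2_expr, batches, is_reference):
    """Scale study samples to the reference material profiled in the same batch.

    log2_expr: dict mapping feature -> list of log2 values, one per sample.
    batches: batch label per sample.
    is_reference: True for reference-material replicates, False for study samples.
    Returns log2 ratios (sample minus batch reference level) for study samples only.
    """
    n = len(batches)
    study_idx = [j for j in range(n) if not is_reference[j]]
    scaled = {}
    for feature, values in log2_expr.items():
        ref_level = {}
        for b in set(batches):
            refs = [values[j] for j in range(n) if batches[j] == b and is_reference[j]]
            if not refs:
                raise ValueError(f"batch {b!r} has no reference replicate")
            # averaging on the log2 scale = geometric mean of the replicates
            ref_level[b] = mean(refs)
        # log2(sample / reference) == log2(sample) - log2(reference)
        scaled[feature] = [values[j] - ref_level[batches[j]] for j in study_idx]
    return scaled
```

Consider a study sample that reads 2 log2 units above the reference in each of two batches. It receives the same ratio value in both batches, even if the batches differ by a constant offset.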

Problem: Choosing Between Batch Effect Correction Methods

Decision Framework:

| Scenario | Recommended Method | Rationale |
|---|---|---|
| Balanced design (biological groups evenly distributed across batches) | ComBat, Harmony, limma's removeBatchEffect | Effective when biological and technical factors aren't confounded [19] [10] |
| Completely confounded design (batch and group variables aligned) | Ratio-based scaling with reference materials | Preserves biological signal that other methods may remove [19] |
| Unknown or complex batch structure | SVA, RUVSeq | Estimate unmodeled sources of unwanted variation from the data (surrogate variables in SVA; control genes or replicate samples in RUVSeq) [19] |
| Single-cell RNA-seq data | Seurat, Harmony, LIGER | Addresses data sparsity and high dimensionality of single-cell data [8] [7] |

Problem: Validation of m6A lncRNA Findings Across Multiple Cohorts

Symptoms:

- Identified biomarkers fail to replicate in independent cohorts

- Inconsistent prognostic signatures across studies

- Technical variability masks true biological signals

Solution: Unified Ratio-Based Framework for Cross-Cohort Validation

Workflow Implementation:

- Standardize Reference Materials: Use common reference materials across all participating cohorts

- Coordinate Processing: Establish standardized protocols for reference material inclusion and processing

- Centralized Ratio Calculation: Apply consistent ratio-based normalization across all datasets

- Batch-Agnostic Analysis: Conduct downstream m6A lncRNA validation on ratio-scaled data

Performance Comparison of Batch Effect Correction Methods

The table below summarizes the relative performance of batch-effect correction algorithms in multiomics studies. The ratings are drawn from a comprehensive assessment that used clinically relevant metrics, such as accuracy in identifying differentially expressed features (DEFs), predictive model robustness, and cross-batch sample clustering accuracy [19]:

| Method | Confounded Design Performance | Biological Signal Preservation | Implementation Complexity | Best Use Cases |
|---|---|---|---|---|
| Ratio-Based Scaling | Excellent | High | Moderate | Completely confounded designs, multi-cohort studies [19] |
| ComBat | Poor to Fair | Variable in confounded designs [19] | Low | Balanced designs, known batch effects [19] [10] |
| Harmony | Fair | Moderate | Low to Moderate | Single-cell data, balanced designs [19] [8] |
| SVA | Fair | Variable | Moderate | Unknown batch effects, surrogate variable identification [19] |
| RUVSeq | Fair | Variable | Moderate | Unwanted variation removal with control genes [19] |
| limma removeBatchEffect | Poor in confounded designs [19] | Low in confounded designs [19] | Low | Balanced designs, inclusion as covariate [10] |

Experimental Protocols

Reference Material-Based Ratio Correction Protocol

Purpose: To eliminate batch effects in completely confounded multi-cohort m6A lncRNA studies using ratio-based scaling to reference materials.

Materials:

- Well-characterized reference materials (e.g., Quartet multiomics reference materials) [19]

- Study samples from multiple cohorts

- Standardized RNA extraction and library preparation kits

- Sequencing platform

Procedure:

Experimental Design:

- Include reference materials in each processing batch

- Ensure consistent number of reference material replicates across batches

- Process all samples (reference and study) using identical protocols

Data Generation:

- Process samples in batches reflecting your study design

- Generate transcriptomics data using standardized pipelines

- Quality control assessment on all samples

Ratio Calculation:

- For each feature (lncRNA, m6A regulator) in each study sample:

- Calculate ratio = Study sample feature value / Reference material feature value

- Use average of reference material replicates if multiple replicates available

- For each feature (lncRNA, m6A regulator) in each study sample:

Downstream Analysis:

- Proceed with differential expression analysis on ratio-scaled data

- Implement prognostic modeling using integrated ratio-scaled datasets

- Validate findings across cohorts using consistent ratio-based framework
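The ratio calculation step can be sketched in a few lines of Python. This is a minimal illustration, not the implementation used in [19]. It assumes three things: expression values are on a linear (non-log) scale, every batch contains at least one reference material replicate, and sample IDs are unique across batches. The function name `ratio_scale`, the nested-dictionary layout, and the feature name `LINC_X` are hypothetical choices for this example.

```python
from statistics import mean


def ratio_scale(batches, reference_ids):
    """Ratio-scale study samples to the reference replicates profiled in the same batch.

    batches: {batch_name: {sample_id: {feature: value}}}, values on a linear scale (assumption)
    reference_ids: set of sample IDs that are reference material replicates
    Returns {sample_id: {feature: ratio}} for study samples only.
    """
    scaled = {}
    for batch_name, samples in batches.items():
        refs = [values for sid, values in samples.items() if sid in reference_ids]
        if not refs:
            raise ValueError(f"batch {batch_name!r} has no reference material replicates")
        # Average the reference replicates feature by feature (the denominator)
        ref_mean = {f: mean(r[f] for r in refs) for f in refs[0]}
        for sid, values in samples.items():
            if sid in reference_ids:
                continue
            # Features with a zero reference mean are skipped to avoid division by zero
            scaled[sid] = {f: values[f] / ref_mean[f] for f in ref_mean if ref_mean[f] > 0}
    return scaled


# Toy data: "LINC_X" is a hypothetical lncRNA; all values are invented for illustration
batches = {
    "batch1": {
        "ref_1": {"LINC_X": 10.0, "METTL3": 4.0},
        "ref_2": {"LINC_X": 30.0, "METTL3": 4.0},
        "tumor_1": {"LINC_X": 40.0, "METTL3": 8.0},
    },
    "batch2": {
        "ref_3": {"LINC_X": 100.0, "METTL3": 20.0},
        "tumor_2": {"LINC_X": 200.0, "METTL3": 10.0},
    },
}
print(ratio_scale(batches, {"ref_1", "ref_2", "ref_3"}))
# {'tumor_1': {'LINC_X': 2.0, 'METTL3': 2.0}, 'tumor_2': {'LINC_X': 2.0, 'METTL3': 0.5}}
```

In the toy data, the raw LINC_X value of tumor_2 is five times that of tumor_1. Both samples still scale to 2.0, because the batch2 reference is inflated by the same factor. This cancellation is the batch-effect removal that the protocol relies on. If your values are already log2-transformed, subtract the mean log2 reference value instead. This corresponds to scaling by the geometric rather than the arithmetic mean of the replicates.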

Validation Metrics:

- Signal-to-noise ratio (SNR) for biological group separation

- Relative correlation (RC) coefficient between datasets

- Classification accuracy for sample-donor matching [19]
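As a rough illustration of the SNR metric, the sketch below compares two quantities. The first is the mean distance between samples from different biological groups, treated as signal. The second is the mean distance between samples from the same group, treated as noise. This is a simplification, not the exact formula from [19]. The published metric is computed on principal components, whereas this sketch uses Euclidean distance on the raw feature vectors. The decibel form 10·log10(between/within) is also an assumption made here. The function name `snr_db` is hypothetical.

```python
import math
from itertools import combinations


def snr_db(profiles, groups):
    """Simplified signal-to-noise ratio in decibels.

    profiles: {sample_id: [feature values]} (all vectors the same length)
    groups: {sample_id: biological group or donor label}
    Signal = mean Euclidean distance between samples of different groups;
    noise = mean distance between samples of the same group.
    """
    between, within = [], []
    for a, b in combinations(sorted(profiles), 2):
        d = math.dist(profiles[a], profiles[b])
        (within if groups[a] == groups[b] else between).append(d)
    if not between or not within:
        raise ValueError("need >= 2 groups and >= 1 group with replicates")
    signal = sum(between) / len(between)
    noise = sum(within) / len(within)
    if noise == 0:
        return math.inf  # identical replicates: no measurable noise
    if signal == 0:
        return -math.inf  # groups indistinguishable
    return 10 * math.log10(signal / noise)


# Two groups whose replicates differ by 1 unit while the groups differ by about 10 units
profiles = {"A1": [0.0, 0.0], "A2": [0.0, 1.0], "B1": [10.0, 0.0], "B2": [10.0, 1.0]}
groups = {"A1": "A", "A2": "A", "B1": "B", "B2": "B"}
print(round(snr_db(profiles, groups), 2))  # 10.01
```

A successful correction should raise the SNR for biological groups, or at least keep it stable, while samples stop separating by batch.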

The Scientist's Toolkit: Research Reagent Solutions

| Reagent/Material | Function | Application Notes |
|---|---|---|
| Quartet Multiomics Reference Materials | Provides stable reference for ratio-based scaling across DNA, RNA, protein, and metabolite levels [19] | Derived from matched cell lines from a monozygotic twin family; enables cross-omics integration |
| Commercial RNA Reference Standards | Technical controls for transcriptomics batch effects | Useful when project-specific reference materials are unavailable |
| Multiplexed Sequencing Kits | Allow pooling of samples across batches during sequencing | Reduce sequencing-based batch effects; enable reference material inclusion in each lane |
| Stable Cell Line Pools | Consistent biological reference across experiments | Can be engineered to express specific m6A regulators or lncRNAs of interest |
| Synthetic RNA Spike-ins | External controls for monitoring technical variation | Particularly valuable for lncRNA quantification normalization |


Advanced Implementation: Workflow for Multi-Cohort m6A lncRNA Studies

This workflow emphasizes the critical placement of ratio-based scaling after initial preprocessing but before multi-cohort integration and final analysis, ensuring batch effects are addressed prior to cross-study validation.

Key Considerations for Success

Reference Material Characterization: Ensure your reference materials are well-characterized and stable across the expected timeline of your multi-cohort study [19]

Experimental Consistency: Maintain consistent processing of reference materials across all batches and cohorts

Quality Assessment: Implement rigorous QC metrics to verify ratio-based correction effectiveness using both visual (PCA) and quantitative metrics [8]

Method Validation: Confirm that biological signals of interest are preserved while technical artifacts are removed through positive and negative control analyses

By implementing this ratio-based framework, researchers can overcome the critical challenge of confounded designs in multi-cohort m6A lncRNA studies, enabling robust cross-cohort validation and accelerating biomarker discovery and therapeutic development.

Frequently Asked Questions (FAQs)

Q1: Why is randomization the first line of defense against batch effects in multi-cohort m6A lncRNA studies?

Randomization is a statistical process that assigns samples or participants to experimental groups by chance. This eliminates systematic bias, so that technical variations (batch effects) are, on average, distributed evenly across groups [32] [33]. In multi-cohort m6A lncRNA research, samples are processed at different times, in different locations, or on different platforms. Randomization prevents these batch effects from becoming confounded with your biological factors of interest (e.g., disease status). This matters because batch effects are technical variations that can confound analysis, leading to false-positive or false-negative findings [19] [34]. Proper randomization preserves the integrity of your data, allowing you to attribute differences in lncRNA expression or modification levels to biology rather than to technical artifacts.

Q2: What are the main randomization methods, and how do I choose one for my study?

The choice of randomization method depends on your study's scale and specific need for balance in sample size or prognostic factors.

Table 1: Comparison of Common Randomization Methods

| Method | Key Principle | Best For | Advantages | Disadvantages |
|---|---|---|---|---|
| Simple Randomization [32] [35] | Assigning subjects/samples purely by chance, like a coin toss. | Large-scale studies where the law of large numbers ensures balance. | Maximizes randomness and is easy to implement. | High risk of group size and covariate imbalance in small studies. |
| Block Randomization [32] [33] | Randomly assigning subjects within small, predefined blocks (e.g., 4 or 6). | Studies with staggered enrollment or a small sample size where maintaining equal group sizes over time is crucial. | Ensures balanced group sizes at the end of every block. | If block size is known, the final allocation(s) in a block can be predicted, introducing selection bias. |
| Stratified Randomization [32] [33] | Performing randomization separately within subgroups (strata) based on key prognostic factors (e.g., cancer stage, sex). | Studies where balancing specific, known covariates across groups is essential for the validity of the results. | Improves balance for important known factors and can increase statistical power. | Becomes impractical with too many stratification factors, as it leads to numerous, sparsely populated strata. |
| Adaptive Randomization (Minimization) [32] [35] | Dynamically adjusting the allocation probability for each new subject to minimize imbalance in multiple prognostic factors. | Complex studies with several important prognostic factors that are difficult to balance with stratified randomization. | Actively minimizes imbalance across multiple known factors, even with small sample sizes. | Does not meet all the requirements of pure randomization and requires specialized software. |
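Stratified and block randomization are often combined as permuted blocks within strata. The sketch below is an illustrative Python version under stated assumptions. Samples arrive in a fixed order, and every block contains each arm equally often. The function and argument names (`stratified_block_randomize`, `stratum_of`) and the arm labels are hypothetical.

```python
import random
from collections import Counter


def stratified_block_randomize(samples, stratum_of, arms=("A", "B"), block_size=4, seed=None):
    """Permuted-block randomization carried out separately within each stratum.

    samples: sample IDs in enrollment/processing order
    stratum_of: {sample_id: stratum label}, e.g. tumor stage
    Returns {sample_id: arm}.
    """
    if block_size % len(arms):
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)
    open_blocks = {}  # stratum -> allocations remaining in its current block
    assignment = {}
    for sid in samples:
        stratum = stratum_of[sid]
        block = open_blocks.get(stratum)
        if not block:
            # Start a new block holding each arm equally often, in random order
            block = list(arms) * (block_size // len(arms))
            rng.shuffle(block)
            open_blocks[stratum] = block
        assignment[sid] = block.pop()
    return assignment


# Hypothetical example: 12 samples, interleaved across two stage strata
samples = [f"s{i}" for i in range(12)]
stratum_of = {s: ("I-II" if i % 3 else "III-IV") for i, s in enumerate(samples)}
alloc = stratified_block_randomize(samples, stratum_of, seed=1)
for stratum in sorted(set(stratum_of.values())):
    print(stratum, Counter(alloc[s] for s in samples if stratum_of[s] == stratum))
```

Table 1 notes that a fixed, known block size makes the last allocation in each block predictable. For this reason, some designs vary the block size at random, a refinement omitted here.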

Q3: A batch of samples was mislabeled, and the samples were randomized using incorrect strata information. How should we handle this error?

Do not attempt to "undo" or "fix" the randomization. The intention-to-treat (ITT) principle, a gold standard in randomized trials, states that all randomized samples should be analyzed in their initially assigned groups to avoid introducing bias [36]. Instead, you should:

- Accept the randomization: Keep the samples in the groups they were originally assigned to.

- Document the error: Meticulously record the correct baseline information (e.g., the true stratum) for each affected sample.

- Account for it in analysis: During the statistical analysis, use the correctly recorded baseline data as a covariate to adjust for the initial error [36]. Attempting to correct the error post-hoc can lead to further complications and selection bias.

Q4: What is the role of balanced experimental design alongside randomization?

While randomization introduces chance to eliminate bias, balancing is a proactive technique to enforce equality across conditions [37]. In the context of an m6A lncRNA experiment, this means:

- Balancing Sample Characteristics: Using stratified or adaptive randomization to ensure that known confounders (e.g., age, sex, tumor stage) are equally represented in your compared groups.

- Balancing Technical Processing: Ensuring that samples from different biological groups are evenly distributed across processing batches, days, and sequencing lanes. This prevents "confounded scenarios," where a batch effect is indistinguishable from a true biological effect (e.g., all control samples are processed in Batch 1 and all disease samples in Batch 2) [19]. A balanced design is the most effective way to mitigate such confounding.
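The technical-balancing point can be made concrete with a small assignment routine. The helper below is hypothetical (`balanced_batches` is not from any library). It shuffles samples within each biological group and deals them round-robin across processing batches. As a result, each group is spread as evenly as possible and batch sizes differ by at most one sample.

```python
import random
from collections import Counter


def balanced_batches(samples, group_of, n_batches, seed=None):
    """Assign samples to batches 0..n_batches-1, spreading every group evenly.

    samples: iterable of sample IDs
    group_of: {sample_id: biological group}, e.g. "tumor" / "normal"
    """
    rng = random.Random(seed)
    by_group = {}
    for sid in samples:
        by_group.setdefault(group_of[sid], []).append(sid)
    batch_of = {}
    position = 0  # shared counter so the round-robin continues across groups
    for group in sorted(by_group):
        members = by_group[group]
        rng.shuffle(members)  # random order within the group
        for sid in members:
            batch_of[sid] = position % n_batches
            position += 1
    return batch_of


samples = [f"T{i}" for i in range(6)] + [f"N{i}" for i in range(6)]
group_of = {s: ("tumor" if s.startswith("T") else "normal") for s in samples}
batch_of = balanced_batches(samples, group_of, n_batches=3, seed=7)
print(sorted(Counter((batch_of[s], group_of[s]) for s in samples).items()))
# [((0, 'normal'), 2), ((0, 'tumor'), 2), ((1, 'normal'), 2), ((1, 'tumor'), 2), ((2, 'normal'), 2), ((2, 'tumor'), 2)]
```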

Troubleshooting Guides

Problem: Imbalanced Groups Despite Randomization

You have finished your multi-cohort m6A lncRNA study and find that a key prognostic factor (e.g., patient age) is not equally distributed between your high-risk and low-risk groups, potentially skewing your results.

- Possible Cause: Simple randomization can lead to chance imbalances, especially in small sample sizes [32].

- Solution:

- At the Design Stage: For future studies, switch from simple randomization to a method that guarantees balance for known factors. Stratified Randomization is the most direct solution if you have one or two key factors. For more complex studies with multiple factors, Adaptive Randomization (Minimization) is highly effective [32] [33].

- At the Analysis Stage: To salvage the current study, you must account for the imbalance statistically. Include the imbalanced prognostic factor as a covariate in your regression model (e.g., Cox regression for survival analysis). This statistically adjusts for the factor's effect, helping to isolate the true effect of your m6A lncRNA signature [38] [39].
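For the design-stage fix, minimization can be sketched as follows. This is a simplified Pocock-Simon-style procedure written for illustration. The imbalance measure (range of arm counts, summed over factors), the probability `p_best` of choosing the imbalance-minimizing arm, and all names are assumptions of this sketch rather than a prescribed standard.

```python
import random


def minimization_assign(new_factors, history, arms=("A", "B"), p_best=0.8, rng=None):
    """Choose an arm for one incoming sample by minimizing marginal imbalance.

    new_factors: {factor: level} for the incoming sample, e.g. {"stage": "III", "sex": "F"}
    history: list of ({factor: level}, arm) pairs for samples already assigned
    p_best: probability of taking the arm that minimizes imbalance (keeps a random element)
    """
    rng = rng if rng is not None else random.Random()

    def imbalance_if(candidate):
        total = 0
        for factor, level in new_factors.items():
            # Count earlier samples sharing this factor level, per arm
            counts = {arm: 0 for arm in arms}
            for factors, arm in history:
                if factors.get(factor) == level:
                    counts[arm] += 1
            counts[candidate] += 1  # pretend the new sample joins `candidate`
            total += max(counts.values()) - min(counts.values())
        return total

    scores = {arm: imbalance_if(arm) for arm in arms}
    best = min(scores.values())
    preferred = [arm for arm in arms if scores[arm] == best]
    others = [arm for arm in arms if scores[arm] != best]
    if not others or rng.random() < p_best:
        return rng.choice(preferred)
    return rng.choice(others)


# Two stage III samples already went to arm A, so a third should lean toward B
history = [({"stage": "III", "sex": "F"}, "A"), ({"stage": "III", "sex": "M"}, "A")]
print(minimization_assign({"stage": "III", "sex": "F"}, history, p_best=1.0))  # B
```

Keeping `p_best` below 1 preserves some unpredictability. This reflects the trade-off listed in Table 1, where minimization does not meet all the requirements of pure randomization.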

Problem: Confounded Batch and Biological Effects

Your data shows a strong signal, but you realize that all samples from one clinical site (Batch A) were assigned to the treatment group, and all samples from another site (Batch B) were controls. You cannot tell if the observed effect is due to the treatment or the site-specific processing protocols.

- Possible Cause: A severe failure in experimental design where batch factors and biological factors are completely confounded [19].

- Solution:

- Prevention is Key: There is no perfect statistical fix for a confounded design. The best solution is to avoid it through careful balanced experimental design [37]. Ensure samples from all biological groups are represented in every batch.

- Use Reference Materials: Concurrently profile well-characterized reference materials (e.g., control cell line RNAs) in every batch [19]. The data from these references can be used to create ratio-based values (scaling study sample values to the reference), which is one of the most effective methods for correcting confounded batch effects [19].

- Statistical Correction (with caution): Advanced batch-effect correction algorithms (BECAs) like ComBat or Harmony can be attempted, but their performance is limited in strongly confounded scenarios and may remove genuine biological signal [19] [34].
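Before choosing among these options, it helps to check the design programmatically. The hypothetical helper below is not part of any batch-correction package. It flags batches that contain only one biological group. When every batch does, no within-batch comparison of groups exists, and the design is completely confounded in the sense described above.

```python
from collections import defaultdict


def confounding_report(batch_of, group_of):
    """Summarize how biological groups are distributed across batches.

    batch_of: {sample_id: batch label}; group_of: {sample_id: biological group}
    """
    groups_in_batch = defaultdict(set)
    for sid, batch in batch_of.items():
        groups_in_batch[batch].add(group_of[sid])
    all_groups = set().union(*groups_in_batch.values())
    single_group = sorted(b for b, gs in groups_in_batch.items() if len(gs) == 1)
    return {
        # Batches lacking at least one group: partial imbalance worth reviewing
        "incomplete_batches": sorted(b for b, gs in groups_in_batch.items() if gs != all_groups),
        "single_group_batches": single_group,
        # No batch holds two or more groups, so group and batch effects cannot be separated
        "completely_confounded": len(all_groups) > 1 and len(single_group) == len(groups_in_batch),
    }


# The scenario above: site A processed only treatment samples, site B only controls
site = {"s1": "A", "s2": "A", "s3": "B", "s4": "B"}
arm = {"s1": "treatment", "s2": "treatment", "s3": "control", "s4": "control"}
print(confounding_report(site, arm)["completely_confounded"])  # True
```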

Diagram: The Impact of Experimental Design on Multi-Batch m6A lncRNA Studies

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 2: Key Materials for Robust m6A lncRNA Validation Studies

| Research Reagent / Material | Function in the Context of Randomization & Batch Effect Defense |
|---|---|
| Certified Reference Materials (CRMs) | Well-characterized control samples (e.g., synthetic RNA spike-ins or commercial reference cell lines) processed in every experimental batch. They serve as an internal standard for ratio-based batch effect correction [19]. |
| Interactive Response Technology (IRT/IWRS) | A centralized, computerized system to implement complex randomization schemes (stratified, block) across multiple clinical sites in a trial, ensuring allocation concealment and protocol adherence [33]. |
| Pre-specified Randomization Protocol | A detailed document created before the study begins, defining the allocation ratio, stratification factors, block sizes, and method. This prevents ad-hoc decisions and mitigates bias [36] [33]. |
| Stratification Factors | Pre-identified key prognostic variables (e.g., specific cancer stage, age group, known genetic mutation) used to create strata for stratified randomization, ensuring these factors are balanced across treatment groups [32] [39]. |


Technical Support Center: Troubleshooting & FAQs

Q1: After merging my TCGA, GEO, and in-house data, my PCA plot shows strong separation by dataset, not biological group. What is this and how do I fix it?

A: This is a classic sign of a major batch effect. The technical differences between platforms (e.g., different sequencing machines, protocols, labs) are overshadowing the biological signal.

- Solution: Filter the data individually for each cohort, then apply a batch effect correction algorithm to the merged data. Because ComBat-seq models raw counts, normalize after correction rather than before.

- Protocol - ComBat-seq Correction:

- Input: Raw count matrices from each cohort, merged on shared features into a single matrix, plus a vector giving each sample's cohort (batch).

- Software: R package sva

- Code:

Q2: My in-house cohort uses a different lncRNA annotation (GENCODE v35) than the public data (GENCODE v19). How do I harmonize them?

A: Inconsistent annotations will lead to missing or incorrect data. You must lift over all annotations to a common version.