Preventing Overfitting in m6A-lncRNA Signatures: A Cross-Validation Guide for Robust Biomarker Development

Abstract

This article provides a comprehensive framework for researchers and drug development professionals to construct and validate prognostic m6A-related lncRNA signatures while rigorously preventing overfitting. It covers the foundational biology of m6A and lncRNA interactions, practical methodologies for model construction using techniques like LASSO regression, advanced troubleshooting with interpretable machine learning, and robust validation strategies. By synthesizing current best practices from computational biology and clinical research, this guide aims to enhance the reproducibility, clinical translatability, and predictive power of m6A-lncRNA models in cancer research and therapeutic development.

The Biological Bridge: Understanding m6A and lncRNA Interactions in Cancer

FAQs: Core Concepts of m6A RNA Methylation

1. What is m6A RNA methylation and why is it important? N6-methyladenosine (m6A) is the most prevalent, abundant, and conserved internal chemical modification found in messenger RNAs (mRNAs) and various non-coding RNAs in eukaryotes [1] [2]. It is a dynamic and reversible process that regulates several facets of RNA metabolism, including RNA splicing, export, localization, translation, and stability [1] [3]. Due to its comprehensive roles in fundamental biological processes, m6A is crucial in embryonic development, cell fate determination, and a variety of physiological processes. Dysregulation of m6A is closely linked to cancer progression, metastasis, and drug resistance [2].

2. What are the core components of the m6A writer complex? The m6A modification is installed by a multi-component methyltransferase complex ("writer"). The core complex includes [1] [3] [4]:

- METTL3: The catalytic subunit that transfers the methyl group.

- METTL14: Serves as an essential RNA-binding scaffold, allosterically activating and enhancing METTL3's catalytic activity. It forms a stable heterodimer with METTL3.

- WTAP: A regulatory subunit that interacts with METTL3 and METTL14, facilitating their localization to nuclear speckles and modulating m6A levels, though it lacks catalytic activity itself.

Other important adapter proteins include KIAA1429 (VIRMA), which guides region-selective methylation, particularly in the 3'UTR; RBM15/RBM15B, which recruits the complex to specific RNA sites; and ZC3H13, which is critical for the nuclear localization of the writer complex [1].

3. Which proteins serve as m6A erasers? The removal of m6A is performed by demethylases ("erasers"), making the modification reversible. The two known m6A erasers are [4] [2]:

- FTO (Fat mass and obesity-associated protein): The first m6A demethylase discovered. It preferentially demethylates m6Am, an m6A-related modification, but also acts on m6A.

- ALKBH5: The other major m6A demethylase, which plays critical roles in various biological processes and cancers.

The activity and specificity of these erasers can be highly context-dependent, varying by cell type, subcellular localization, and external stimuli [3].

4. How do m6A reader proteins exert their functions? m6A reader proteins recognize and bind to m6A-modified RNAs, executing the functional outcomes of the modification. They contain various m6A-binding domains and can be categorized as follows [4]:

- YTH Domain Family: This is a major class of readers.

  - YTHDF1: Promotes translation efficiency, often in cooperation with YTHDF3.

  - YTHDF2: Regulates mRNA stability and degradation.

  - YTHDF3: Assists in translation and decay.

  - YTHDC1: Regulates alternative splicing in the nucleus.

  - YTHDC2: Enhances translation efficiency and can decrease RNA abundance.

- Other Readers: These include:

  - IGF2BPs (IGF2BP1/2/3): Promote the stability and storage of target mRNAs.

  - HNRNPs (e.g., HNRNPC/G): Bind to m6A-modified RNAs and regulate their processing by facilitating structural changes in the RNA.

Troubleshooting Guides for m6A Research

Issue 1: Inconsistent m6A-seq/MeRIP-seq Results

| Potential Cause | Solution / Verification Step |

|---|---|

| Inadequate Immunoprecipitation (IP) Efficiency | Use knockout-validated antibodies for IP [4]. Include positive and negative control RNAs to verify IP specificity and efficiency. |

| RNA Degradation or Low Quality | Always use RNA with high integrity (RIN > 8). Perform all RNA handling and fragmentation steps on ice with RNase-free reagents. |

| Insufficient Input RNA | Ensure you are using the recommended amount of input RNA (typically 1-5 µg for total RNA). Pilot experiments can help determine the optimal input for your sample type. |

| Improper Normalization | Use spike-in RNAs (e.g., from other species) with known m6A status to control for technical variability during library preparation and sequencing [5]. |

| High Background Noise | Optimize washing stringency after IP. For single-nucleotide resolution, consider advanced methods like miCLIP, which can reduce background and map sites more precisely [4]. |

Issue 2: Overfitting in m6A-Related Prognostic Model Construction

A common challenge in constructing prognostic signatures based on m6A-related lncRNAs is the risk of overfitting, where a model performs well on training data but poorly on independent validation data.

- Preventive Protocol: Rigorous Cross-Validation

- Data Splitting: Divide your entire dataset (e.g., from TCGA) randomly into a training set (e.g., 70%) and a hold-out test set (e.g., 30%). The test set should not be used until the final model evaluation.

- Feature Selection on Training Set: Within the training set only, use univariate Cox regression to identify m6A-related lncRNAs significantly associated with survival.

- LASSO Regression: Apply LASSO (Least Absolute Shrinkage and Selection Operator) Cox regression to the training set. LASSO penalizes model complexity, shrinking the coefficients of less informative variables toward zero and setting many of them exactly to zero, which reduces the risk of overfitting [6] [7]. Determine the penalty parameter (λ) by tenfold cross-validation within the training set, choosing either the λ that gives the minimum partial likelihood deviance or the largest λ within one standard error of that minimum (the "1-SE" rule, which yields a more parsimonious model).

- Model Validation: Apply the final model with the selected lncRNAs and their coefficients from the training set to the untouched test set. Evaluate its performance on the test set using Kaplan-Meier survival analysis and time-dependent Receiver Operating Characteristic (ROC) curves [6] [7].

- External Validation: For maximum robustness, validate the model on a completely independent cohort from a different source (e.g., GEO database) [8].
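The data-splitting step above can be sketched as follows. This is a minimal illustration using only the Python standard library, not a prescribed implementation: the function names, the stratification by event status, and the toy cohort are assumptions made for the example. The point it shows is that the hold-out indices are fixed first, and cross-validation folds are assigned only within the training set.

```python
import random
from collections import defaultdict

def stratified_split(events, test_fraction=0.3, seed=0):
    """Return (train_idx, test_idx) with a similar event rate in both sets.

    Stratifying by event status is an assumption of this sketch.
    """
    rng = random.Random(seed)
    by_status = defaultdict(list)
    for i, status in enumerate(events):
        by_status[status].append(i)
    train, test = [], []
    for idx in by_status.values():
        rng.shuffle(idx)
        n_test = round(len(idx) * test_fraction)
        test.extend(idx[:n_test])
        train.extend(idx[n_test:])
    return sorted(train), sorted(test)

def kfold_assign(train_idx, k=10, seed=0):
    """Assign every training sample to one of k folds (fold ids 0..k-1)."""
    rng = random.Random(seed)
    shuffled = list(train_idx)
    rng.shuffle(shuffled)
    return {sample: pos % k for pos, sample in enumerate(shuffled)}

# Hypothetical cohort: 100 patients, 1 = death observed, 0 = censored.
events = [1] * 40 + [0] * 60
train_idx, test_idx = stratified_split(events, test_fraction=0.3)
folds = kfold_assign(train_idx, k=10)

# Univariate screening, LASSO, and lambda tuning would use train_idx only;
# test_idx is touched once, for the final evaluation.
print(len(train_idx), len(test_idx))
```

Fixing the random seed keeps the split reproducible, which makes it easier to confirm that no test-set sample entered feature selection.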

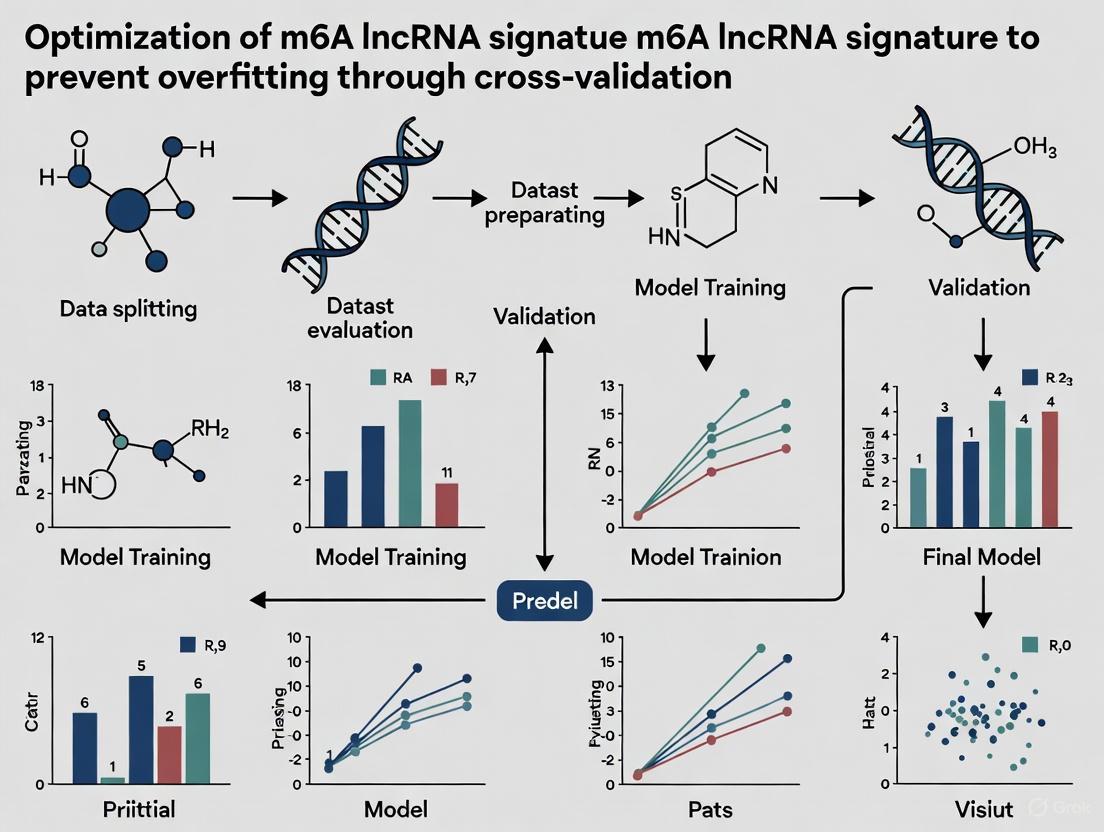

The following workflow outlines the key steps for building a robust prognostic model, integrating the cross-validation and feature selection methods described above to prevent overfitting.

Issue 3: Difficulty in Visualizing RNA Localization and Expression

- Solution A: Fluorescence In Situ Hybridization (FISH)

- Method: Use DNA or RNA probes complementary to your target RNA sequence, labeled with a fluorophore. This is highly sequence-specific and can be used to detect individual RNA strands in fixed cells [9].

- Troubleshooting: High background can be an issue. Consider derivatives like molecular beacons or Forced Intercalation (FIT) probes, which fluoresce only upon binding to the target, significantly reducing background signal [9].

- Solution B: MS2-MCP System for Live-Cell Imaging

- Method: Engineer the RNA of interest to include multiple MS2-binding sites (MBS). Co-express this with a GFP-fused MS2 coat protein (MCP). The GFP-MCP will bind to the MBS, allowing tracking of single mRNA molecules in living cells [9].

- Troubleshooting: Be aware that the large tag appendages might interfere with normal mRNA function or localization. Generating a transgenic organism for this can be time-consuming [9].

Research Reagent Solutions

The following table lists key reagents for studying m6A RNA methylation, drawing from validated research tools and methodologies.

| Reagent / Tool | Category | Primary Function / Application |

|---|---|---|

| METTL3 Antibody [4] | Writer | Immunoprecipitation (IP), Western Blot (WB), Immunohistochemistry (IHC) to study writer complex localization and expression. |

| ALKBH5 Antibody [4] | Eraser | WB, IHC to detect levels of the m6A demethylase. |

| YTHDF2 Antibody [4] | Reader | IP, WB, IHC, ICC/IF to investigate reader protein function and abundance. |

| m6A-Specific Antibody (e.g., ab151230) [4] | Detection | Core reagent for m6A mapping techniques (MeRIP-seq, miCLIP). |

| 5-Ethynyluridine (EU) [9] | Metabolic Labeling | Incorporates into newly transcribed RNA; can be visualized via click chemistry with a fluorophore for RNA dynamics studies. |

| LASSO Regression Model [6] [7] | Bioinformatics | Statistical method to prevent overfitting during prognostic signature construction by penalizing model complexity. |

| ssGSEA Algorithm [6] [7] | Bioinformatics | Used to evaluate immune cell infiltration and immune function scores in the tumor microenvironment based on m6A-related signatures. |

| Molecular Beacons / FIT Probes [9] | Imaging | Fluorescent probes for highly specific, low-background RNA visualization in live or fixed cells. |

m6A Regulators at a Glance

The table below provides a concise summary of the key proteins involved in m6A RNA methylation, highlighting their main components and functions.

| Regulator Type | Key Components | Primary Function |

|---|---|---|

| Writers | METTL3, METTL14, WTAP, KIAA1429 (VIRMA), RBM15/15B, ZC3H13 [1] [2] | Form a multi-protein complex that installs the m6A mark on RNA co-transcriptionally. METTL3 is the catalytic core. |

| Erasers | FTO, ALKBH5 [4] [2] | Enzymatically remove the m6A mark, enabling dynamic and reversible regulation of RNA methylation. |

| Readers | YTHDF1/2/3, YTHDC1/2, IGF2BP1/2/3, HNRNPC/G [4] [2] | Recognize and bind to m6A-modified RNAs, dictating the functional outcome (e.g., splicing, decay, translation). |

The following diagram illustrates the coordinated workflow of m6A methylation, from the installation of the mark by writers to its recognition by readers and removal by erasers, ultimately influencing the fate of the modified RNA.

Functional Roles of Long Non-Coding RNAs in Gene Regulation and Oncogenesis

Long non-coding RNAs (lncRNAs) are RNA molecules exceeding 200 nucleotides in length that lack protein-coding capacity. Once considered transcriptional "noise," they are now recognized as critical regulators of diverse cellular processes, with tissue-specific expression patterns particularly evident in tumors [10]. Their intricate involvement in tumorigenesis spans cancer initiation, progression, recurrence, metastasis, and chemotherapy resistance [10].

The functional significance of lncRNAs is profoundly influenced by post-transcriptional modifications, with N6-methyladenosine (m6A) emerging as a pivotal regulator. As the most common internal RNA modification in eukaryotes, m6A dynamically and reversibly fine-tunes RNA metabolism through writer (methyltransferases), eraser (demethylases), and reader (recognition proteins) proteins [11] [12]. This modification system significantly influences lncRNA generation, stability, and molecular interactions, creating a sophisticated regulatory layer in oncogenesis [13] [14].

Frequently Asked Questions (FAQs)

Q1: What fundamental roles do lncRNAs play in gene regulation and cancer development?

LncRNAs function through diverse mechanistic pathways to regulate gene expression. They can act as transcriptional regulators by modulating chromatin architecture and recruiting transcription factors, or influence post-transcriptional processes including RNA splicing, stability, and translation [10]. Through these mechanisms, lncRNAs impact critical cancer hallmarks such as uncontrolled cell proliferation, resistance to apoptosis, and metastatic potential [15]. Their expression patterns offer promising biomarkers for early cancer detection and prognosis, while their functional roles present opportunities for innovative therapeutic strategies [10].

Q2: How does m6A modification influence lncRNA function in cancer contexts?

m6A modification significantly impacts lncRNA stability, processing, and molecular interactions. For instance, METTL3-mediated m6A modification of lncRNA XIST suppresses colon cancer tumorigenicity and migration [11]. Similarly, YTHDF3 recognizes m6A-modified lncRNA GAS5, promoting its degradation and exacerbating colorectal cancer progression [16]. In bladder cancer, RBM15 and METTL3 synergistically promote m6A modification of specific lncRNAs, facilitating malignant progression [13]. These examples illustrate how m6A modifications can either promote or suppress tumorigenesis depending on the specific lncRNA and cellular context.

Q3: What practical strategies can prevent overfitting when developing m6A-related lncRNA prognostic signatures?

Robust prognostic model development requires careful statistical approaches. The following table summarizes key methodological considerations identified from multiple studies:

Table 1: Strategies for Preventing Overfitting in Prognostic Signature Development

| Method | Implementation | Study Example |

|---|---|---|

| LASSO Regression | Applies regularization to shrink coefficients and select most relevant features | Used in CRC [17], bladder cancer [13], and ovarian cancer [14] studies |

| Cross-Validation | Employ k-fold (typically 10-fold) validation during model training | Implemented in colon adenocarcinoma [11] and other cancer studies |

| Multi-Dataset Validation | Validate final model in independent patient cohorts from different sources | CRC models validated across 6 GEO datasets [18]; Ovarian cancer validated in GSE9891, GSE26193 [14] |

| External Experimental Validation | Confirm lncRNA expression in independent patient samples | CRC study validation in 55-patient in-house cohort [18]; Ovarian cancer validation in 60 clinical specimens [14] |

Q4: How can researchers identify authentic m6A-related lncRNAs for their studies?

Multiple complementary approaches can identify m6A-related lncRNAs. The most comprehensive strategy integrates:

- Co-expression Analysis: Calculate correlation coefficients between m6A regulators and lncRNAs (typically |R| > 0.4, p < 0.001) [11] [14]

- Database Mining: Utilize resources like M6A2Target documenting lncRNAs methylated or bound by m6A regulators [18]

- Experimental Evidence: Employ methylated RNA immunoprecipitation sequencing (MeRIP-seq) to confirm direct m6A modification

- Functional Impact Assessment: Evaluate expression changes following m6A regulator knockdown/overexpression [18]
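The co-expression filter in the first item can be sketched as below. This is an illustrative example under stated assumptions: the gene names and expression values are made up, the helper names are hypothetical, and the p-value comes from the Fisher z-transformation, a large-sample approximation chosen here to stay within the standard library (published analyses typically use an exact test from a statistics package).

```python
import math
from statistics import NormalDist

def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def approx_p_value(r, n):
    """Two-sided p-value via the Fisher z-transformation (approximation)."""
    r = max(min(r, 0.999999), -0.999999)  # keep atanh finite
    z = math.atanh(r) * math.sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))

def m6a_related(lnc_expr, regulator_expr, r_cut=0.4, p_cut=0.001):
    """Return (lncRNA, regulator, r) for pairs passing both thresholds."""
    hits = []
    for lnc, lx in lnc_expr.items():
        for reg, rx in regulator_expr.items():
            r = pearson_r(lx, rx)
            if abs(r) > r_cut and approx_p_value(r, len(lx)) < p_cut:
                hits.append((lnc, reg, round(r, 3)))
    return hits

# Toy data for 30 samples (values are illustrative, not real expression).
regulators = {"METTL3": [float(i) for i in range(30)]}
lncrnas = {
    "LNC_A": [i + (i * 7) % 5 for i in range(30)],  # tracks METTL3 closely
    "LNC_B": [(i * 7) % 11 for i in range(30)],     # essentially unrelated
}
hits = m6a_related(lncrnas, regulators)
print(hits)
```

Only `LNC_A` passes both thresholds in this toy data. Because every lncRNA is tested against every regulator, many pairs are compared, which is one reason the text recommends a stringent p-value cutoff.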

Troubleshooting Common Experimental Challenges

Problem: Inconsistent prognostic signature performance across validation cohorts

Solution:

- Ensure consistent normalization methods across training and validation datasets

- Account for batch effects using algorithms like "ComBat" when combining datasets [16]

- Verify that lncRNA detection probes are comparable across different platforms

- Consider biological variables including cancer subtypes, stages, and patient demographics that might affect signature performance

Problem: Difficulty distinguishing true m6A-related lncRNAs from incidental correlations

Solution:

- Apply stringent correlation thresholds (|R| > 0.4, p < 0.001) [11] [14]

- Require evidence from multiple identification methods (co-expression plus database or experimental support)

- Validate top candidates through experimental approaches such as RIP-qPCR or MeRIP-PCR

- Consider only lncRNAs with reasonable expression levels (e.g., median FPKM > 1) to ensure biological relevance [18]

Problem: Low predictive accuracy of m6A-lncRNA prognostic models

Solution:

- Incorporate clinical parameters with established prognostic value (e.g., pathologic stage) into nomograms [11]

- Consider integrating multiple RNA modification types (e.g., both m6A and m5C) for comprehensive signatures [16]

- Ensure proper feature selection through LASSO regression to eliminate redundant variables

- Evaluate time-dependent ROC curves at multiple intervals (1, 3, 5 years) to assess temporal performance [17]

Key Experimental Workflows

The development of robust m6A-related lncRNA signatures follows a systematic workflow that integrates bioinformatics analyses with experimental validation:

Diagram 1: m6A-LncRNA Signature Development Workflow

Research Reagent Solutions

Table 2: Essential Research Reagents for m6A-LncRNA Studies

| Reagent/Category | Specific Examples | Research Application |

|---|---|---|

| m6A Writers | METTL3, METTL14, METTL16, WTAP, RBM15/RBM15B, VIRMA, ZC3H13 | Methyltransferase enzymes that catalyze m6A modification [11] [13] |

| m6A Erasers | FTO, ALKBH5 | Demethylase enzymes that remove m6A modifications [11] [14] |

| m6A Readers | YTHDF1-3, YTHDC1-2, HNRNPC, HNRNPA2B1, IGF2BP1-3 | Recognition proteins that bind m6A-modified RNAs [18] [11] [14] |

| Data Resources | TCGA, GEO datasets (GSE17538, GSE39582, GSE9891, etc.) | Provide transcriptomic data and clinical information for analysis [18] [16] [14] |

| Analytical Tools | R packages: "limma", "DESeq2", "glmnet", "pRRophetic" | Differential expression, LASSO regression, drug sensitivity prediction [18] [11] |

Advanced Technical Considerations

Integrating Multi-Omics Data

Advanced m6A-lncRNA studies increasingly integrate multiple data types. For example, investigating cross-talk between m6A- and m5C-related lncRNAs in colorectal cancer has revealed complex regulatory networks affecting tumor microenvironment and immunotherapy response [16]. Such integrated approaches provide more comprehensive insights into cancer mechanisms than single-modification analyses.

Tumor Microenvironment and Immunotherapy Applications

m6A-related lncRNA signatures show promise in predicting immunotherapy responses. Studies have demonstrated that low-risk colorectal cancer patients based on m6A/m5C-related lncRNA profiles exhibit enhanced response to anti-PD-1/L1 immunotherapy [16]. Similarly, distinct risk groups show different sensitivities to various chemotherapeutic agents, enabling potential treatment stratification [11].

Functional Validation Approaches

Beyond computational predictions, rigorous functional validation is essential. This includes:

- In vitro assays measuring proliferation, invasion, and migration following lncRNA modulation

- In vivo models such as the N-methyl-N-nitrosourea (MNU)-induced rat bladder carcinoma model [13]

- Mechanistic studies investigating specific pathways (e.g., METTL3/RBM15 synergistic promotion of bladder cancer progression) [13]

The investigation of m6A-modified lncRNAs represents a frontier in cancer research, offering insights into tumor biology and promising clinical applications. Robust signature development requires meticulous attention to statistical methods, particularly overfitting prevention through regularization and multi-cohort validation. As research progresses, integrating these molecular signatures with clinical parameters and therapeutic response data will be essential for realizing their potential in personalized cancer medicine.

Core Molecular Mechanisms: How does m6A directly regulate lncRNA function?

N6-methyladenosine (m6A) regulates long non-coding RNA (lncRNA) function and stability through a complex interplay between writer, reader, and eraser proteins. This modification represents a critical layer of post-transcriptional control that significantly influences lncRNA biology.

Reader-Protein Mediated Stability Control: The m6A reader protein HNRNPA2B1 directly binds to m6A-modified lncRNAs to enhance their stability. A key example is the lncRNA NORHA, where HNRNPA2B1 binding at multiple m6A sites (including A261, A441, and A919) stabilizes the transcript in sow granulosa cells (sGCs). This stabilization promotes sGC apoptosis by activating the NORHA-FoxO1 axis, which subsequently represses cytochrome P450 family 19 subfamily A member 1 (CYP19A1) expression and suppresses 17β-estradiol biosynthesis [19].

Reader-Dependent Functional Modulation: The m6A reader IGF2BP2 functions as a critical stabilizer for specific lncRNAs. In renal cell carcinoma (RCC), IGF2BP2 recognizes METTL14-deposited m6A sites on the lncRNA LHX1-DT and promotes its stability. The stabilized LHX1-DT then acts as a competing endogenous RNA (ceRNA) that sponges miR-590-5p, a miRNA that downregulates PDCD4; sequestering it relieves PDCD4 repression and ultimately inhibits RCC cell proliferation and invasion [20].

Writer-Mediated Regulation: The m6A methyltransferase complex, particularly METTL3, serves as a crucial mediator in lncRNA regulation. Research demonstrates that HNRNPA2B1 functions as a critical mediator of METTL3-dependent m6A modification, modulating NORHA expression and activity in cellular systems [19].

The following diagram illustrates these core regulatory pathways:

Experimental Protocols: Key Methodologies for Investigating m6A-lncRNA Interactions

Transcriptome-Wide m6A Site Mapping

Purpose: To identify specific m6A modification sites on lncRNAs at a transcriptome-wide scale [19].

Protocol:

- RNA Isolation and Fragmentation: Extract high-quality total RNA using TRIzol reagent. Fragment RNA to 100-150 nucleotides using RNA fragmentation buffer.

- Immunoprecipitation: Incubate fragmented RNA with anti-m6A antibody (5μg) and protein A/G magnetic beads in IP buffer (150mM NaCl, 10mM Tris-HCl, pH 7.4, 0.1% NP-40) for 2 hours at 4°C.

- Washing and Elution: Wash beads 3 times with IP buffer. Elute m6A-modified RNA using elution buffer (6.7mM N6-methyladenosine in IP buffer).

- Library Preparation and Sequencing: Construct libraries using the eluted RNA with standard kits. Sequence on Illumina platform (150bp paired-end).

- Bioinformatic Analysis: Map reads to reference genome. Call m6A peaks using specialized software (e.g., exomePeak, MeTPeak). Validate specific sites through motif analysis.
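For the motif check in the final step, a common sanity test is to look for the m6A consensus motif DRACH (D = A/G/U, R = A/G, H = A/C/U) inside called peaks. The protocol above does not name a motif, so the use of DRACH, the function name, and the example sequence here are assumptions of this sketch.

```python
import re

# DRACH consensus: D = A/G/U, R = A/G, A = candidate m6A, C, H = A/C/U.
# The lookahead lets overlapping motifs be reported as separate hits.
DRACH = re.compile(r"(?=([AGU][AG]AC[ACU]))")

def drach_sites(seq):
    """Return (0-based index of the central A, motif) for each DRACH match.

    Accepts RNA or DNA letters; T is converted to U before matching.
    """
    rna = seq.upper().replace("T", "U")
    return [(m.start() + 2, m.group(1)) for m in DRACH.finditer(rna)]

# Hypothetical peak sequence, for illustration only.
sites = drach_sites("CCGGACUAAGGACAUU")
print(sites)  # [(4, 'GGACU'), (11, 'GGACA')]
```

A peak set in which few summits fall near a DRACH match may point to nonspecific enrichment and is worth re-examining before downstream analysis.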

RNA Immunoprecipitation (RIP) for Reader-lncRNA Binding

Purpose: To validate direct binding between m6A reader proteins and specific lncRNAs [19] [20].

Protocol:

- Cell Lysis: Lyse cells in RIP lysis buffer (150mM KCl, 25mM Tris pH 7.4, 5mM EDTA, 0.5mM DTT, 0.5% NP-40) supplemented with protease inhibitors and RNase inhibitors.

- Antibody Binding: Incubate 5μg of target antibody (e.g., anti-HNRNPA2B1, anti-IGF2BP2) or control IgG with protein A/G magnetic beads for 30 minutes at room temperature.

- Immunoprecipitation: Incubate antibody-bound beads with cell lysate (containing 500μg total protein) for 4 hours at 4°C with rotation.

- Washing: Wash beads 5 times with RIP wash buffer.

- RNA Extraction: Isolate bound RNA using TRIzol LS reagent. Treat with DNase I to remove genomic DNA contamination.

- Analysis: Analyze target lncRNA enrichment by RT-qPCR or RNA sequencing.
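When enrichment is read out by RT-qPCR, two summary values are commonly reported: percent of input and fold enrichment over the IgG control. The sketch below shows the arithmetic, assuming roughly 100% PCR efficiency (a doubling per cycle); the Ct values and the 10% input fraction are illustrative, not taken from the protocol.

```python
import math

def percent_input(ct_ip, ct_input, input_fraction):
    """Percent of the starting lysate RNA recovered in the IP.

    input_fraction is the share of lysate set aside as input
    (for example 0.1 for a 10% input sample).
    """
    # Shift the input Ct to what 100% of the lysate would have produced.
    ct_input_adjusted = ct_input - math.log2(1 / input_fraction)
    return 100 * 2 ** (ct_input_adjusted - ct_ip)

def fold_over_igg(ct_ip, ct_igg):
    """Fold enrichment of the specific antibody IP relative to control IgG."""
    return 2 ** (ct_igg - ct_ip)

# Illustrative Ct values for one target lncRNA.
pct = percent_input(ct_ip=25.0, ct_input=25.0, input_fraction=0.1)
fold = fold_over_igg(ct_ip=25.0, ct_igg=30.0)
print(round(pct, 2), fold)
```

In this example the IP gives the same Ct as a 10% input, so recovery is 10% of input, and a 5-cycle gap to IgG corresponds to a 32-fold enrichment.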

Luciferase Reporter Assays for Functional Validation

Purpose: To investigate how m6A modifications affect lncRNA function and interaction networks [20].

Protocol:

- Vector Construction: Clone wild-type and m6A site-mutant lncRNA sequences into psiCHECK-2 vector downstream of Renilla luciferase gene.

- Cell Transfection: Seed 293T cells or another relevant cell line in 24-well plates. Transfect with 500ng of reporter construct using Lipofectamine 3000.

- Dual-Luciferase Assay: After 48 hours, harvest cells and measure Firefly and Renilla luciferase activities using Dual-Luciferase Reporter Assay System.

- Data Analysis: Normalize Renilla luciferase activity to Firefly luciferase activity. Compare relative luciferase activity between wild-type and mutant constructs.

Troubleshooting Common Experimental Challenges

FAQ: Addressing Specific Technical Issues

Q: Why do I observe high background in my m6A-RIP experiments? A: High background often results from antibody nonspecificity or insufficient washing. Titrate your anti-m6A antibody to determine optimal concentration (typically 2-5μg). Increase wash stringency by adding high-salt washes (300mM NaCl). Include proper controls: IgG control, RNA input control, and beads-only control. Validate antibody specificity with synthetic m6A-modified and unmodified RNA oligos [19].

Q: How can I distinguish direct stabilization effects from indirect transcriptional regulation? A: Perform transcriptional inhibition assays using actinomycin D (2-5μg/mL) at multiple time points (0, 2, 4, 8 hours) after reader protein knockdown/overexpression. Measure lncRNA half-life by RT-qPCR. Combine with m6A site mutation in luciferase reporter constructs to confirm direct effects [19] [20].
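The half-life calculation from an actinomycin D time course can be sketched as follows. It assumes first-order decay, so that the natural log of the remaining RNA fraction falls linearly with time, and it fits the slope by ordinary least squares. The function name and the two toy time courses are hypothetical.

```python
import math

def half_life_hours(times, remaining):
    """Fit ln(remaining) = a - k * t by least squares; return ln(2) / k.

    remaining holds the RNA level at each time point relative to t = 0.
    """
    logs = [math.log(v) for v in remaining]
    n = len(times)
    mean_t = sum(times) / n
    mean_l = sum(logs) / n
    slope = (
        sum((t - mean_t) * (l - mean_l) for t, l in zip(times, logs))
        / sum((t - mean_t) ** 2 for t in times)
    )
    k = -slope  # decay rate constant per hour
    if k <= 0:
        raise ValueError("no decay detected; half-life is undefined")
    return math.log(2) / k

times = [0, 2, 4, 8]  # hours after actinomycin D addition
# Toy data generated from exact 4 h and 2 h half-lives.
control = [0.5 ** (t / 4) for t in times]
knockdown = [0.5 ** (t / 2) for t in times]

t_half_control = half_life_hours(times, control)
t_half_knockdown = half_life_hours(times, knockdown)
print(round(t_half_control, 2), round(t_half_knockdown, 2))
```

A shorter half-life after reader knockdown, as in this toy comparison, would be consistent with the reader stabilizing the transcript, although it does not by itself exclude indirect effects.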

Q: What approaches can validate functional outcomes of specific m6A-lncRNA axes? A: Employ multiple complementary approaches: (1) CRISPR/Cas9-mediated m6A site editing; (2) Reader protein knockdown via siRNA/shRNA; (3) Rescue experiments with wild-type and m6A site-mutant lncRNAs; (4) Functional assays relevant to your biological context (e.g., apoptosis, proliferation, migration) [19] [20].

Troubleshooting Guide for Common Problems

Table: Troubleshooting m6A-lncRNA Experiments

| Problem | Potential Causes | Solutions |

|---|---|---|

| Poor RIP enrichment | Inadequate antibody specificity | Validate antibody with positive controls; try different lots |

| Poor RIP enrichment | Insufficient crosslinking | Optimize UV crosslinking energy (typically 150-400 mJ/cm²) |

| Poor RIP enrichment | RNA degradation | Use fresh RNase inhibitors; work on ice |

| Inconsistent luciferase results | m6A site context missing | Include longer genomic fragments (>500bp) around sites |

| Inconsistent luciferase results | Transfection efficiency | Normalize with co-transfected control; use stable lines |

| Inconsistent luciferase results | Cell-type specific effects | Verify reader/writer expression in your cell model |

| High variability in RNA stability assays | Uneven actinomycin D treatment | Pre-warm media; use fresh stock solutions |

| High variability in RNA stability assays | Inaccurate time points | Strictly adhere to collection times; include technical replicates |

| Poor separation in risk models | Overfitting | Implement cross-validation; use multiple datasets |

| Poor separation in risk models | Biological heterogeneity | Increase sample size; validate with orthogonal methods |

Research Reagent Solutions: Essential Tools for m6A-lncRNA Studies

Table: Key Research Reagents for m6A-lncRNA Investigations

| Reagent Category | Specific Examples | Function/Application |

|---|---|---|

| m6A Writers | METTL3/METTL14 expression plasmids | Gain-of-function studies; rescue experiments |

| m6A Erasers | FTO, ALKBH5 inhibitors (e.g., FB23, IOX3) | Increase m6A levels; assess modification effects |

| m6A Readers | HNRNPA2B1, IGF2BP2 antibodies | RIP assays; Western blot; immunohistochemistry |

| Validation Tools | Anti-m6A antibodies (Abcam, Synaptic Systems) | meRIP; dot blot; immunofluorescence |

| Validation Tools | Luciferase reporter vectors (psiCHECK-2) | Functional validation of m6A sites |

| Critical Assays | Actinomycin D | RNA stability/half-life measurements |

| Critical Assays | Ribosome profiling kits | Translation efficiency assessment |

| Bioinformatic Tools | exomePeak, MeTPeak | m6A peak calling from sequencing data |

| Bioinformatic Tools | SRAMP | m6A site prediction in lncRNAs |

Preventing Overfitting in m6A-lncRNA Signature Development

The development of prognostic signatures based on m6A-related lncRNAs requires rigorous methodological approaches to prevent overfitting and ensure clinical applicability.

Cross-Validation Strategies: Combine internal cross-validation with validation in independent datasets. For example, in pancreatic ductal adenocarcinoma research, signatures developed in TCGA datasets were validated in independent ICGC cohorts [21]. Similarly, colorectal cancer prognostic models were validated through both internal cross-validation and time-dependent assessment of 1-, 3-, and 5-year predictions [17].

Statistical Regularization Methods: Employ least absolute shrinkage and selection operator (LASSO) Cox regression to minimize overfitting risk. This approach penalizes model complexity while selecting the most informative m6A-related lncRNAs for prognostic signatures [17] [21]. The optimal penalty parameter should be estimated through tenfold cross-validation.
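Once cross-validation has produced a mean deviance and standard error for each candidate penalty, choosing the penalty is simple arithmetic. The sketch below picks both the minimum-deviance value and the more parsimonious one-standard-error value, analogous to the `lambda.min` and `lambda.1se` values reported by `cv.glmnet` in the R package glmnet. The function name and the numbers are illustrative assumptions, not results from any study.

```python
def select_lambda(lambdas, cv_mean, cv_se):
    """Return (lambda_min, lambda_1se) from cross-validated deviance.

    lambda_min minimizes the mean CV deviance; lambda_1se is the largest
    penalty whose mean deviance is within one SE of that minimum.
    """
    best = min(range(len(lambdas)), key=lambda i: cv_mean[i])
    threshold = cv_mean[best] + cv_se[best]
    lambda_1se = max(
        lam for lam, mean in zip(lambdas, cv_mean) if mean <= threshold
    )
    return lambdas[best], lambda_1se

# Illustrative tenfold CV summary (partial likelihood deviance per lambda).
lambdas = [0.20, 0.10, 0.05, 0.02, 0.01]
cv_mean = [12.9, 12.3, 12.0, 12.1, 12.4]
cv_se = [0.4, 0.4, 0.4, 0.4, 0.4]

lambda_min, lambda_1se = select_lambda(lambdas, cv_mean, cv_se)
print(lambda_min, lambda_1se)  # 0.05 0.1
```

A larger penalty keeps fewer lncRNAs in the signature, so the one-standard-error choice trades a small loss in cross-validated fit for a simpler model.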

Clinical Applicability Assessment: Enhance model robustness by developing nomograms that integrate the m6A-lncRNA signature with conventional clinical parameters. These nomograms should demonstrate superior predictive accuracy compared to both the signature alone and traditional staging systems, as demonstrated in PDAC research [21].

The following diagram illustrates a robust workflow for developing validated m6A-lncRNA signatures:

Advanced Technical Considerations: Ribosome Association and Its Implications

Recent evidence reveals unexpected complexity in lncRNA regulation, particularly regarding ribosome association and its impact on stability:

Ribosome Engagement Effects: Ribosome association can either stabilize or destabilize lncRNAs through competing mechanisms. Protection from nucleases can increase stability, while ribosome-associated decay pathways (e.g., nonsense-mediated decay) may promote degradation. Ribosome profiling studies show that up to 70% of cytosolic lncRNAs interact with ribosomes in human cell lines, suggesting this is a widespread phenomenon [22].

Translation Coupling: The relationship between translation efficiency and RNA stability, partly explained by codon optimality, may extend to certain lncRNAs. In humans, codons with G or C at the third position (GC3) associate with increased transcript stability, while those with A or U at the third position (AU3) typically reduce stability [22].

Experimental Implications: When investigating lncRNA stability, consider potential ribosome association through ribosome profiling or polysome fractionation. The interaction between translation and lncRNA decay offers broad implications for RNA biology and provides new insights into lncRNA regulation in both cellular and disease contexts [22].

Frequently Asked Questions (FAQs)

Q1: What are the core components of the m6A modification machinery that interact with lncRNAs? The m6A modification process is governed by three classes of proteins often called "writers," "erasers," and "readers." Writers, such as the METTL3-METTL14-WTAP complex, VIRMA, and RBM15, install the m6A modification. Erasers, including FTO and ALKBH5, remove the modification. Readers, such as YTHDF1-3, YTHDC1-2, and IGF2BP1-3, recognize the m6A marks and determine the functional outcome on the target lncRNA, influencing its stability, splicing, transport, and translation [23] [24].

Q2: How can I prevent overfitting when building a prognostic m6A-related lncRNA signature? A widely used approach is least absolute shrinkage and selection operator (LASSO) Cox regression combined with 10-fold cross-validation. This approach penalizes model complexity, retaining only the lncRNAs with the strongest prognostic signal and thereby reducing the risk of modeling noise. It has been implemented in multiple studies to construct multi-lncRNA signatures [17] [11] [14].

Q3: What is a common workflow for identifying and validating m6A-related lncRNA signatures? A standard, validated workflow consists of the following stages [11] [14]:

- Data Acquisition: Obtain RNA-seq data and clinical information from public repositories like The Cancer Genome Atlas (TCGA).

- lncRNA Identification: Filter and extract the expression profiles of known lncRNAs.

- Correlation Analysis: Identify m6A-related lncRNAs by calculating correlation coefficients (e.g., Spearman or Pearson) between the expression of established m6A regulators and all lncRNAs.

- Prognostic Screening: Use univariate Cox regression to select lncRNAs significantly associated with patient survival.

- Signature Construction: Apply LASSO Cox regression to refine the lncRNA list and build a multi-lncRNA prognostic model.

- Model Validation: Validate the model's performance in an internal test set and, if possible, in independent external datasets (e.g., from GEO). Evaluate using Kaplan-Meier survival analysis and time-dependent Receiver Operating Characteristic (ROC) curves.
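The Kaplan-Meier evaluation named in the last stage can be illustrated with a minimal sketch of the estimator. The follow-up times and event indicators below are hypothetical, and a real analysis would normally use a dedicated survival library that also provides the log-rank test and confidence intervals.

```python
from collections import Counter

def kaplan_meier(times, events):
    """Return [(time, survival_probability)] at each distinct event time.

    times  -- follow-up time per patient
    events -- 1 if death was observed, 0 if the patient was censored
    """
    deaths = Counter(t for t, e in zip(times, events) if e == 1)
    exits = Counter(times)  # every patient leaves the risk set at their own time
    at_risk = len(times)
    survival = 1.0
    curve = []
    for t in sorted(exits):
        d = deaths.get(t, 0)
        if d:
            # Multiply by the conditional probability of surviving past t
            survival *= 1 - d / at_risk
            curve.append((t, survival))
        at_risk -= exits[t]
    return curve

# Hypothetical follow-up data (months) for two risk groups
high_risk = kaplan_meier([5, 8, 12, 12, 20], [1, 1, 1, 0, 1])
low_risk = kaplan_meier([10, 24, 30, 36, 40], [0, 1, 0, 0, 1])

for t, s in high_risk:
    print(f"high-risk: S({t}) = {s:.2f}")
```

Censored patients reduce the number at risk without lowering the curve, which is why the estimator is preferred over a simple proportion of survivors.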

Q4: Our lab identified an m6A-related lncRNA associated with drug resistance. What are the first steps to validate its functional role? Initial functional validation typically involves gain-of-function and loss-of-function experiments in relevant cell line models. Knockdown of the lncRNA using siRNAs or shRNAs in resistant cell lines is performed to see if it restores drug sensitivity. Conversely, overexpressing the lncRNA in sensitive cell lines can test if it confers resistance. The core mechanistic step is to determine if this function is dependent on m6A modification by knocking down key "writer" or "eraser" proteins (e.g., METTL3, FTO) and assessing if the lncRNA's effect is abolished [24].

Q5: How can an m6A-lncRNA signature inform treatment selection, particularly for immunotherapy? Risk scores derived from m6A-lncRNA signatures have been shown to correlate with the tumor immune microenvironment. Studies in colorectal and colon cancer have found that low-risk patients often exhibit stronger immune cell infiltration and higher expression of immune checkpoints like PD-1 and CTLA-4, suggesting they might be better candidates for immunotherapy. Furthermore, these models can predict sensitivity to specific chemotherapeutic and targeted drugs, helping to guide personalized therapy selection [17] [11].

Troubleshooting Guides

Issue 1: Poor Performance or Lack of Generalization in the Prognostic Model

| Potential Cause | Diagnostic Steps | Solution |

|---|---|---|

| Overfitting | The model performs well on the training data but poorly on the validation/test data. | Apply LASSO regression with 10-fold cross-validation during model construction. Ensure the number of lncRNAs in the signature is small relative to the number of patient samples [11]. |

| Batch Effects | Significant performance drop when applying the model to an external dataset from a different source. | Use batch effect correction algorithms (e.g., ComBat) when integrating datasets. Validate the model in multiple independent cohorts to ensure robustness [14]. |

| Incorrect Risk Stratification | The Kaplan-Meier curve does not show a significant separation between high- and low-risk groups. | Re-evaluate the correlation and Cox regression thresholds. Use the median risk score from the training set as the cutoff for the test set; do not recalculate the median in the test set [17] [14]. |

Issue 2: Difficulty in Establishing a Mechanistic Link Between an m6A-Modified lncRNA and Drug Resistance

| Potential Cause | Diagnostic Steps | Solution |

|---|---|---|

| Unclear m6A dependency | Knocking down the lncRNA has an effect, but it's unknown if m6A modification regulates this effect. | Perform MeRIP-qPCR to confirm that the lncRNA carries m6A, and RIP-qPCR to confirm that it is bound by m6A writers, erasers, or readers. Modulate m6A levels (e.g., knock down METTL3/FTO) and see if the lncRNA's stability and function change [24]. |

| Complex ceRNA Networks | The lncRNA may act as a sponge for multiple miRNAs, making it difficult to pinpoint the key pathway. | Construct a competing endogenous RNA (ceRNA) network bioinformatically. Validate key miRNA interactions using luciferase reporter assays. Focus on downstream pathways known to be involved in drug resistance (e.g., PI3K/AKT) [24] [14]. |

| Inadequate Cell Model | Using a drug-sensitive cell line to study resistance mechanisms. | Generate isogenic drug-resistant cell lines by long-term culture of sensitive parental cells in gradually increasing doses of the therapeutic agent (e.g., tyrosine kinase inhibitors). This models the development of clinical resistance [24]. |

Quantitative Evidence of m6A-lncRNA Axes in Cancer

The table below summarizes key evidence from recent studies documenting the role of specific m6A-lncRNA axes in cancer progression and therapy resistance.

| Cancer Type | m6A Regulator | lncRNA | Functional Role & Mechanism | Clinical/Experimental Evidence | Ref |

|---|---|---|---|---|---|

| Colorectal Cancer | Not Specified | LINC00543 | Part of an 8-lncRNA prognostic signature; linked to immune function, particularly type I interferon response. | AUC of prognostic model: 0.753 (1-year), 0.682 (3-year), 0.706 (5-year). High-risk group had poorer prognosis. | [17] |

| Colon Adenocarcinoma | Multiple | 12-lncRNA Signature | Risk model predicts prognosis, immunotherapy response, and drug sensitivity. | Model was an independent prognostic factor. Low-risk group showed more sensitivity to Afatinib, Metformin and better response to immunotherapy. | [11] |

| Ovarian Cancer | Multiple | 7-lncRNA Signature | Predicts patient prognosis; a related ceRNA network suggests mechanistic involvement in OC progression. | Validated in TCGA and two independent GEO datasets (GSE9891, GSE26193) and 60 clinical specimens. | [14] |

| Chronic Myeloid Leukemia | FTO | SENCR, PROX1-AS1, LINC00892 | FTO-mediated m6A hypomethylation stabilizes these lncRNAs, promoting TKI resistance via PI3K signaling (e.g., ITGA2, F2R). | Upregulated in TKI-resistant patients. Knockdown restored TKI sensitivity. PI3K inhibitor (Alpelisib) eradicated resistant cells in vivo. | [24] |

Experimental Protocols

Protocol 1: Constructing an m6A-Related lncRNA Prognostic Signature

This protocol is adapted from established methodologies used in multiple cancer studies [11] [14].

Data Collection:

- Download RNA sequencing (RNA-seq) data and corresponding clinical data (e.g., survival time, survival status, pathologic stage) for your cancer of interest from TCGA.

- Extract the expression matrix of a predefined set of m6A regulators (Writers: METTL3, METTL14, WTAP, etc.; Erasers: FTO, ALKBH5; Readers: YTHDF1-3, IGF2BP1-3, etc.).

Identification of m6A-Related lncRNAs:

- From the RNA-seq data, filter and extract the expression profile of all long non-coding RNAs.

- Perform correlation analysis (e.g., Pearson or Spearman) between each m6A regulator and each lncRNA across all tumor samples.

- Identify m6A-related lncRNAs using a strict threshold (commonly |correlation coefficient| > 0.4 and p-value < 0.001).

Prognostic Model Construction:

- Randomly divide the patient cohort into a training set (e.g., 70%) and a test set (e.g., 30%).

- On the training set, perform univariate Cox regression analysis on the m6A-related lncRNAs to identify those significantly associated with overall survival (p < 0.05).

- Subject the significant lncRNAs to LASSO Cox regression analysis with 10-fold cross-validation to select the most robust predictors and prevent overfitting.

- Use the results of the LASSO analysis to perform multivariate Cox regression to assign a coefficient to each selected lncRNA.

- Calculate the risk score for each patient using the formula:

Risk Score = ∑(Expr_lncRNA_i * Coef_lncRNA_i).

Model Validation:

- Apply the risk score formula to the test set and the entire TCGA cohort.

- Classify patients into high-risk and low-risk groups based on the median risk score from the training set.

- Use Kaplan-Meier survival analysis with the log-rank test to evaluate the difference in survival between the two groups.

- Assess the predictive accuracy of the model using time-dependent ROC curve analysis for 1, 3, and 5-year overall survival.
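The risk score calculation and the training-set median cutoff can be sketched as below. The coefficients and expression values are hypothetical placeholders; in practice the coefficients come from the multivariate Cox step.

```python
from statistics import median

def risk_score(expression, coefficients):
    """Sum of coefficient x expression over the signature lncRNAs."""
    return sum(coefficients[g] * expression[g] for g in coefficients)

# Hypothetical coefficients for a two-lncRNA signature
coefs = {"lncRNA_A": 0.8, "lncRNA_B": -0.5}

# Hypothetical expression profiles
train = [
    {"lncRNA_A": 1.0, "lncRNA_B": 2.0},
    {"lncRNA_A": 2.0, "lncRNA_B": 1.0},
    {"lncRNA_A": 3.0, "lncRNA_B": 0.5},
    {"lncRNA_A": 0.5, "lncRNA_B": 3.0},
]
test = [
    {"lncRNA_A": 2.5, "lncRNA_B": 1.0},
    {"lncRNA_A": 0.2, "lncRNA_B": 1.0},
]

# The cutoff is computed once, on the training set only
cutoff = median(risk_score(p, coefs) for p in train)

# The same cutoff is reused for the test set; it is never recomputed there
test_groups = ["high" if risk_score(p, coefs) > cutoff else "low" for p in test]
print(cutoff, test_groups)
```

Freezing both the coefficients and the cutoff before touching the test set keeps the test-set Kaplan-Meier comparison an honest estimate of how the signature generalizes.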

Protocol 2: Validating the Functional Role of an m6A-Modified lncRNA in Drug Resistance

This protocol is based on mechanistic studies in leukemia and other cancers [23] [24].

Establish Resistant Cell Lines:

- Generate TKI-resistant (e.g., imatinib, nilotinib) or other drug-resistant cell lines by chronically exposing sensitive parental cells (e.g., K562) to increasing concentrations of the drug over 8-10 weeks.

Confirm m6A Modification and Dependency:

- MeRIP-qPCR: Use an anti-m6A antibody to immunoprecipitate methylated RNA and detect the specific enrichment of your target lncRNA in the pull-down fraction compared to the input via qPCR.

- Functional Dependency: Knock down m6A erasers (e.g., FTO, ALKBH5) or writers (e.g., METTL3) in the resistant cells using siRNAs or shRNAs. Measure the expression and half-life of the target lncRNA via qPCR after transcriptional inhibition (e.g., Actinomycin D assay). If the lncRNA is stabilized by m6A erasure, FTO knockdown should decrease its stability.

Functional Rescue Experiments:

- Knock down the target lncRNA (e.g., SENCR, PROX1-AS1) in the resistant cell lines using specific shRNAs.

- Perform in vitro drug sensitivity assays (e.g., CCK-8, CellTiter-Glo) to measure the IC50 of the therapeutic drug. Successful knockdown should significantly lower the IC50, indicating re-sensitization.

- Rescue the phenotype by overexpressing a wild-type lncRNA construct and, as a control, an m6A site-mutant lncRNA construct. If the function is m6A-dependent, the mutant should not fully rescue the resistance.

Identify Downstream Pathway:

- Use RNA-seq or qPCR arrays after lncRNA knockdown to identify differentially expressed genes.

- Perform pathway enrichment analysis (e.g., KEGG, GO) to find activated signaling pathways (e.g., PI3K/AKT).

- Validate in vivo using a xenograft mouse model. Treat mice engrafted with resistant cells with a pathway-specific inhibitor (e.g., PI3K inhibitor Alpelisib) and monitor tumor growth and survival.

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function/Brief Explanation | Example Usage |

|---|---|---|

| TCGA & GEO Databases | Primary sources for high-throughput transcriptomic data and clinical information needed to discover and validate lncRNA signatures. | Used as the training and validation cohorts in nearly all cited studies [17] [11] [14]. |

| LASSO Cox Regression | A statistical method that performs both variable selection and regularization to enhance prediction accuracy and interpretability of the prognostic model. | Core algorithm for constructing the multi-lncRNA signatures while preventing overfitting [11] [14]. |

| shRNAs/siRNAs | Synthetic RNA molecules used for targeted knockdown of specific genes (e.g., lncRNAs, FTO, METTL3) in loss-of-function studies. | Used to knock down lncRNAs (SENCR, PROX1-AS1) and FTO to validate their functional role in TKI resistance [24]. |

| m6A Immunoprecipitation (MeRIP) | Technique that uses an anti-m6A antibody to pull down methylated RNA fragments, allowing for the identification and validation of m6A-modified transcripts like lncRNAs. | Essential for confirming the direct m6A modification on lncRNAs of interest (e.g., via MeRIP-qPCR) [24]. |

| TIDE Algorithm / Immunophenoscore (IPS) | Computational tools to predict tumor immune dysfunction and exclusion (TIDE) or quantify the immunogenicity of a tumor (IPS), correlating risk scores with immunotherapy response. | Used to predict which patient risk groups are more likely to respond to anti-PD-1/CTLA-4 immunotherapy [11]. |

| pRRophetic R Package | A computational tool that uses gene expression data to predict the chemosensitivity of tumor samples to a wide array of compounds based on the GDSC database. | Used to estimate IC50 values for drugs like Afatinib, Doxorubicin, and Olaparib in different risk groups [11]. |


Signaling Pathway and Workflow Diagrams

Diagram 1: FTO-lncRNA-PI3K Axis in Drug Resistance


Diagram 2: m6A-lncRNA Signature Development Workflow


N6-methyladenosine (m6A) RNA modification represents the most prevalent internal chemical alteration in eukaryotic mRNA and non-coding RNA, functioning as a reversible and dynamic regulator that critically influences RNA splicing, stability, export, translation, and degradation [25] [26]. This modification process is orchestrated by three classes of regulatory proteins: methyltransferases ("writers" such as METTL3, METTL14, and WTAP), demethylases ("erasers" including FTO and ALKBH5), and binding proteins ("readers" like YTHDF1-3 and IGF2BP1-3) that interpret the m6A marks [27] [11]. Long non-coding RNAs (lncRNAs) are transcripts exceeding 200 nucleotides without protein-coding capacity that regulate gene expression at epigenetic, transcriptional, and post-transcriptional levels [26]. The intersection of these fields has revealed that m6A modifications significantly influence lncRNA function, and conversely, lncRNAs can regulate m6A modifications, creating a complex regulatory network with profound implications for cancer biology [6] [28].

The integration of m6A and lncRNA research has opened new avenues for prognostic biomarker development across multiple cancer types. m6A-related lncRNA signatures have shown prognostic value for patient survival outcomes, tumor progression, and therapeutic responses [21] [11] [29]. These signatures typically comprise multiple m6A-related lncRNAs identified through comprehensive bioinformatics analyses of large cancer datasets, particularly from The Cancer Genome Atlas (TCGA), followed by experimental validation [27] [6] [30]. The prognostic utility of these signatures stems from their ability to capture critical aspects of tumor behavior, including immune microenvironment composition, metastatic potential, and drug resistance mechanisms, providing a more comprehensive prognostic picture than single biomarkers [25] [21].

Key Research Reagent Solutions

Table 1: Essential Research Reagents for m6A-lncRNA Investigations

| Reagent Category | Specific Examples | Research Application |

|---|---|---|

| m6A Regulator Antibodies | Anti-METTL3, Anti-METTL14, Anti-ALKBH5, Anti-YTHDF1 | Immunohistochemistry validation of m6A regulator expression in tumor tissues [6] |

| Cell Culture Reagents | DMEM with 10% FBS, penicillin-streptomycin | Maintenance of cancer cell lines (e.g., 143B osteosarcoma, HCT116 colon cancer) for functional studies [25] [28] |

| RNA Isolation & qRT-PCR Kits | Trizol RNA extraction, cDNA synthesis kits, SYBR Green Master Mix | Validation of lncRNA expression in patient tissues and cell lines [6] [28] [29] |

| Cell Proliferation Assays | Cell Counting Kit-8 (CCK-8) | Functional assessment of lncRNA effects on cancer cell growth [28] [29] |

| siRNA/shRNA Constructs | siRNA targeting UBA6-AS1, LINC00528 | Knockdown studies to investigate lncRNA functional mechanisms [28] [31] |

Experimental Protocols for Signature Development and Validation

Bioinformatics Identification of m6A-related lncRNAs

The standard workflow begins with data acquisition from TCGA and other databases such as GEO or ICGC, containing RNA-seq data and clinical information for specific cancer types [27] [21] [30]. Following data preprocessing and normalization, researchers identify m6A-related lncRNAs through co-expression analysis between known m6A regulators and all annotated lncRNAs. The typical parameters are an absolute Pearson correlation coefficient >0.4 and a p-value <0.001 [25] [26] [31]. For example, in a colon adenocarcinoma study, this approach identified 1,573 m6A-related lncRNAs from 14,142 annotated lncRNAs [28]. Univariate Cox regression analysis then screens these lncRNAs to identify those significantly associated with overall survival (p < 0.05), typically reducing the candidate pool to 5-30 prognostic lncRNAs [11] [30].

Prognostic Signature Construction Using LASSO Regression

To prevent overfitting, a critical concern in multi-gene signature development, researchers employ Least Absolute Shrinkage and Selection Operator (LASSO) Cox regression analysis [27] [11]. This technique penalizes the magnitude of regression coefficients, effectively reducing the number of lncRNAs in the final model while maintaining predictive power. The process involves 10-fold cross-validation to determine the optimal penalty parameter (λ) at the minimum partial likelihood deviance [21] [29]. A risk score formula is then generated: Risk score = (β1 × Exp1) + (β2 × Exp2) + ... + (βn × Expn), where β represents the regression coefficient and Exp represents the expression level of each included lncRNA [11] [29]. Patients are stratified into high-risk and low-risk groups using the median risk score as cutoff, and Kaplan-Meier analysis with log-rank testing validates the signature's prognostic value [6] [21].
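How the penalty removes lncRNAs from the model can be seen in a simplified setting. For a linear model with an orthonormal design, the LASSO solution is the soft-thresholded unpenalized coefficient; the Cox version is solved numerically but shows the same shrink-to-zero behavior. The sketch below is an illustration under that simplifying assumption, with hypothetical coefficients, not the Cox fitting procedure itself.

```python
def soft_threshold(beta, lam):
    """LASSO shrinkage of one coefficient under an orthonormal design."""
    if beta > lam:
        return beta - lam
    if beta < -lam:
        return beta + lam
    return 0.0  # coefficients smaller than the penalty are dropped entirely

# Hypothetical unpenalized coefficients for four candidate lncRNAs
unpenalized = {"lncRNA_A": 0.90, "lncRNA_B": -0.40, "lncRNA_C": 0.05, "lncRNA_D": -0.08}

for lam in (0.0, 0.1, 0.5):
    shrunk = {g: soft_threshold(b, lam) for g, b in unpenalized.items()}
    kept = [g for g, b in shrunk.items() if b != 0.0]
    print(f"lambda={lam}: kept {kept}")
```

Larger values of λ keep fewer lncRNAs, which is why λ is chosen by cross-validation rather than fixed in advance.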

Diagram 1: Comprehensive Workflow for Developing m6A-lncRNA Prognostic Signatures

Immune Microenvironment and Drug Sensitivity Analysis

The tumor immune microenvironment evaluation represents a crucial validation step for m6A-lncRNA signatures. Researchers employ multiple algorithms to assess immune characteristics, including ESTIMATE for calculating stromal, immune, and ESTIMATE scores [25] [26], CIBERSORT for quantifying 22 types of immune cell infiltration [25] [27], and single-sample GSEA (ssGSEA) for evaluating immune function and pathway activity [21] [29]. Additionally, the Tumor Immune Dysfunction and Exclusion (TIDE) algorithm predicts immunotherapy response, while tumor mutation burden (TMB) calculations offer complementary immunogenicity metrics [28] [29]. For drug sensitivity assessment, researchers utilize the R package "pRRophetic" to predict half-maximal inhibitory concentration (IC50) values for various chemotherapeutic agents based on the GDSC database, identifying potential therapeutic vulnerabilities associated with specific risk groups [21] [11] [29].

Technical FAQs and Troubleshooting Guides

Signature Development and Validation

Q1: What correlation thresholds are appropriate for identifying genuine m6A-related lncRNAs?

A: Most studies employ absolute Pearson correlation coefficients >0.4 with statistical significance (p < 0.001) [25] [26] [31]. However, when working with larger sample sizes, stricter thresholds (>0.5) may reduce false positives. For smaller datasets (n < 100), a threshold of >0.3 may be acceptable if supported by additional evidence from databases like M6A2Target that document validated m6A-lncRNA interactions [30]. Always perform sensitivity analyses to ensure results are robust across different threshold values.

Q2: How can we prevent overfitting when constructing multi-lncRNA signatures?

A: Implement multiple safeguards: (1) Utilize LASSO regression with 10-fold cross-validation to penalize model complexity [27] [21]; (2) Split datasets into training (typically 50-70%) and testing cohorts before model development [28] [29]; (3) Validate signatures in completely independent external cohorts from GEO or ICGC databases [21] [30]; (4) Apply bootstrapping methods (1000+ resamples) to assess model stability [27]; (5) Keep the events-per-variable ratio at 10 or above, ideally 10-15 outcome events per lncRNA in the signature [11].
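Two of these safeguards are simple enough to sketch directly: partitioning patients into cross-validation folds and checking the events-per-variable ratio. The helper names `k_fold_indices` and `events_per_variable` and the cohort sizes are assumptions made for illustration.

```python
import random

def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, val_idx) pairs; every sample is validated exactly once."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)  # fixed seed keeps the split reproducible
    folds = [idx[i::k] for i in range(k)]
    for i, val in enumerate(folds):
        train = [j for f_i, fold in enumerate(folds) if f_i != i for j in fold]
        yield train, val

def events_per_variable(n_events, n_features):
    """Number of outcome events available per lncRNA in the signature."""
    return n_events / n_features

# Hypothetical cohort: 25 patients split into 10 folds
splits = list(k_fold_indices(25, k=10))
print(len(splits), "folds; first validation fold:", sorted(splits[0][1]))

# Hypothetical signature: 120 deaths, 8 lncRNAs
epv = events_per_variable(120, 8)
print("EPV:", epv, "-> adequate" if epv >= 10 else "-> too many features")
```

Any step that looks at survival outcomes, including univariate screening, is best repeated inside each training fold so that validation patients never influence feature selection.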

Experimental Validation Challenges

Q3: What approaches effectively validate the functional roles of signature lncRNAs?

A: Employ a multi-method validation strategy: (1) Confirm differential expression in patient tissues versus normal controls using qRT-PCR [30] [28]; (2) Perform loss-of-function experiments using siRNA or shRNA knockdown in relevant cancer cell lines [28] [31]; (3) Assess phenotypic effects through functional assays (CCK-8 for proliferation, transwell for migration/invasion) [28] [29]; (4) Investigate molecular mechanisms via RNA immunoprecipitation to confirm m6A regulator interactions [25]; (5) Validate clinical relevance through immunohistochemistry of paired m6A regulators [6].

Q4: How do we address discrepancies between bioinformatics predictions and experimental results?

A: First, verify data quality and normalization methods in bioinformatics analyses. Second, ensure cell line models appropriately represent the cancer type studied. Third, consider tissue-specific and context-dependent functions of lncRNAs that may not be captured in vitro. Fourth, examine potential compensation mechanisms in knockout models that might mask phenotypes. Fifth, validate key bioinformatics predictions (e.g., immune cell infiltration) using orthogonal methods such as flow cytometry or multiplex immunohistochemistry on patient samples [25] [26].

Table 2: Performance of m6A-lncRNA Signatures Across Various Cancers

| Cancer Type | Number of lncRNAs in Signature | Predictive Performance (AUC) | Key Clinical Associations |

|---|---|---|---|

| Osteosarcoma [25] | 6 | 1-year AUC: 0.70-0.80 | Immune score, tumor purity, monocyte infiltration |

| Early-Stage Colorectal Cancer [27] | 5 | 3-year AUC: 0.754 (test cohort) | Response to camptothecin and cisplatin |

| Breast Cancer [6] | 6 | 3-year AUC: 0.70-0.85 | M2 macrophage infiltration, immune status |

| Pancreatic Ductal Adenocarcinoma [21] | 9 | 3-year AUC: 0.65-0.75 | Somatic mutations, immunocyte infiltration, chemosensitivity |

| Colon Adenocarcinoma [11] | 12 | 3-year AUC: 0.70-0.80 | Pathologic stage, immunotherapy response |

| Laryngeal Carcinoma [31] | 4 | 1-year AUC: 0.65-0.75 | Smoking status, immune microenvironment |

Integration with Clinical Practice and Therapeutic Development

The transition of m6A-lncRNA signatures from research tools to clinical applications requires addressing several methodological considerations. First, standardization of analytical protocols across institutions is essential, particularly for RNA extraction, library preparation, and normalization procedures in transcriptomic analyses [30] [28]. Second, the development of cost-effective targeted assays measuring only signature lncRNAs (rather than whole transcriptome sequencing) would enhance clinical feasibility. Third, establishing universal risk score cutoffs through multi-institutional consortia would improve reproducibility [21] [29].

For therapeutic development, m6A-lncRNA signatures offer two major advantages: they identify novel therapeutic targets and enable patient stratification for treatment selection [11] [28]. For instance, in colon adenocarcinoma, the lncRNA UBA6-AS1 was identified as a functional oncogene that promotes cell proliferation, representing a potential therapeutic target [28]. Similarly, in osteosarcoma, AC004812.2 was characterized as a protective factor that inhibits cancer cell proliferation and regulates m6A readers IGF2BP1 and YTHDF1 [25]. Beyond targeting specific lncRNAs, these signatures can guide treatment selection by predicting response to chemotherapy, immunotherapy, and targeted therapies [27] [11].

Diagram 2: Clinical Applications of m6A-lncRNA Signatures in Precision Oncology

The emerging evidence suggests that m6A-lncRNA signatures not only predict patient outcomes but also reflect fundamental biological processes driving cancer progression. Their association with tumor immune microenvironments [25] [26], cellular metabolism [11], and drug resistance mechanisms [21] [29] positions these signatures as valuable tools for advancing personalized cancer medicine. As validation studies accumulate and technological advances reduce implementation costs, m6A-lncRNA signatures are poised to become integral components of cancer diagnostics and therapeutic development pipelines.

Building Your Signature: A Step-by-Step Guide to Model Construction with Built-In Regularization

Frequently Asked Questions (FAQs)

Q1: What are the main challenges when downloading TCGA data for multi-omics analysis, and how can I overcome them?

The primary challenges include complex file naming conventions with 36-character opaque file IDs, difficulty linking disparate data types to individual case IDs, and the need to use multiple tools for a complete workflow. The TCGADownloadHelper pipeline addresses these by providing a streamlined approach that uses the GDC portal's cart system for file selection and the GDC Data Transfer Tool for downloads, while automatically replacing cryptic file names with human-readable case IDs using the GDC Sample Sheet [32] [33].

Q2: How can I ensure my m6A-related lncRNA prognostic model doesn't overfit the data?

Multiple strategies exist to prevent overfitting. Employ LASSO Cox regression analysis with 10-fold cross-validation to identify lncRNAs most correlated with overall survival while penalizing model complexity [11]. Additionally, validate your model in independent testing cohorts and use the median risk score from the training set to stratify patients in validation sets [11]. For robust performance assessment, calculate time-dependent ROC curves for 1-, 3-, and 5-year survival predictions [17].

Q3: What preprocessing steps are critical for GEO data before analysis?

For microarray data from GEO, essential preprocessing includes data aggregation, standardization, and quality control. For lncRNA arrays, the cited workflow applies 90th percentile normalization [34]. When selecting differentially expressed genes, apply thresholds such as a fold change ≥2 or ≤-2 together with a Benjamini-Hochberg adjusted p-value < 0.05, which controls the false discovery rate [34].

Q4: How can I integrate data from both TCGA and GEO databases effectively?

Successful integration requires careful batch effect removal between datasets. Apply a batch-correction method such as the ComBat algorithm from the sva R package to reduce batch effects between datasets. Ensure consistent gene annotation using resources like GENCODE and perform differential expression analysis with standardized thresholds (e.g., |log2FC| > 1 and adjusted p-value < 0.05) across all datasets [35] [36].

Troubleshooting Guides

Issue 1: Difficulty Managing TCGA Data Structure

Problem: Researchers struggle with TCGA's complex folder structure and cryptic filenames, making it difficult to correlate multi-modal data for individual patients [32] [33].

Solution:

Table: TCGA Data Types and File Formats

| Data Type | File Formats | Analysis Pipelines | Common Challenges |

|---|---|---|---|

| Whole-Genome Sequencing | BAM (alignments), VCF (variants) | BWA, CaVEMan, Pindel, BRASS | Large file sizes, complex variant calling outputs |

| RNA Sequencing | BAM, count files | STAR, Arriba | Linking expression to clinical outcomes |

| DNA Methylation | IDAT, processed matrices | Minfi, SeSAMe | Normalization, batch effects |

| Clinical Data | XML, TSV | Custom parsing | Inconsistent formatting across cancer types |

Implementation Steps:

- Install TCGADownloadHelper from GitHub and set up the conda environment using the provided yaml file [32]

- Create the required folder structure with subdirectories for clinicaldata, manifests, and samplesheets_prior

- Download your cart file (manifest), sample sheet, and clinical metadata from the GDC portal

- Configure the data/config.yaml file with your specific directory locations and file names

- Execute the pipeline to download data and automatically reorganize files with human-readable case IDs [32] [33]
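The renaming idea behind the last step can be illustrated independently of the pipeline. The sketch below is not TCGADownloadHelper's code; it assumes a tab-separated sample sheet with `File ID` and `Case ID` columns, and the identifiers shown are hypothetical.

```python
import csv
import io

def case_id_map(sample_sheet_tsv):
    """Map each download folder name (File ID) to its human-readable Case ID."""
    reader = csv.DictReader(io.StringIO(sample_sheet_tsv), delimiter="\t")
    return {row["File ID"]: row["Case ID"] for row in reader}

# Hypothetical sample sheet content (column names are an assumption)
rows = [
    ("File ID", "File Name", "Case ID"),
    ("11111111-2222-3333-4444-555555555555", "abc123.rna_seq.counts.tsv", "TCGA-XX-0001"),
    ("66666666-7777-8888-9999-000000000000", "def456.rna_seq.counts.tsv", "TCGA-XX-0002"),
]
SAMPLE_SHEET = "\n".join("\t".join(r) for r in rows)

mapping = case_id_map(SAMPLE_SHEET)
for file_id, case_id in mapping.items():
    print(f"{file_id} -> {case_id}")
```

With such a mapping in hand, expression files and clinical records can be joined on the case ID rather than on opaque 36-character file IDs.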

Issue 2: Preventing Overfitting in Prognostic Signature Development

Problem: Models with too many features perform well on training data but poorly on validation data, limiting clinical utility [17] [11].

Solution:

Table: Overfitting Prevention Techniques for Signature Development

| Technique | Implementation | Key Parameters | Validation Approach |

|---|---|---|---|

| LASSO Regression | glmnet package in R | Regularization parameter λ via 10-fold cross-validation | Monitor deviance vs lambda plot |

| Feature Selection | Univariate Cox PH regression + multivariate analysis | p<0.05 for initial screening | Consistency across training/test splits |

| Risk Stratification | Median risk score threshold | Median calculated in the training set and reused in validation sets | Kaplan-Meier analysis in validation sets |

| Performance Assessment | Time-dependent ROC curves | 1-, 3-, 5-year AUC values | Calibration plots, decision curve analysis |

Implementation Steps:

- Identify m6A-related lncRNAs through Spearman's correlation analysis (absolute correlation coefficient > 0.4 and p < 0.001) [11]

- Apply univariate Cox proportional hazards regression to identify prognostic lncRNAs (p < 0.05)

- Use LASSO Cox regression with 10-fold cross-validation to construct the final model with minimal features

- Calculate risk scores using the formula: Risk score = Σ(Coef_i × Exp_i), where Coef_i is the regression coefficient and Exp_i the expression level of the i-th lncRNA [11]

- Validate using independent datasets and assess clinical utility with decision curve analysis [35]

Issue 3: Handling GEO Data with Different Platforms and Normalization Methods

Problem: Inconsistent preprocessing of GEO data leads to irreproducible differential expression results [34] [35].

Solution:

Implementation Steps:

- Download raw data from GEO accession pages and note the platform used (e.g., GPL26963 for lncRNA arrays) [34]

- For microarray data, use Agilent Feature Extraction or appropriate platform-specific tools for data aggregation and normalization

- Apply 90th percentile normalization method for lncRNA array data [34]

- Use the "limma" package in R for differential expression analysis with thresholds of |log2FC| > 1 and adjusted p < 0.05 [35]

- Perform functional enrichment analysis using clusterProfiler for GO and KEGG pathways [35]
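The thresholding in the differential expression step can be sketched as follows. This shows only the Benjamini-Hochberg adjustment and the filter, not limma's moderated statistics; the gene labels, fold changes, and p-values are hypothetical.

```python
def benjamini_hochberg(p_values):
    """Return BH-adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted

# Hypothetical results: (name, log2 fold change, raw p-value)
results = [
    ("lncRNA_A", 2.1, 0.001),
    ("lncRNA_B", -1.5, 0.012),
    ("lncRNA_C", 0.3, 0.0005),
    ("lncRNA_D", 1.2, 0.20),
]

adj = benjamini_hochberg([p for _, _, p in results])
selected = [
    name
    for (name, log2fc, _), q in zip(results, adj)
    if abs(log2fc) > 1 and q < 0.05
]
print(selected)
```

Applying the same two thresholds to every dataset keeps the resulting gene lists comparable across cohorts.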

Experimental Protocols for Validation

Protocol 1: Experimental Validation of lncRNA Expression

Purpose: Validate computational predictions of key lncRNAs using patient samples [36].

Materials:

- TRIzol reagent for RNA extraction

- NanoDrop spectrophotometer for RNA quantification

- HiScript III RT SuperMix kit for cDNA synthesis

- ChamQ Universal SYBR qPCR Master Mix

- Primers for target lncRNAs (e.g., LINC01615, AC007998.3) [36]

Methods:

- Collect colorectal cancer (CRC) tumor tissues and matched adjacent normal tissues (ensure proper ethical approval)

- Extract total RNA using TRIzol reagent following manufacturer's protocol

- Measure RNA concentration and quality using NanoDrop

- Synthesize cDNA using reverse transcription kit

- Perform qPCR reactions with gene-specific primers and SYBR Green master mix

- Calculate relative expression using the 2^(−ΔΔCt) method with GAPDH as reference gene

- Analyze differences using two-sided Wilcoxon's rank-sum test [36]
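The relative expression calculation in the methods above reduces to a short piece of arithmetic. The Ct values in this sketch are hypothetical, and the function name `relative_expression` is illustrative.

```python
def relative_expression(ct_target_tumor, ct_ref_tumor, ct_target_normal, ct_ref_normal):
    """Fold change of tumor versus normal by the 2^(-ddCt) method."""
    d_ct_tumor = ct_target_tumor - ct_ref_tumor      # normalize to reference gene
    d_ct_normal = ct_target_normal - ct_ref_normal
    dd_ct = d_ct_tumor - d_ct_normal                 # tumor relative to normal
    return 2 ** -dd_ct

# Hypothetical Ct values: target lncRNA and GAPDH in paired tissues
fold_change = relative_expression(
    ct_target_tumor=24.0, ct_ref_tumor=18.0,
    ct_target_normal=27.0, ct_ref_normal=18.0,
)
print(f"tumor vs normal fold change: {fold_change}")
```

A fold change above 1 indicates higher lncRNA expression in tumor tissue; the calculation assumes roughly equal amplification efficiency for the target and reference primers.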

Protocol 2: Construction and Validation of Nomograms

Purpose: Develop clinically applicable tools for survival prediction [35].

Methods:

- Identify independent prognostic factors through univariate and multivariate Cox regression analyses

- Develop the nomogram using the rms package in R, integrating the risk model with clinical factors like pathologic stage

- Validate temporal discrimination via time-dependent ROC curves with AUC quantification

- Assess prediction accuracy using calibration curves

- Evaluate clinical utility through decision curve analysis to determine net benefit [35]

Workflow Diagrams

Data Integration and Analysis Workflow

m6A-LncRNA Signature Development Process

Research Reagent Solutions

Table: Essential Research Reagents and Materials

| Reagent/Material | Function/Purpose | Example Sources/Products |
|---|---|---|
| TRIzol Reagent | Total RNA extraction from tissues | Thermo Fisher Scientific [35] [37] |
| Agilent lncRNA Microarray | lncRNA expression profiling | Agilent-085982 Arraystar human lncRNA V5 microarray [34] |
| HiScript III RT SuperMix | cDNA synthesis from RNA | Vazyme Biotech [36] |
| ChamQ SYBR qPCR Master Mix | Quantitative PCR reactions | Vazyme Biotech [36] |
| GDC Data Transfer Tool | TCGA data download | NCI Genomic Data Commons [32] [33] |
| CIBERSORTx Algorithm | Immune cell infiltration estimation | CIBERSORTx web portal [35] [36] |

Frequently Asked Questions (FAQs)

Q1: What are the primary methods for identifying m6A-related lncRNAs from transcriptomic data? The most common method involves correlation analysis between lncRNA expression profiles and known m6A regulators using large-scale datasets like TCGA. Researchers typically calculate Spearman or Pearson correlation coefficients between lncRNAs and m6A regulators (writers, erasers, and readers), then apply statistical thresholds to identify significant associations. Studies often use an absolute correlation coefficient > 0.3-0.4 with a p-value < 0.05 as selection criteria [38] [39] [30].

Q2: What correlation thresholds are typically used to define m6A-related lncRNAs? Research protocols commonly employ the following thresholds:

Table: Standard Correlation Thresholds for m6A-lncRNA Identification

| Application | Correlation Coefficient | P-value | Reference |
|---|---|---|---|
| Initial screening | >0.2 or <-0.2 | <0.05 | [30] |
| Standard identification | >0.3 | <0.05 | [39] |
| Stringent selection | >0.4 | <0.05 | [38] |

Q3: How can I validate that my identified m6A-related lncRNAs are functionally significant? Beyond computational identification, experimental validation is crucial. This includes:

- Knockdown experiments: Assessing functional impact on proliferation, invasion, migration, and apoptosis in cancer cell lines (e.g., A549 for lung cancer) [38]

- Drug resistance assays: Evaluating impact on chemoresistance (e.g., cisplatin resistance) [38]

- Mechanistic studies: Examining effects on epithelial-mesenchymal transition (EMT) and key signaling pathways [38]

Q4: What are the common pitfalls in m6A-lncRNA signature development and how can I avoid them? Common issues include:

- Overfitting: When using multiple lncRNAs for prognostic signatures, employ LASSO Cox regression analysis to select the most relevant features [40] [30]

- Lack of validation: Always validate findings in independent cohorts (e.g., GEO datasets) [30]

- Insufficient statistical power: Ensure adequate sample sizes through power calculations

Troubleshooting Guides

Problem: Poor Correlation Between m6A Regulators and Candidate lncRNAs

Potential Causes and Solutions:

Insufficient data quality

- Solution: Verify RNA sequencing quality metrics and normalize expression data properly

- Check: Ensure adequate read depth and mapping quality for both coding and non-coding transcripts

Inappropriate correlation method

- Solution: Use Spearman correlation for non-normally distributed data rather than Pearson correlation

- Alternative: Apply weighted co-expression network analysis (WGCNA) for more robust association detection [40]

Tissue-specific effects

- Solution: Consider that m6A-lncRNA relationships may be tissue-specific; verify findings in context-appropriate datasets [41]

Problem: Prognostic Signature Performs Poorly in Validation Cohorts

Validation Strategy Table:

Table: Validation Approaches for m6A-lncRNA Signatures

| Validation Type | Method | Purpose | Acceptance Criteria |
|---|---|---|---|
| Internal validation | Bootstrap resampling or cross-validation | Assess model stability | Concordance index (C-index) >0.7 |
| External validation | Independent datasets (e.g., GEO) | Generalizability | AUC >0.65 in external sets |
| Clinical validation | Association with clinicopathological features | Clinical relevance | Significant correlation with known prognostic factors |
| Experimental validation | Functional assays in cell lines/animal models | Biological relevance | Reproducible phenotypic effects |

Implementation Steps:

- Perform LASSO regression to reduce overfitting [30]

- Apply time-dependent ROC analysis to assess predictive accuracy [38]

- Construct nomograms combining your signature with clinical parameters [38] [40]

- Validate in at least 2-3 independent cohorts with sufficient sample size (>100 patients) [30]
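The concordance index used as an internal-validation criterion can be computed directly from survival times, event indicators, and risk scores. The sketch below is a simplified version of Harrell's C-index that skips pairs with tied survival times; the function name and the example cohort are hypothetical.

```python
def concordance_index(times, events, risk):
    """Simplified Harrell's C-index.

    A pair (i, j) is comparable when patient i had an observed event
    strictly before patient j's follow-up time. The pair is concordant
    when patient i also has the higher risk score. Tied risk scores
    count as 0.5; tied survival times are skipped for simplicity.
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        if events[i] != 1:
            continue  # censored patients cannot anchor a comparable pair
        for j in range(n):
            if times[i] < times[j]:
                comparable += 1
                if risk[i] > risk[j]:
                    concordant += 1.0
                elif risk[i] == risk[j]:
                    concordant += 0.5
    return concordant / comparable


# Illustrative cohort only: follow-up in months, 1 = death, 0 = censored
times = [5, 8, 12, 20, 25]
events = [1, 1, 0, 1, 0]
risk = [2.5, 1.9, 1.0, 1.2, 1.5]

c_index = concordance_index(times, events, risk)
print(f"C-index: {c_index:.3f}")
```

A value of 0.5 corresponds to random ordering and 1.0 to perfect ranking. The same function should be applied unchanged to each external cohort, using coefficients frozen from the training data.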

Problem: Uncertain Functional Significance of Identified m6A-related lncRNAs

Experimental Workflow:

Key Experimental Considerations:

- Use appropriate cell lines relevant to your tissue of interest (e.g., A549 for lung cancer, patient-derived cells when possible) [38]

- Include both normal and cancer cells for comparison where feasible [38]

- Assess multiple functional endpoints (proliferation, invasion, migration, apoptosis, drug resistance) [38]

- Examine effects on relevant signaling pathways through gene set enrichment analysis (GSEA) [38]

The Scientist's Toolkit: Research Reagent Solutions

Table: Essential Reagents for m6A-lncRNA Research

| Reagent Type | Specific Examples | Function/Application |
|---|---|---|
| m6A Regulator Targets | METTL3/METTL14 antibodies, FTO/ALKBH5 inhibitors | Writer/eraser manipulation and detection |
| Cell Lines | A549 (lung), patient-derived glioblastoma cells | Functional validation in disease-relevant models [38] [41] |
| Analysis Tools | CIBERSORT, DESeq2, glmnet, survival R packages | Immune infiltration, differential expression, LASSO regression, survival analysis [38] [30] |
| Validation Reagents | siRNA/shRNA constructs, cisplatin chemotherapy | Functional assessment and drug resistance evaluation [38] |
| Sequencing Methods | MeRIP-seq, miCLIP, direct RNA sequencing | m6A modification mapping at various resolutions [42] [43] |

Experimental Protocols

Protocol 1: Identification of m6A-Related lncRNAs from TCGA Data

Data Acquisition

Expression Correlation Analysis

- Calculate Spearman correlation coefficients between all lncRNAs and m6A regulators

- Apply filtration threshold (absolute correlation coefficient >0.3, p-value <0.05)

- Identify m6A-related lncRNAs meeting these criteria [39]
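The correlation filter can be sketched as follows. This is a minimal, dependency-free illustration and not the pipeline used in the cited studies: it assumes a permutation test in place of the asymptotic p-value a statistics package would report, and the names `m6a_related`, `lnc_A`, and `lnc_B` as well as all expression values are hypothetical.

```python
import random


def ranks(values):
    """Average ranks (1-based); tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman(x, y):
    """Spearman's rho is the Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def permutation_p(x, y, n_perm=1000, seed=0):
    """Two-sided permutation p-value (assumption: replaces the asymptotic test)."""
    rng = random.Random(seed)
    observed = abs(spearman(x, y))
    shuffled = list(y)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if abs(spearman(x, shuffled)) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)


def m6a_related(regulator, lncrnas, min_abs_rho=0.3, alpha=0.05):
    """Keep lncRNAs with |rho| > min_abs_rho and p < alpha against one regulator."""
    return [name for name, expr in lncrnas.items()
            if abs(spearman(regulator, expr)) > min_abs_rho
            and permutation_p(regulator, expr) < alpha]


# Illustrative expression values only (8 samples)
regulator = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
lncrnas = {
    "lnc_A": [2.1, 2.9, 4.2, 4.8, 6.1, 7.3, 7.9, 9.2],
    "lnc_B": [5.0, 1.0, 4.0, 8.0, 2.0, 7.0, 3.0, 6.0],
}
selected = m6a_related(regulator, lncrnas)
print(selected)
```

In a real analysis the loop runs over every regulator-lncRNA pair, so the number of tests is large and a multiple-testing correction is worth considering alongside the fixed p-value cutoff.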

Survival Analysis

- Perform univariate Cox regression analysis to identify prognostic m6A-related lncRNAs

- Use significant lncRNAs in multivariate Cox regression to establish risk scores [38]
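Once the multivariate Cox coefficients are available, the risk score is typically a linear combination of coefficient and expression value for each lncRNA. The sketch below assumes that form and a median cutoff for the high/low split, which is a common convention but not stated in this protocol; the coefficients, lncRNA names, and patient values are hypothetical.

```python
from statistics import median


def risk_scores(expression, coefficients):
    """Linear predictor per patient: sum of coefficient * expression."""
    return {
        patient: sum(coefficients[g] * values[g] for g in coefficients)
        for patient, values in expression.items()
    }


def split_by_median(scores):
    """Label patients 'high' or 'low' risk (assumption: median cutoff)."""
    cutoff = median(scores.values())
    return {p: ("high" if s > cutoff else "low") for p, s in scores.items()}


# Hypothetical coefficients and expression values for illustration
coefficients = {"lncRNA_1": 0.5, "lncRNA_2": -0.25}
expression = {
    "P1": {"lncRNA_1": 2.0, "lncRNA_2": 4.0},
    "P2": {"lncRNA_1": 4.0, "lncRNA_2": 0.0},
    "P3": {"lncRNA_1": 2.0, "lncRNA_2": 0.0},
    "P4": {"lncRNA_1": 8.0, "lncRNA_2": 4.0},
}

scores = risk_scores(expression, coefficients)
groups = split_by_median(scores)
print(scores, groups)
```

To avoid optimistic bias, the coefficients and the cutoff should both be fixed on the training cohort and then applied unchanged to validation cohorts.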

Protocol 2: Functional Validation of m6A-Related lncRNAs

Expression Validation

Functional Assays

- Transfect appropriate cell lines with siRNA or shRNA targeting candidate lncRNAs

- Assess proliferation (MTT assay), invasion (Transwell), migration (wound healing)

- Evaluate apoptosis (Annexin V staining) and drug sensitivity (e.g., to cisplatin) [38]

This technical support guide provides comprehensive methodologies for identifying, validating, and troubleshooting m6A-related lncRNA research, with specific emphasis on preventing overfitting through appropriate statistical methods and validation frameworks.

In high-dimensional biological research, such as the development of m6A-related lncRNA signatures for cancer prognosis, the number of predictor variables (genes, lncRNAs) often far exceeds the number of observations (patient samples). This n << p scenario makes conventional statistical methods prone to overfitting, where models perform well on training data but fail to generalize to new datasets. LASSO (Least Absolute Shrinkage and Selection Operator) Cox regression addresses this challenge by performing automatic variable selection while simultaneously preventing overfitting through regularization. This technical guide provides troubleshooting and methodological support for researchers implementing LASSO Cox regression in their genomic signature development workflows.

Frequently Asked Questions (FAQs)

Q1: What is the primary advantage of using LASSO Cox regression for high-dimensional survival data?

LASSO Cox regression combines the Cox proportional hazards model with L1 regularization to perform automatic variable selection in survival analysis. Its key advantages include:

- Automatic Variable Selection: Shrinks coefficients of less important variables to exactly zero, effectively selecting only the most relevant features [44].

- Handles High-Dimensional Data: Functions effectively even when the number of variables approaches or exceeds the sample size (p ≥ n) [44] [45].

- Prevents Overfitting: Regularization through penalty parameters improves model generalizability to new data [46].

- Clinical Interpretability: Produces sparse models with selected key variables, facilitating biological interpretation [44].
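The exact-zero behavior of the L1 penalty can be illustrated with the soft-thresholding operator, the update that coordinate-descent solvers apply to each coefficient. The sketch below is a simplified view that treats each standardized predictor independently; it is not a Cox fit, and the coefficient values and lncRNA names are hypothetical.

```python
def soft_threshold(z, lam):
    """S(z, lam) = sign(z) * max(|z| - lam, 0)."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


# Hypothetical unpenalized coefficients for three standardized predictors
unpenalized = {"lncRNA_1": 0.80, "lncRNA_2": -0.35, "lncRNA_3": 0.05}

selected_by_lambda = {}
for lam in (0.0, 0.1, 0.5, 1.0):
    shrunk = {name: soft_threshold(beta, lam) for name, beta in unpenalized.items()}
    # Features whose coefficient survives the penalty are "selected"
    selected_by_lambda[lam] = [name for name, beta in shrunk.items() if beta != 0.0]
    print(lam, shrunk)
```

As λ grows, weaker predictors drop out first, and a sufficiently large λ removes every predictor, which is why an overly stringent penalty yields an empty model.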

Q2: Why does my LASSO Cox model select zero variables, and how can I address this?

A model selecting zero variables indicates that at the chosen lambda value, all coefficients are shrunk to zero. This commonly occurs when:

- Insufficient Predictive Signal: Your predictor variables may not contain meaningful information about the survival outcome [47].

- Overly Stringent Lambda: The penalty parameter λ may be too large, forcing all coefficients to zero [46].

- Suboptimal Evaluation Metric: Classification error is designed for categorical outcomes and is a poor cross-validation metric for censored survival data [47].

Troubleshooting Steps:

- Verify Variable Standardization: LASSO requires standardized predictors; ensure you're using the default `standardize = TRUE` in `glmnet` [47].

- Adjust Cross-Validation Metric: Switch from `type.measure = "class"` to `type.measure = "deviance"`, or use a concordance-based metric such as `type.measure = "C"`, the AUC analogue that `cv.glmnet` offers for Cox models [47].

- Explore Lambda Values: Examine the full cross-validation results using `plot(cv.modelfitted)` to identify where the error curve minimizes.

- Check Event Frequency: Ensure you have adequate events (at least 5-10 events per candidate predictor) for reliable estimation [44].

Q3: How does cross-validation work in LASSO Cox regression, and why is it crucial?

Cross-validation (CV) is essential for determining the optimal regularization parameter (λ) and estimating model performance without overfitting. The process involves:

- K-Fold Cross-Validation: Data is partitioned into k subsets (typically k=10), with each subset serving as a validation set while the remaining k-1 subsets are used for training [44] [46].

- Lambda Selection: CV identifies the λ value that minimizes prediction error (lambda.min) or the most parsimonious model within one standard error of the minimum (lambda.1se) [44].

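- Performance Estimation: Held-out folds estimate how the model will perform on new, unseen data. The estimate is close to unbiased only when λ is tuned in a separate inner loop, so in a nested design the outer CV loop is the one to report [45].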

For high-dimensional genomic studies, nested (double) cross-validation is recommended, where an inner loop selects features and an outer loop estimates performance, providing more reliable generalizability estimates [45].
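The fold partitioning and the two λ selection rules can be sketched without any modeling library. The example below assumes the per-fold errors have already been computed by a survival model (for instance partial-likelihood deviance); the function names and all error values are hypothetical.

```python
import random
from statistics import mean, stdev


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle sample indices and deal them into k disjoint folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def select_lambdas(lambdas, fold_errors):
    """Return (lambda_min, lambda_1se).

    fold_errors[i] holds the per-fold CV errors obtained with lambdas[i].
    lambda_min minimizes the mean CV error; lambda_1se is the largest
    lambda whose mean error is within one standard error of that minimum.
    """
    means = [mean(errors) for errors in fold_errors]
    std_errors = [stdev(errors) / len(errors) ** 0.5 for errors in fold_errors]
    best = min(range(len(lambdas)), key=lambda i: means[i])
    limit = means[best] + std_errors[best]
    lambda_1se = max(lam for lam, m in zip(lambdas, means) if m <= limit)
    return lambdas[best], lambda_1se


folds = k_fold_indices(n_samples=20, k=10)

# Illustrative CV errors only (3 folds per lambda for brevity)
lambdas = [0.01, 0.05, 0.10, 0.20]
fold_errors = [
    [10.2, 10.8, 10.5],
    [9.8, 10.4, 10.1],
    [9.9, 10.5, 10.2],
    [10.7, 11.3, 11.0],
]
lambda_min, lambda_1se = select_lambdas(lambdas, fold_errors)
print(lambda_min, lambda_1se)
```

In a nested design, this selection runs inside each outer training split, and the outer held-out fold is touched only once to score the resulting model.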

Q4: What are the key differences between LASSO Cox and traditional Cox regression?

Table: Comparison between LASSO Cox and Traditional Cox Regression

| Feature | LASSO Cox Regression | Traditional Cox Regression |
|---|---|---|
| Variable Selection | Automatic via L1 penalty | Manual or stepwise selection |
| High-Dimensional Data | Handles p >> n scenarios | Fails when p ≥ n |
| Coefficient Estimation | Shrinks coefficients toward zero | Maximum likelihood estimation |
| Overfitting Risk | Reduced via regularization | High in high-dimensional settings |
| Model Interpretation | Sparse, parsimonious models | All variables retained in final model |
| Implementation | Requires tuning parameter (λ) selection | No tuning parameters needed |

Q5: How should I preprocess my data before applying LASSO Cox regression?

Proper data preprocessing is critical for reliable LASSO Cox results:

- Standardization: Ensure all predictor variables are standardized to have mean = 0 and variance = 1 before analysis, as LASSO is sensitive to variable scales [44] [46].

- Missing Data: Implement appropriate missing data handling (imputation or complete-case analysis) based on the missingness mechanism and proportion.

- Outlier Detection: Identify and address extreme outliers that might disproportionately influence results.

- Censoring Assurance: Verify that censoring is non-informative and appropriately documented in your survival data.
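The standardization step can be sketched as a scaler that is fitted on the training cohort and then reused for validation data, so that no information from the validation set enters preprocessing. The sketch assumes the population standard deviation is used for scaling; the function names and expression values are hypothetical.

```python
from statistics import mean, pstdev


def fit_scaler(train_columns):
    """Store the mean and standard deviation of each predictor (training data only)."""
    return {name: (mean(values), pstdev(values))
            for name, values in train_columns.items()}


def apply_scaler(columns, scaler):
    """Z-score each predictor with the stored training statistics.

    Note: a zero-variance predictor would cause a division by zero
    and should be removed beforehand.
    """
    return {
        name: [(x - scaler[name][0]) / scaler[name][1] for x in values]
        for name, values in columns.items()
    }


# Illustrative expression values only
train = {"lncRNA_1": [2.0, 4.0, 6.0, 8.0]}
validation = {"lncRNA_1": [5.0, 10.0]}

scaler = fit_scaler(train)
train_z = apply_scaler(train, scaler)
validation_z = apply_scaler(validation, scaler)
print(train_z, validation_z)
```

The validation values are scaled with the training mean and standard deviation, so they will not necessarily have mean 0 and variance 1, which is expected.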

Troubleshooting Common Experimental Issues

Problem 1: Unstable Feature Selection Across Samples

Symptoms: Different subsets of your data yield different selected features, indicating instability in the model.

Solutions:

- Implement repeated nested cross-validation to aggregate feature importance across multiple runs [45].

- Use `lambda.1se` instead of `lambda.min` for more conservative feature selection [44].

- Apply stability selection methods or bootstrap aggregation to identify consistently selected features.

- Consider the SurvRank algorithm, which weights features according to their performance across CV folds [45].
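Bootstrap aggregation of feature selection can be sketched as counting how often each feature is selected across resampled datasets. In the example below, `toy_selector` is a hypothetical stand-in for a LASSO Cox fit (it simply thresholds the correlation with a numeric outcome), and the 0.8 stability cutoff and the synthetic data are assumptions made for illustration.

```python
import random


def pearson(x, y):
    """Pearson correlation; returns 0.0 when either vector has no variance."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / (sxx * syy) ** 0.5


def toy_selector(rows, threshold=0.5):
    """Hypothetical stand-in for a LASSO Cox fit: keeps features whose
    absolute correlation with the outcome exceeds the threshold."""
    outcome = [row["outcome"] for row in rows]
    features = [key for key in rows[0] if key != "outcome"]
    return {f for f in features
            if abs(pearson([row[f] for row in rows], outcome)) > threshold}


def selection_frequency(rows, selector, n_boot=200, seed=0):
    """Fraction of bootstrap resamples in which each feature is selected."""
    rng = random.Random(seed)
    counts = {}
    for _ in range(n_boot):
        sample = [rng.choice(rows) for _ in rows]  # resample with replacement
        for feature in selector(sample):
            counts[feature] = counts.get(feature, 0) + 1
    return {feature: count / n_boot for feature, count in counts.items()}


# Synthetic data: 'signal' tracks the outcome, 'noise' alternates sign
rows = [
    {"outcome": float(i), "signal": 2.0 * i + (i % 3),
     "noise": 1.0 if i % 2 else -1.0}
    for i in range(30)
]
frequency = selection_frequency(rows, toy_selector)
stable = sorted(f for f, freq in frequency.items() if freq >= 0.8)
print(frequency, stable)
```

Replacing `toy_selector` with a function that fits the penalized model on each resample and returns the non-zero features gives the same frequency table for a real signature.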

Problem 2: Poor Model Performance on Validation Data

Symptoms: Your model shows good discrimination on training data (high C-index) but performs poorly on test data.

Solutions:

- Re-evaluate your predictor variables for biological relevance and measurement quality.

- Ensure strict separation between training and test sets during model development.

- Reduce the number of candidate predictors through pre-filtering using univariate methods, applied to the training data only so that the filter does not leak information from the test set.

- Check for systematic differences between your training and validation cohorts.