RFE-SMOTE Pipeline: Tackling Imbalanced Data in Chemical Research and Drug Discovery

This article provides a comprehensive guide for researchers and drug development professionals on implementing a pipeline combining Recursive Feature Elimination (RFE) and the Synthetic Minority Oversampling Technique (SMOTE) to address...

RFE-SMOTE Pipeline: Tackling Imbalanced Data in Chemical Research and Drug Discovery

Abstract

This article provides a comprehensive guide for researchers and drug development professionals on implementing a pipeline combining Recursive Feature Elimination (RFE) and the Synthetic Minority Oversampling Technique (SMOTE) to address the pervasive challenge of imbalanced chemical data. Covering foundational concepts, practical implementation, and advanced optimization, we explore how this methodology enhances model performance in critical areas such as molecular property prediction, drug discovery, and materials science. The article also presents a rigorous validation framework and comparative analysis against other techniques, offering actionable insights for building more robust, reliable, and generalizable predictive models in chemical and biomedical applications.

The Imbalanced Data Challenge in Chemistry: Why Your Models Fail and How to Fix Them

In the field of chemical research, the integrity and predictive power of machine learning (ML) models are heavily dependent on the quality and distribution of the underlying data. A pervasive challenge in this domain is the prevalence of imbalanced data, a phenomenon where certain classes of data are significantly underrepresented within a dataset [1]. In chemical datasets, this often manifests as a substantial overabundance of inactive compounds compared to active ones, or a much larger number of non-toxic substances than toxic ones [1] [2]. This imbalance poses a significant threat to the development of robust and reliable models, as standard ML algorithms, which often assume an even distribution of classes, tend to become biased toward the majority class. Consequently, models may achieve high overall accuracy by simply always predicting the majority class, while failing entirely to identify the critical minority class—such as a promising drug candidate or a toxic substance [1] [3]. This application note defines imbalanced data within the context of chemical research, quantifies its prevalence and impact, and provides detailed protocols for addressing this issue, with a specific focus on the RFE-SMOTE pipeline.

Prevalence and Quantitative Impact of Imbalance in Chemical Data

Imbalanced data is not an exception in chemical research; it is the norm. This skew in data distribution arises from natural molecular abundance biases, selection bias in experimental processes, and the fundamental reality that desirable outcomes (like highly active drug molecules) are often rare [1]. The following table summarizes the typical imbalance ratios encountered across various chemical research fields.

Table 1: Prevalence of Imbalanced Data in Key Chemical Research Areas

| Research Field | Nature of Imbalance | Reported Imbalance Ratio | Primary Source |

|---|---|---|---|

| Drug Discovery [1] [4] | Active vs. Inactive compounds in High-Throughput Screening (HTS) | Ranges from 1:10 to as high as 1:104 | PubChem Bioassays |

| Genotoxicity Prediction [2] | Genotoxic (Positive) vs. Non-genotoxic (Negative) compounds | ~1:14 (250 Positive vs. 3921 Negative after curation) | OECD TG 471 (Ames test) data from eChemPortal |

| Environmental Chemical Risk Assessment [5] | A bias toward environmental endpoint data over human health endpoint data | A 4:1 bias in keyword frequency was observed | Bibliometric analysis of 3150 peer-reviewed articles |

| Toxicology [2] | Toxic vs. Non-toxic compounds in various toxicity assays | Varies by endpoint, but generally skewed toward negatives | ToxCast database and other toxicity screening data |

The impact of these imbalances on model performance is profound and quantifiable. As shown in a study on AI-based drug discovery for infectious diseases, models trained on highly imbalanced HIV dataset (ratio 1:90) performed poorly, with Matthews Correlation Coefficient (MCC) values below zero (-0.04), indicating no better than random prediction [4]. After applying data-balancing techniques, the same models showed significant improvement in key metrics like Balanced Accuracy, Recall, and F1-score [4]. This demonstrates that without corrective measures, models built on imbalanced chemical data are likely to be ineffective and unreliable for real-world applications.

Experimental Protocols for Addressing Data Imbalance

Protocol 1: The RFE-SMOTE-XGBoost Pipeline for Predictive Modeling

This integrated protocol is designed to systematically handle imbalanced chemical datasets by combining feature selection with data balancing to build a high-performance predictive model, as demonstrated in spinal disease research [6].

I. Materials and Software

- Programming Environment: Python (v3.8+)

- Key Libraries: scikit-learn (for RFE, SMOTE), XGBoost, pandas, numpy

- Computational Resources: Standard desktop computer sufficient for datasets up to ~10,000 samples and ~1,000 features.

II. Procedure Workflow Diagram: RFE-SMOTE-XGBoost Pipeline

Step 1: Data Preprocessing and Feature Engineering

- Load the chemical dataset (e.g., structural descriptors, assay results).

- Handle missing values. For chemical data, a replacement with random low values has been shown to be effective [7].

- Split the dataset into training and testing subsets (e.g., 80/20 split). Crucially, apply all subsequent steps only to the training set to avoid data leakage.

Step 2: Recursive Feature Elimination (RFE)

- Select an estimator for RFE. A linear model such as Logistic Regression or a tree-based model like XGBoost can be used.

- Set the target number of features to select. This can be a fixed number (e.g., 20) or optimized via cross-validation.

- Fit the RFE selector on the training data:

Step 3: Data Balancing with SMOTE

- Apply the Synthetic Minority Over-sampling Technique (SMOTE) exclusively to the feature-selected training data.

- SMOTE generates synthetic samples for the minority class by interpolating between existing instances [1].

- The test set (

X_test_selected,y_test) remains untouched to reflect the real-world imbalance for unbiased evaluation.

Step 4: Model Training and Evaluation

- Train an XGBoost classifier on the balanced, feature-selected training data.

- Evaluate the final model's performance on the original, unaltered test set.

- Use metrics robust to imbalance: F1-score, Matthews Correlation Coefficient (MCC), Balanced Accuracy, Precision, and Recall [6] [4]. Do not rely on accuracy alone.

Protocol 2: Benchmarking Data Balancing Techniques

This protocol provides a standardized method for comparing the effectiveness of different data-balancing strategies on a specific imbalanced chemical dataset.

I. Materials and Software

- As in Protocol 1, with the addition of the

imbalanced-learnlibrary.

II. Procedure

Step 1: Baseline Establishment

- Train and evaluate your chosen ML models (e.g., Random Forest, XGBoost, SVM) on the original, unaltered imbalanced training set. This establishes a performance baseline.

Step 2: Application of Balancing Techniques

- Apply a suite of balancing techniques to the training data. Test a range of methods:

Step 3: Model Training and Comparative Analysis

- For each balanced training set generated in Step 2, train the same set of ML models.

- Evaluate all models on the same, original (unbalanced) test set.

- Compare the performance of all models across all balancing strategies using the robust metrics listed in Protocol 1.

Table 2: The Scientist's Toolkit: Key Reagents and Computational Tools

| Item Name | Function/Description | Application Context |

|---|---|---|

| SMOTE [1] | Synthetic Minority Over-sampling Technique. Generates new synthetic samples for the minority class to balance the dataset. | Drug discovery, materials science, genotoxicity prediction. |

| RFE (Recursive Feature Elimination) [6] | A feature selection method that recursively removes the least important features to build a model with optimal features. | High-dimensional chemical data (e.g., molecular descriptors, -omics data). |

| XGBoost [6] [5] | An optimized gradient boosting algorithm known for its speed and performance, particularly on structured data. | General-purpose predictive modeling in chemical and environmental research. |

| MACCS Keys / Morgan Fingerprints [2] | Molecular fingerprinting systems that encode the structure of a chemical compound into a bit string. | Representing chemical structures for QSAR and toxicity prediction models. |

| Sample Weight (SW) [2] | A cost-sensitive learning method that assigns higher weights to minority class samples during model training. | An alternative to resampling, useful when dataset size must be preserved. |

The prevalence of imbalanced data in chemical datasets presents a formidable challenge that, if unaddressed, severely limits the practical utility of machine learning models. The RFE-SMOTE-XGBoost pipeline represents a powerful, integrated solution that simultaneously tackles the "curse of dimensionality" through feature selection and the bias toward the majority class through data balancing [6]. The effectiveness of this approach is evidenced by its ability to achieve high accuracy (97.56%) and a low mean square error (0.1111) in complex classification tasks [6].

No single balancing technique is universally superior. The optimal strategy depends on the dataset's specific characteristics, including the degree of imbalance, the complexity of the feature space, and the algorithm used [4] [2]. For instance, while SMOTE is widely effective, RUS has been shown to outperform it in some highly imbalanced drug discovery datasets [4]. Therefore, the benchmarking protocol outlined herein is critical for identifying the best approach for a given problem. By systematically defining the problem, quantifying its impact, and providing detailed, actionable protocols, this application note equips researchers with the necessary tools to enhance the robustness, reliability, and predictive power of their machine learning models in chemical research.

Imbalanced data presents a significant challenge in chemical research, where the rarity of positive hits or specific material properties can bias machine learning (ML) models, limiting their predictive accuracy and real-world applicability [8]. This imbalance is a widespread issue across various chemical disciplines, from drug discovery to materials science, yet it remains inadequately addressed, often leading to models that fail to accurately predict underrepresented classes [8]. This application note details the common sources of this imbalance and provides a standardized protocol for implementing a Recursive Feature Elimination combined with Synthetic Minority Oversampling Technique (RFE-SMOTE) pipeline to mitigate these effects. The content is structured to provide researchers, scientists, and drug development professionals with practical methodologies and visual workflows to enhance the robustness of their predictive models.

In chemical research, imbalanced datasets frequently arise from intrinsic experimental and procedural constraints. The table below summarizes the primary sources and their impacts on model performance.

Table 1: Common Sources and Impacts of Data Imbalance in Chemical Research

| Research Domain | Source of Imbalance | Typical Imbalance Ratio | Impact on Model Performance |

|---|---|---|---|

| Drug Discovery [8] [9] [10] | High-throughput screening (HTS) where most compounds are inactive. | Can be extreme (e.g., 738 active vs. 356,551 inactive compounds) [9]. | Models are biased toward predicting inactivity; true active hits are missed. |

| Toxicology & Safety (e.g., DILI, hERG) [10] | Low incidence of adverse effects in experimental data. | Highly imbalanced (e.g., only 0.7–3.3% are frequent hitters) [10]. | Fails to identify compounds with toxicological liabilities, posing clinical risks. |

| Materials Science [8] | Rare discovery of materials with targeted properties (e.g., high conductivity, specific catalysis). | Varies, but often severe for novel material classes [8]. | Hampers the identification of promising new materials for design and production. |

| Clinical & Diagnostic Chemistry [7] [11] [12] | Low disease prevalence in patient cohorts or rare pathological grades. | ~5% in hormone-treated animal detection [7]; common in medical datasets [11]. | Low recall for minority class; poor diagnostic capability for the condition of interest. |

The RFE-SMOTE Pipeline: An Integrated Solution

The RFE-SMOTE pipeline synergistically combines feature selection and data balancing to address class imbalance. Recursive Feature Elimination (RFE) enhances model performance and interpretability by iteratively removing the least important features, leaving only the most informative predictors [11] [13]. Subsequently, the Synthetic Minority Oversampling Technique (SMOTE) generates synthetic examples for the minority class by interpolating between existing minority instances in feature space, thus providing the model with a more balanced dataset to learn from [14]. This combination prevents models from being overwhelmed by redundant features and biased toward the majority class.

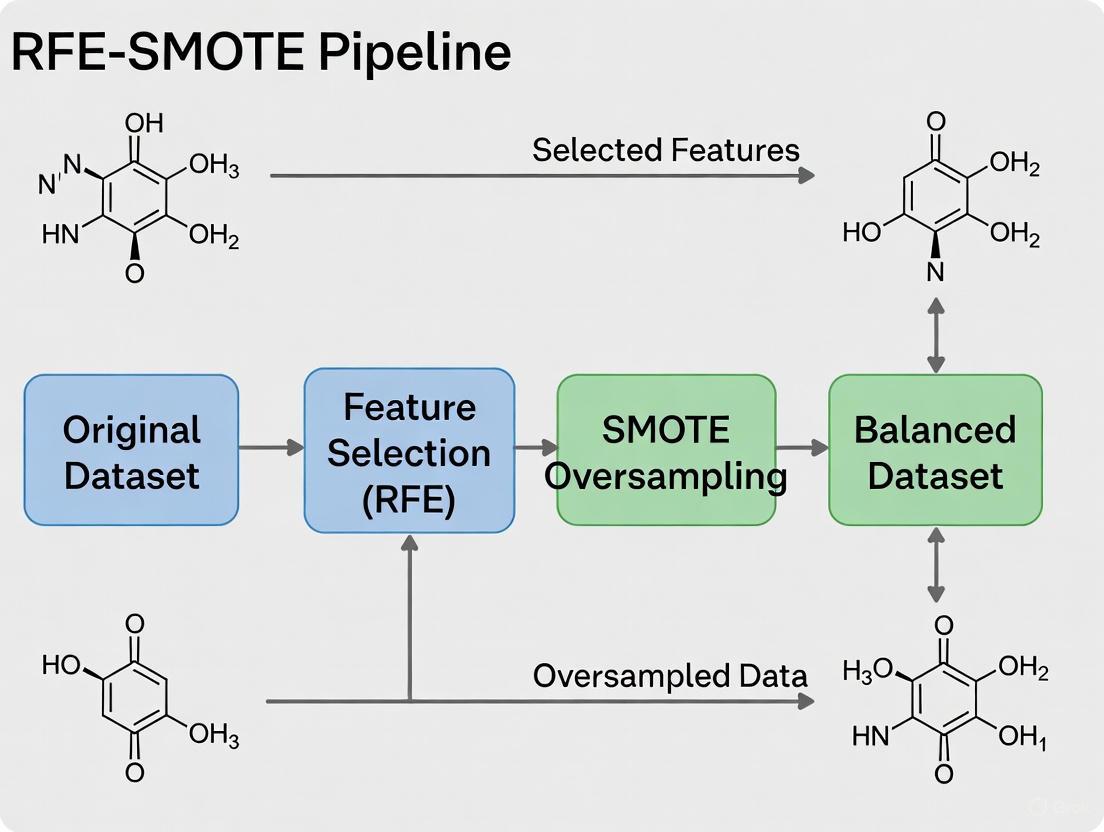

Workflow Visualization

The following diagram illustrates the logical flow and key decision points in the standard RFE-SMOTE pipeline.

Figure 1: RFE-SMOTE Pipeline Workflow. This flowchart outlines the standard protocol for processing imbalanced chemical data, from initial feature selection to final model deployment.

Application Notes & Experimental Protocols

Protocol 1: Implementing RFE for Feature Selection

This protocol details the feature selection process using Recursive Feature Elimination.

1.1 Objective: To identify the most informative feature subset from a high-dimensional chemical dataset (e.g., molecular fingerprints, spectral features, or physiochemical descriptors) to improve model generalizability and performance.

1.2 Materials & Reagents: Table 2: Essential Computational Reagents for RFE-SMOTE Pipeline

| Research Reagent | Function/Description | Example Application in Protocol |

|---|---|---|

| Molecular Fingerprints [9] | Binary vectors encoding molecular structure. | Used as high-dimensional input features for RFE. |

| Estimator (e.g., SVM, Random Forest) [11] [13] | A core ML model used by RFE to rank feature importance. | RFE uses the classifier's coefficients or feature importances. |

| Recursive Feature Elimination (RFE) [11] [13] | A wrapper-mode feature selection method. | Iteratively removes the least important feature(s). |

| Synthetic Minority Oversampling Technique (SMOTE) [14] [11] | A data-level method to balance class distribution. | Generates synthetic samples for the minority class after RFE. |

1.3 Method:

- Data Preparation: Encode chemical structures or reactions into a feature set (e.g., 2048-bit Morgan fingerprints) [9]. Pre-process the data by handling missing values (e.g., replacement with random low values) [7] and applying necessary transformations (e.g., log transformation) [7].

- Initialize RFE: Select an appropriate estimator (e.g., Logistic Regression, Support Vector Machine). Define the target number of features to select or the step-size (number of features removed per iteration).

- Feature Ranking: Fit the RFE model on the training data. The algorithm will recursively train the model and prune the least important features based on the estimator's coefficients or feature importances [11] [13].

- Feature Subset Selection: Obtain the final mask or list of the top

kselected features. Transform the original training and test sets to include only thesekfeatures.

Protocol 2: Applying SMOTE for Data Balancing

This protocol should be applied after feature selection and strictly on the training set only to prevent data leakage.

2.1 Objective: To balance the class distribution of the training data by generating synthetic samples for the minority class, thereby reducing classifier bias.

2.2 Method:

- Data Partitioning: Split the feature-selected dataset into training and testing sets (a typical ratio is 80:20) [9].

- SMOTE Application: Instantiate the SMOTE object (e.g.,

SMOTE()from theimbalanced-learnlibrary). Apply thefit_resamplemethod exclusively to the training data. The algorithm will [14]: a. Select a random example from the minority class. b. Find its k-nearest neighbors (typically k=5). c. Choose a random neighbor and create a synthetic example at a randomly selected point along the line segment connecting the two in feature space. - Data Verification: Post-resampling, verify that the class distribution in the training set is balanced (e.g., using a

Counterobject) [14]. The test set must remain untouched and in its original, imbalanced state to evaluate real-world model performance.

Protocol 3: Model Training and Evaluation

3.1 Objective: To train a machine learning model on the balanced, feature-selected data and evaluate its performance using appropriate metrics.

3.2 Method:

- Model Training: Train the chosen classifier (e.g., Extremely Randomized Trees - ERT, Random Forest, SVM) on the balanced training set.

- Model Evaluation: Predict on the original, imbalanced test set. Use metrics that are robust to imbalance:

- F1-Score: The harmonic mean of precision and recall.

- Recall (Sensitivity): The ability to correctly identify all relevant minority class instances.

- ROC-AUC: The area under the Receiver Operating Characteristic curve.

- G-mean: The geometric mean of sensitivity and specificity [11].

Performance Comparison and Case Studies

The effectiveness of the RFE-SMOTE pipeline and its variants is demonstrated across diverse chemical and clinical research applications.

Table 3: Performance of RFE-SMOTE and Variants in Practical Applications

| Application Field | Dataset & Imbalance Context | Pipeline Used | Reported Performance |

|---|---|---|---|

| Soft Tissue Sarcoma Grading [11] | 252 patient MRI features; Pathological grade imbalance. | RFE + SMOTETomek + Extremely Randomized Trees (ERT) | Accuracy: 81.57% (up to 95.69% with SRS splitting) [11] |

| Liver Disease Diagnosis [12] | Indian Patient Liver Disease (ILPD) dataset; Disease prevalence imbalance. | RFE + SMOTE-ENN + Ensemble Model | Accuracy: 93.2%; Brier Score: 0.032 [12] |

| Antimalarial Drug Discovery [9] | PubChem (AID 720542); 738 active vs. 356,551 inactive compounds. | SMOTE + Gradient Boost Machines (GBM) | Accuracy: 89%; ROC-AUC: 92% [9] |

| Growth Hormone Treatment Detection [7] | 1241 bovine urine samples (65 treated); ~5% imbalance. | SMOTE + Logistic Regression | Effective model for identifying treated animals [7] |

The Scientist's Toolkit

Table 4: Key Software and Analytical Tools

| Tool Name | Type | Function in Pipeline |

|---|---|---|

| scikit-learn | Python Library | Provides implementations for RFE, various classifiers (LogisticRegression, RandomForest), and evaluation metrics. |

| imbalanced-learn | Python Library | Specialized library offering SMOTE, ADASYN, SMOTETomek, and other resampling algorithms [14]. |

| RDKit | Cheminformatics Library | Used to compute molecular descriptors and fingerprints (e.g., Morgan fingerprints) from chemical structures [9]. |

| 7(Z)-Pentacosene | 7(Z)-Pentacosene|High-Purity Reference Standard | 7(Z)-Pentacosene is a high-purity hydrocarbon for entomology and chemical ecology research. This product is For Research Use Only and not for human or veterinary use. |

| Protostemotinine | Protostemotinine | High-purity Protostemotinine (CAS 169534-85-4), a Stemona alkaloid for neuroscience and entomology research. For Research Use Only. Not for human or veterinary use. |

In the field of chemical machine learning (ML), imbalanced datasets are a pervasive and critical challenge, often leading to models that are biased, unreliable, and misleading. Such imbalance occurs when one class of data, typically the class of greatest scientific interest—such as an active drug molecule or a high-performing catalyst—is significantly underrepresented compared to other classes [15]. When trained on these datasets, standard ML models frequently fail to accurately predict the properties or activities associated with these rare instances, directly compromising the robustness and applicability of the models in real-world scenarios like drug discovery and materials design [15].

The integration of feature selection and data balancing techniques offers a powerful solution to these challenges. The RFE-SMOTE pipeline, which combines Recursive Feature Elimination (RFE) for feature selection with the Synthetic Minority Over-sampling Technique (SMOTE) for data balancing, has emerged as a particularly effective strategy [3] [16]. This protocol details the consequences of imbalanced chemical data on ML models and provides a standardized methodology for implementing an RFE-SMOTE pipeline to mitigate these issues, thereby enhancing the predictive performance and generalizability of models in chemical research.

Quantitative Evidence of Pipeline Efficacy

The effectiveness of hybrid pipelines that integrate feature selection with SMOTE is demonstrated by performance improvements across diverse domains, from medical diagnostics to materials science. The following table summarizes quantitative evidence from recent studies.

Table 1: Performance Improvements from Integrated SMOTE-Feature Selection Pipelines

| Application Domain | Dataset | Core Methodology | Key Performance Metrics | Reference |

|---|---|---|---|---|

| Liver Disease Diagnosis | Indian Patient Liver Disease (ILPD) | Hybrid Ensemble (RFE + SMOTE-ENN) | Accuracy: 93.2% Brier Score Loss: 0.032 | [3] |

| Parkinson's Disease Detection | PhysioNet Gait Database | CRISP Pipeline (Correlation Filtering + RFE + SMOTE) | Subject-wise Accuracy: 98.3% (vs. 96.1% baseline) | [16] [17] |

| Polymer Materials Design | 23 Rubber Materials Dataset | Borderline-SMOTE with XGBoost | Improved prediction of mechanical properties after balancing | [15] |

| Catalyst Development | 126 Heteroatom-doped Arsenenes | SMOTE for data balancing | Improved predictive performance for hydrogen evolution reaction catalysts | [15] |

Experimental Protocols

Protocol 1: Standardized RFE-SMOTE Pipeline for Chemical Data

This protocol provides a step-by-step methodology for implementing the RFE-SMOTE pipeline to address data imbalance in chemical ML tasks, such as molecular property prediction.

1. Data Preprocessing and Partitioning

- Input: Raw chemical dataset (e.g., molecular descriptors, assay results).

- Procedure:

- a. Handle missing values using imputation (e.g., median for numerical features, mode for categorical features).

- b. Standardize numerical features by removing the mean and scaling to unit variance.

- c. Split the preprocessed dataset into training and test sets using a standard 70:30 or 80:20 ratio. Crucially, apply all subsequent steps only to the training set to prevent data leakage and ensure a valid evaluation of model generalization [18].

2. Feature Selection via Recursive Feature Elimination (RFE)

- Objective: To identify the most informative features and reduce dimensionality, which can enhance model performance and interpretability.

- Procedure:

- a. Estimator Selection: Choose a base estimator capable of ranking feature importance, such as a Support Vector Machine with a linear kernel or a Decision Tree.

- b. RFE Execution: Use the

RFECV(Recursive Feature Elimination with Cross-Validation) class from scikit-learn to automatically select the optimal number of features. This object recursively removes the least important features, using cross-validation performance on the training set to determine the best feature subset. - c. Transformation: Fit the

RFECVobject on the training set and use it to transform both the training and test sets.

3. Data Balancing with SMOTE

- Objective: To synthetically generate samples for the minority class and balance the class distribution in the training data.

- Procedure:

- a. SMOTE Application: Import

SMOTEfrom theimblearnlibrary. Apply thefit_resamplemethod only to the feature-selected training data from the previous step. This generates new synthetic instances for the minority class by interpolating between existing minority class instances. - b. Validation: Check the class distribution of the resampled training data to confirm balance has been achieved.

- a. SMOTE Application: Import

4. Model Training and Validation

- Procedure:

- a. Training: Train the chosen ML model (e.g., XGBoost, Random Forest) on the balanced, feature-selected training set.

- b. Validation: Use k-fold cross-validation (e.g., 5-fold or 10-fold) on the training set to tune hyperparameters and perform initial model assessment.

- Critical Step: The test set, which was split in Step 1 and transformed in Steps 2 and 3, must remain completely unseen during the training and validation process.

5. Model Evaluation on the Test Set

- Objective: To assess the model's performance on unseen, real-world data.

- Procedure:

- a. Prediction: Use the final trained model to make predictions on the processed test set.

- b. Metrics Calculation: Evaluate performance using metrics robust to imbalanced data:

Protocol 2: Advanced SMOTE Extensions for Complex Data

For datasets where the minority class contains outliers or noise, standard SMOTE can generate poor synthetic samples. This protocol outlines the use of advanced variants.

1. Identify the Need: If initial model performance is poor despite standard SMOTE, the minority class may contain abnormal instances [19]. 2. Select an Advanced SMOTE:

- Dirichlet ExtSMOTE: Uses the Dirichlet distribution to assign weights to neighboring instances, making it more robust to outliers. Reported to achieve superior F1-score, MCC, and PR-AUC [19].

- BGMM SMOTE: Uses Bayesian Gaussian Mixture Models to model the probability distribution of the minority class before generating new samples [19]. 3. Integration with Pipeline: Replace the standard SMOTE in Protocol 1 with the chosen advanced SMOTE extension.

Workflow Visualization

The following diagram illustrates the logical flow and key stages of the standardized RFE-SMOTE pipeline.

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for Implementing the RFE-SMOTE Pipeline

| Tool/Reagent | Function/Description | Application Note |

|---|---|---|

| Scikit-learn | A core open-source ML library in Python. | Provides implementations for RFE, data preprocessing, and base classifiers. Essential for the feature selection and model training steps. |

| Imbalanced-learn (imblearn) | A library extending scikit-learn, dedicated to handling imbalanced datasets. | Provides the SMOTE class and its variants (e.g., SMOTE-NC). Crucially, it provides the Pipeline class that ensures SMOTE is correctly applied only during training folds [18]. |

| XGBoost (Extreme Gradient Boosting) | An optimized ensemble learning algorithm based on gradient boosted decision trees. | Often used as the final classifier due to its high performance. It was the top performer in the CRISP pipeline for Parkinson's detection [16] [17]. |

| Dirichlet ExtSMOTE | An advanced SMOTE extension that uses the Dirichlet distribution to mitigate the influence of outliers in the minority class. | Recommended for complex chemical datasets where the minority class is not homogeneous, as it achieves better F1-score and PR-AUC [19]. |

| Molecular Descriptors & Fingerprints | Numerical representations of chemical structures (e.g., molecular weight, topological indices, ECFP fingerprints). | These are the typical "features" used in chemical ML. RFE is applied to these descriptors to find the most relevant ones for the target property. |

| UVARIGRANOL B | Uvarigranol B|CAS 164204-79-9|For Research | High-purity Uvarigranol B, a natural polyoxygenated cyclohexene for anti-inflammatory and antimycobacterial research. For Research Use Only. Not for human use. |

| Isonormangostin | Isonormangostin, MF:C23H24O6, MW:396.4 g/mol | Chemical Reagent |

In the field of chemical research, the proliferation of high-dimensional data, characterized by a vast number of molecular descriptors or chemical measurements relative to the number of samples, presents significant analytical challenges. These "wide" datasets are frequently imbalanced, where the number of observations belonging to each outcome class (e.g., toxic vs. non-toxic) is unequal [20] [21]. This combination of high dimensionality and class imbalance can severely bias standard machine learning models, causing them to overfit and perform poorly in predicting the minority class, which is often the class of greatest scientific interest [3] [21].

Addressing these challenges requires a robust preprocessing pipeline that integrates feature selection to reduce dimensionality and data resampling to rectify class imbalance. Among the most effective feature selection methods is Recursive Feature Elimination (RFE), a wrapper technique known for its ability to identify a parsimonious set of highly predictive features by iteratively pruning the least important ones [22] [23]. For mitigating class imbalance, the Synthetic Minority Oversampling Technique (SMOTE) and its variants are widely adopted; they generate synthetic examples for the minority class, preventing models from being biased toward the majority class [3] [24]. The strategic combination of RFE and SMOTE into an RFE-SMOTE pipeline offers a powerful, integrated solution for building reliable and interpretable predictive models from complex chemical data [25] [3].

Core Concepts and Definitions

The Problem of High-Dimensional, Imbalanced Data

Wide data, a common feature in modern chemical studies such as toxicology or drug discovery, refers to datasets where the number of features (p) vastly exceeds the number of instances (n) [20]. This structure leads to the curse of dimensionality, increasing the risk of model overfitting, escalating computational costs, and complicating the identification of meaningful patterns amidst noise [20]. When wide data is also imbalanced, the problems are exacerbated. Standard classifiers tend to be overwhelmed by the majority class, leading to a high misclassification rate for the critical minority class—a consequence with serious implications in areas like toxicity prediction, where failing to identify a harmful compound is a grave error [3] [21].

Recursive Feature Elimination (RFE)

RFE is a powerful wrapper feature selection method that operates through a recursive, backward elimination process [22] [23]. Its core strength lies in its iterative reassessment of feature importance, which allows for a more thorough evaluation than single-pass methods [22].

- Process: The algorithm starts by training a model on all features. It then ranks the features based on a model-specific importance metric (e.g., coefficients for linear models, Gini importance for tree-based models), eliminates the least important feature(s), and retrains the model on the reduced subset. This cycle repeats until a predefined number of features remains or a performance threshold is met [22] [23].

- Key Variants: The flexibility of RFE has led to several impactful variants:

- SVM-RFE: The original implementation that uses a Support Vector Machine as the core model, renowned for its effectiveness but often computationally intensive [26].

- RF-RFE: Utilizes a Random Forest model, which is particularly adept at capturing complex, non-linear feature interactions [22] [27].

- Enhanced RFE: Incorporates modifications to the elimination process, such as variable step sizes, to achieve a favorable balance between computational efficiency and performance [26] [23].

- U-RFE (Union with RFE): Employs multiple base estimators to generate different feature subsets and then performs a union analysis to create a final, robust feature set, effectively combining the strengths of various algorithms [28].

Data Resampling with SMOTE

Data resampling techniques adjust the class distribution of a dataset. While simple random oversampling and undersampling are options, they carry risks of overfitting and information loss, respectively [26]. SMOTE provides a more sophisticated alternative.

- SMOTE (Synthetic Minority Oversampling Technique): This algorithm generates synthetic examples for the minority class rather than simply replicating existing instances. It works by identifying a minority class instance's k-nearest neighbors and creating new, interpolated instances along the line segments joining the instance and its neighbors [24].

- Hybrid Variants: To handle noise and outliers more effectively, SMOTE is often combined with cleaning techniques.

- SMOTE-ENN: Combines SMOTE with the Edited Nearest Neighbors (ENN) rule. After oversampling, ENN removes any instance (from both classes) whose class label differs from the class of the majority of its nearest neighbors. This cleans the overlapping regions between classes [3].

- SMOTE-Tomek: Another hybrid method that uses Tomek links to identify and remove borderline or noisy instances after applying SMOTE [3].

Quantitative Comparison of Techniques

Table 1: Performance Comparison of RFE Variants on Different Predictive Tasks

| RFE Variant | Core Model | Key Characteristic | Reported Performance | Best Suited For |

|---|---|---|---|---|

| SVM-RFE | Support Vector Machine | High predictive performance, but can be slow [26]. | Considered a benchmark; high accuracy in gene selection [26]. | Scenarios where predictive accuracy is the top priority and computational resources are sufficient. |

| RF-RFE | Random Forest | Captures complex feature interactions; retains larger feature sets [22] [27]. | AUC: 0.967 in predicting depression risk from chemical exposures [27]. | Complex, high-dimensional datasets with non-linear relationships. |

| Enhanced RFE | Variable | Modifies elimination process for efficiency [26] [23]. | Substantial feature reduction with minimal accuracy loss [22] [23]. | Practical applications requiring a balance between performance, interpretability, and computational cost. |

| U-RFE | Multiple (LR, SVM, RF) | Creates a union feature set from multiple models [28]. | F1-score: 0.851 in classifying multi-category cancer deaths [28]. | High feature redundancy; aims for robust feature sets by leveraging multiple perspectives. |

Table 2: Performance of Data Resampling Techniques on an Imbalanced Liver Disease Dataset

| Resampling Technique | Description | Reported Accuracy | Key Advantage |

|---|---|---|---|

| No Resampling | Original imbalanced dataset (Baseline) | ~71-74% (Baseline) [3] | Highlights the severity of the class imbalance problem. |

| SMOTE-ENN | Synthetic oversampling followed by data cleaning using ENN. | 93.2% [3] | Effectively reduces noise and clarifies class boundaries. |

| SMOTE-ENN with AdaBoost | Combines the cleaned data with a boosting algorithm. | High performance (specific accuracy not stated) [3] | Leverages ensemble learning on a balanced, clean dataset. |

The Integrated RFE-SMOTE Pipeline: Protocol and Application

The sequential integration of RFE and SMOTE forms a cohesive and powerful preprocessing workflow for imbalanced, high-dimensional chemical data. The recommended order is to perform feature selection first, followed by data resampling [20]. This sequence prevents the synthetic instances generated by SMOTE from influencing the feature selection process, thereby ensuring that the selected features are derived from the original data distribution and enhancing the generalizability of the final model.

Experimental Protocol: RFE-SMOTE for Predictive Toxicology

The following protocol, inspired by applications in ionic liquid toxicity prediction and depression risk modeling, provides a detailed methodology for constructing a robust classification model [27] [25].

1. Problem Definition and Data Preparation

- Objective: To build a binary classifier for predicting the toxicity of ionic liquids based on molecular descriptors and fingerprints [25].

- Data Collection: Assemble a dataset containing molecular structures (e.g., represented as SMILES strings) and corresponding toxicity labels (e.g., toxic vs. non-toxic towards a specific organism).

- Feature Engineering: Calculate a comprehensive set of molecular descriptors (e.g., topological, electronic, geometric) and generate molecular fingerprints (e.g., ECFP4) from the structures. This creates the high-dimensional feature space.

2. Feature Selection with Recursive Feature Elimination

- Algorithm: Employ RF-RFE (Random Forest Recursive Feature Elimination) [27].

- Implementation:

- Initialize RFE using a Random Forest classifier as the base estimator.

- Use a step-size strategy, such as eliminating 10% of the lowest-ranked features at each iteration, to balance speed and thoroughness [26].

- Integrate the RFE process within a 10-fold cross-validation loop to ensure robust feature ranking and mitigate overfitting [27].

- The output of this stage is an optimal subset of the most informative molecular descriptors.

3. Data Balancing with SMOTE-ENN

- Algorithm: Apply the SMOTE-ENN hybrid resampler to the feature-selected dataset [3].

- Implementation:

- First, apply SMOTE to the minority class (e.g., "toxic" compounds) to synthetically increase its representation until the classes are balanced.

- Subsequently, apply the Edited Nearest Neighbors (ENN) rule. For each instance in the dataset, if its class label differs from the majority of its k (typically k=3) nearest neighbors, remove that instance. This step cleans the dataset of noisy and borderline examples from both classes.

4. Model Training and Validation

- Model Training: Train a final predictive model, such as a Random Forest or an XGBoost classifier, on the processed dataset (which now has a reduced feature set and balanced classes) [27] [25].

- Hyperparameter Tuning: Use a technique like GridSearchCV to systematically optimize the model's hyperparameters, further enhancing performance [25].

- Performance Evaluation: Validate the model using a strict hold-out test set or nested cross-validation. Report metrics that are robust to imbalance, including:

- Area Under the ROC Curve (AUC-ROC)

- F1-Score

- Precision and Recall (prioritizing high recall if identifying all toxic compounds is critical)

The Scientist's Toolkit: Essential Research Reagents

Table 3: Key Computational Tools and Their Functions in the RFE-SMOTE Pipeline

| Tool / Algorithm | Category | Primary Function in the Pipeline | Key Parameters to Optimize |

|---|---|---|---|

| Random Forest (RF) | Ensemble Model / Base Estimator for RFE | Serves as the core model for RF-RFE, providing robust feature importance scores based on Gini impurity or mean decrease in accuracy [22] [27]. | n_estimators, max_depth, max_features |

| SMOTE-ENN | Hybrid Resampler | Generates synthetic minority class instances (SMOTE) and subsequently cleans the resulting dataset by removing noisy samples (ENN), leading to well-defined class clusters [3]. | sampling_strategy (SMOTE), n_neighbors (for both SMOTE and ENN) |

| k-Fold Cross-Validation | Model Validation Framework | Integrated within the RFE process to provide a robust estimate of feature importance and model performance, guarding against overfitting [27]. | number_of_folds (typically 5 or 10) |

| GridSearchCV | Hyperparameter Optimization | Exhaustively searches a predefined parameter grid for the final predictive model to identify the combination that yields the best cross-validated performance [25]. | param_grid, cv (number of cross-validation folds) |

| Molecular Descriptors/Fingerprints | Chemical Feature Representation | Quantitative representations of chemical structure that form the high-dimensional input feature space for the pipeline (e.g., for QSAR modeling) [25]. | Descriptor type (e.g., topological, electronic), fingerprint type and radius (e.g., ECFP4) |

| 6-O-Feruloylglucose | 6-O-Feruloylglucose for Research|Feruloyl Glucose | High-purity 6-O-Feruloylglucose, a natural hydroxycinnamic acid glycoside for plant metabolism and food chemistry research. For Research Use Only. Not for human consumption. | Bench Chemicals |

| Junipediol B | Junipediol B, MF:C10H12O4, MW:196.20 g/mol | Chemical Reagent | Bench Chemicals |

Imbalanced data presents a significant challenge in chemical machine learning (ML), where critical classes—such as active drug molecules or toxic compounds—are often severely underrepresented [15]. This imbalance leads to biased models that fail to accurately predict minority class properties, ultimately limiting their utility in drug discovery and materials science [15]. Addressing this issue requires sophisticated approaches that simultaneously manage class distribution and feature space complexity.

This application note explores the integration of Recursive Feature Elimination (RFE) and the Synthetic Minority Over-sampling Technique (SMOTE) as a synergistic pipeline for analyzing imbalanced chemical data. We detail the theoretical foundations, provide validated experimental protocols, and present performance metrics from real-world chemical applications to guide researchers in implementing this powerful combined approach.

Theoretical Foundations and Synergy

The Class Imbalance Problem in Chemical Data

In chemical datasets, imbalance arises from natural molecular distribution biases, selection bias in experimental data collection, and the inherent rarity of target phenomena [15]. For instance, in high-throughput screening for drug discovery, the number of active compounds is typically dwarfed by inactive ones [15] [9]. Standard ML classifiers exhibit bias toward majority classes, resulting in poor sensitivity for detecting critical minority classes like bioactive molecules or hazardous materials.

SMOTE for Data Balancing

The Synthetic Minority Over-sampling Technique (SMOTE) addresses class imbalance by generating synthetic minority class samples through linear interpolation between existing minority instances and their k-nearest neighbors [15] [29]. This data-level approach enhances the model's ability to learn minority class characteristics without simple duplication, thereby reducing overfitting [15].

While powerful, SMOTE has limitations: it can amplify the effect of outliers and noisy examples, and generated samples may not always perfectly conform to the true underlying minority class distribution [19] [29]. Advanced variants like Borderline-SMOTE, ADASYN, and Dirichlet ExtSMOTE have been developed to mitigate these issues, particularly when abnormal instances exist within the minority class [15] [19].

Recursive Feature Elimination (RFE) for Feature Selection

Recursive Feature Elimination (RFE) is a wrapper-style feature selection method that recursively constructs a model, ranks features by their importance, and removes the least important features [3] [25]. When paired with Random Forest—which provides robust Gini importance metrics—RFE becomes particularly effective for high-dimensional chemical data like molecular fingerprints or spectral features [30].

The Gini importance measures the total reduction in node impurity (Gini impurity) achieved by a feature across all trees in the forest, providing a multivariate feature relevance score that captures complex interactions [30]. RFE using this importance eliminates irrelevant features, reduces noise, and improves model generalizability.

Synergistic Benefits of the Combined Pipeline

The RFE-SMOTE pipeline delivers complementary advantages that address core challenges in imbalanced chemical data analysis:

- Enhanced Model Generalization: RFE removes noisy, irrelevant, or redundant features that can mislead SMOTE's interpolation process and degrade synthetic sample quality. This results in a more discriminative feature space for subsequent classification [30].

- Optimal Data Utilization: SMOTE enables balanced learning, while RFE ensures the model focuses on the most predictive molecular descriptors or fingerprints, preventing overfitting to spurious correlations in high-dimensional data [9] [25].

- Improved Computational Efficiency: Dimensionality reduction via RFE decreases computational costs for both model training and the SMOTE synthetic sample generation process [30].

Experimental Protocols and Workflows

Comprehensive RFE-SMOTE Workflow

The following diagram illustrates the integrated pipeline for processing imbalanced chemical data:

Step-by-Step Protocol for Classification of Bioactive Compounds

Objective: To build a predictive model for identifying active inhibitors of the AMA-1–RON2 protein interaction, a target for antimalarial drug discovery [9].

Dataset:

- Source: PubChem BioAssay (AID 720542) [9]

- Initial Composition: 738 active compounds (minority) vs. 356,551 inactive compounds (majority) [9]

- Preprocessing: Remove inconclusive samples, deduplicate, and convert SMILES structures to molecular fingerprints.

Protocol:

Data Preparation and Splitting

- Encode molecular structures using 2048-bit Morgan fingerprints (radius=2) via the RDKit library [9].

- Split data into training (80%) and test (20%) sets, stratifying by activity class to preserve imbalance ratio in the test set.

- Critical Note: The test set must remain completely untouched by SMOTE to ensure realistic performance evaluation.

Recursive Feature Elimination (RFE)

- Initialize a

RandomForestClassifieron the training set only. - Rank all 2048 fingerprint features by their Gini importance [30].

- Implement RFE to recursively remove the least important features. Evaluate cross-validation performance (e.g., 5-fold) at each step to identify the optimal number of features.

- Transform both training and test sets to retain only the optimal feature subset.

- Initialize a

Data Balancing with SMOTE

- Apply the SMOTE algorithm exclusively to the training set that has been reduced by RFE.

- Use the default k-nearest neighbors value (k=5) for interpolation. For datasets with potential outliers, consider robust variants like Dirichlet ExtSMOTE [19].

- Balance the minority and majority classes to a 1:1 ratio, resulting in a final balanced training set.

Model Training and Validation

- Train the final classification model (e.g.,

GradientBoostingMachineorRandomForestClassifier) on the balanced, feature-selected training set. - Predict on the original, unmodified test set.

- Evaluate performance using metrics robust to imbalance: F1-score, Matthews Correlation Coefficient (MCC), Precision-Recall AUC (PR-AUC), and ROC-AUC [19] [9].

- Train the final classification model (e.g.,

The Scientist's Toolkit: Essential Research Reagents

Table 1: Key software tools and libraries for implementing the RFE-SMOTE pipeline.

| Tool/Library | Type | Primary Function | Application Note |

|---|---|---|---|

| RDKit | Cheminformatics Library | Generates molecular descriptors and Morgan fingerprints from SMILES [9]. | Encodes chemical structures into numerical features for ML. |

| scikit-learn | ML Library | Provides RandomForestClassifier, RFE, and evaluation metrics [31]. |

Core framework for building the entire RFE-SMOTE pipeline. |

| imbalanced-learn | ML Library | Implements SMOTE and its advanced variants (e.g., SMOTE-ENN) [3]. | Handles all data-level resampling operations. |

| SMOTE-ENN | Hybrid Resampler | Combines SMOTE with Edited Nearest Neighbors to clean overlapping samples [3] [12]. | Useful when the class boundary is unclear. |

| Gini Importance | Feature Metric | Measures feature relevance based on total impurity reduction in Random Forest [30]. | The core ranking criterion for the RFE process. |

| Cnidioside B methyl ester | Cnidioside B methyl ester, MF:C19H24O10, MW:412.4 g/mol | Chemical Reagent | Bench Chemicals |

| Evofolin C | Evofolin C, MF:C14H18O2, MW:218.29 g/mol | Chemical Reagent | Bench Chemicals |

Performance Metrics and Comparative Analysis

Quantitative Performance in Chemical Applications

The effectiveness of the RFE-SMOTE pipeline is demonstrated by its application across diverse chemical domains, from drug discovery to materials science.

Table 2: Performance metrics of the RFE-SMOTE pipeline in real-world chemical applications.

| Application Domain | Dataset / Target | Key Methodology | Performance Outcome | Citation |

|---|---|---|---|---|

| Antimalarial Drug Discovery | AMA-1–RON2 Inhibitors | Morgan Fingerprints + RFE + SMOTE + GBM | Accuracy: 89%, ROC-AUC: 92% | [9] |

| Materials Science | Polymer Material Properties | Feature Selection + Borderline-SMOTE + XGBoost | Improved prediction of mechanical properties on balanced datasets. | [15] |

| Catalyst Design | Hydrogen Evolution Reaction Catalysts | SMOTE for data balancing + ML model | Enhanced predictive performance and candidate screening. | [15] |

| Toxicity Prediction | Ionic Liquid Toxicity | RFE + Data Augmentation + Meta-Ensemble | R²: 0.99, MAE: 0.024 (with augmentation) | [25] |

Impact of Individual Pipeline Components

Table 3: Comparative analysis of model performance with different preprocessing strategies.

| Preprocessing Strategy | Estimated Accuracy | Estimated PR-AUC | Key Advantages | Limitations Mitigated |

|---|---|---|---|---|

| Baseline (No Processing) | Low | Low | — | Baseline for comparison. |

| SMOTE Only | Medium | Medium | Improves recall for the minority class. | Class imbalance. |

| RFE Only | Medium | Medium | Reduces overfitting; improves interpretability. | High dimensionality, noisy features. |

| RFE + SMOTE (Full Pipeline) | High | High | Synergistic improvement in generalizability and predictive power. | Both imbalance and high dimensionality. |

Advanced Strategies and Practical Considerations

Enhanced SMOTE Variants

For challenging datasets with significant noise or complex distributions, consider these advanced SMOTE extensions:

- Borderline-SMOTE: Identifies and oversamples minority instances near the class decision boundary, which are often most critical for classification [15].

- Dirichlet ExtSMOTE: Leverages the Dirichlet distribution to generate synthetic samples, demonstrating improved F1-score and MCC, particularly in the presence of abnormal minority instances [19].

- SMOTE-ENN: A hybrid method that combines SMOTE with the Edited Nearest Neighbors (ENN) rule to clean the resulting data by removing both majority and minority samples that are misclassified by their nearest neighbors [3] [12]. This is highly effective for datasets with high class overlap.

Critical Implementation Notes

- Data Leakage Prevention: The most crucial practice is to perform feature selection (RFE) and oversampling (SMOTE) exclusively on the training dataset. The test set must remain completely unmodified to obtain a truthful assessment of model performance on real-world, imbalanced data [9].

- Algorithm Selection: Tree-based ensemble methods like Random Forest and Gradient Boosting Machines (GBM) are naturally suited for this pipeline, as they provide robust feature importance measures and perform well on the structured data derived from chemical features [9] [30].

- Evaluation Metrics: Always prioritize metrics like PR-AUC, F1-score, and MCC over accuracy for model selection and evaluation, as they give a more realistic picture of performance on the minority class [19] [32].

The integration of Recursive Feature Elimination and SMOTE presents a powerful, synergistic strategy for tackling the pervasive challenge of imbalanced data in chemical ML. This pipeline systematically reduces feature space noise and complexity while creating a representative data distribution for model training. The provided protocols, performance benchmarks, and toolkit offer researchers a validated roadmap for enhancing the predictive accuracy and reliability of models in critical areas such as drug discovery and materials design, ultimately accelerating the path from data to discovery.

Building Your RFE-SMOTE Pipeline: A Step-by-Step Guide for Chemical Data

In chemical research, from drug discovery to materials science, the issue of imbalanced data is a pervasive challenge that can severely compromise the performance of machine learning models [1]. This phenomenon occurs when one class of data (e.g., active drug molecules, toxic compounds, or specific material properties) is significantly underrepresented compared to other classes [1]. Most conventional machine learning algorithms, including random forests and support vector machines, exhibit a inherent bias toward the majority class because they are designed to maximize overall accuracy, often at the expense of minority class recognition [1] [33]. In critical applications such as fraud detection, medical diagnostics, and chemical compound classification, misclassification of minority class instances can have substantial consequences [34] [1].

The Synthetic Minority Over-sampling Technique (SMOTE) was developed specifically to address this problem through an intelligent data-level approach that generates synthetic samples for the minority class rather than simply duplicating existing instances [14] [35]. Unlike random oversampling, which merely creates copies of minority class examples and can lead to overfitting, SMOTE creates synthetic examples through interpolation between existing minority class instances [14] [36]. This approach helps classifiers build more robust decision regions that encompass nearby minority class points, ultimately improving model generalization and performance on imbalanced chemical datasets [14].

The Core SMOTE Algorithm: Mechanism and Generation Process

Fundamental Principles and Step-by-Step Methodology

The SMOTE algorithm operates on the principle of feature space interpolation between existing minority class instances to generate plausible synthetic examples [14] [35]. The technique fundamentally expands the feature space representation of the minority class by creating new instances that lie between existing ones, thereby encouraging the development of larger and more general decision regions during classifier training [14]. The algorithm follows a systematic, multi-step process that can be implemented programmatically.

The complete SMOTE procedure unfolds through the following operational stages:

- Identification of Minority Class Instances: The algorithm begins by isolating all instances belonging to the minority class from the dataset [35] [36].

- Nearest Neighbor Calculation: For each minority class instance, the algorithm computes its k-nearest neighbors within the minority class using Euclidean distance in the feature space [14] [33]. The typical default value for k is 5 [14] [36].

- Synthetic Instance Generation: The algorithm randomly selects one of the k-nearest neighbors and creates a synthetic data point along the line segment connecting the original instance and the selected neighbor in feature space [14] [33].

- Iteration and Balancing: This process repeats for all minority class instances until the desired class balance is achieved, with the number of synthetic samples determined by a specified oversampling ratio [35].

The mathematical formulation for generating a new synthetic sample can be expressed as:

[x{\text{new}} = xi + \lambda \times (x{zi} - xi)]

Where (xi) is the original minority instance, (x{zi}) is one of its randomly selected k-nearest neighbors, and (\lambda) is a random number between 0 and 1 [33]. This interpolation formula ensures that synthetic examples are generated along the line segment between existing minority class points in the feature space.

Visual Representation of the SMOTE Mechanism

The following diagram illustrates the step-by-step process of synthetic sample generation in the core SMOTE algorithm:

Diagram 1: The step-by-step logical workflow of the core SMOTE algorithm for generating synthetic minority class samples.

Advanced SMOTE Variants and Their Quantitative Performance

Comparative Analysis of SMOTE Algorithm Family

While the standard SMOTE algorithm provides a fundamental solution to class imbalance, numerous variants have been developed to address specific limitations and adapt to different data characteristics [34] [35]. These variants improve upon the original algorithm by incorporating considerations for class boundaries, data density, feature types, and noise handling, making them particularly valuable for complex chemical datasets with unique distribution patterns [34] [1].

Table 1: Comprehensive Comparison of SMOTE Variants and Their Performance Characteristics

| Algorithm | Key Innovation | Best Use Cases | Performance Advantages | Limitations |

|---|---|---|---|---|

| Standard SMOTE | Linear interpolation between minority samples | Numeric datasets with moderate imbalance [35] | Creates diverse samples without replication [14] | May generate noise in overlapping regions; ignores internal distribution [34] |

| Borderline-SMOTE | Focuses on minority samples near class boundaries [35] | Datasets with class overlap and boundary confusion [35] | Strengthens decision boundaries; reduces misclassification at borders [34] | May ignore safe minority regions; sensitive to noise at boundaries [34] |

| ADASYN | Adaptive generation based on learning difficulty [35] | When imbalance severity differs across regions [35] | Shifts decision boundary toward difficult samples; adaptive distribution [34] | Can over-emphasize outliers; may increase complexity [34] |

| SMOTE-ENN | Combines oversampling with noise removal [35] | Noisy datasets with mislabeled samples [35] | Produces cleaner datasets; improves generalization [35] | May remove meaningful minority samples; increases computational cost [35] |

| SMOTE-NC | Handles mixed categorical and numerical features [35] | Datasets with both feature types [35] | Preserves categorical feature integrity; appropriate for real-world datasets [35] | Not suitable for purely numerical data; more complex implementation [35] |

| K-Means SMOTE | Incorporates clustering before oversampling [34] | Datasets with intra-class imbalance [34] | Addresses both inter-class and intra-class imbalance; reduces noise generation [34] | Sensitive to clustering parameters; may increase classification errors [34] |

Quantitative Performance Metrics Across Domains

Extensive experimental evaluations have demonstrated the performance improvements achievable through SMOTE and its variants across multiple domains. Recent research on an improved SMOTE algorithm (ISMOTE) reported significant performance enhancements compared to mainstream oversampling algorithms [34]. When evaluated across thirteen public datasets from KEEL, UCI, and Kaggle repositories using three different classifiers, the ISMOTE algorithm achieved relative improvements of 13.07% in F1-score, 16.55% in G-mean, and 7.94% in AUC compared to other methods [34].

In chemical research applications, SMOTE has demonstrated particularly valuable performance enhancements. In materials design, SMOTE combined with Extreme Gradient Boosting (XGBoost) improved the prediction accuracy of mechanical properties of polymer materials by effectively resolving class imbalance issues [1]. Similarly, in catalyst design applications, SMOTE addressed uneven data distribution in original datasets, significantly improving the predictive performance of machine learning models for hydrogen evolution reaction catalyst screening [1].

Table 2: Quantitative Performance Metrics of SMOTE and Variants Across Chemical Applications

| Application Domain | Base Classifier | Evaluation Metric | Without SMOTE | With SMOTE | Improvement |

|---|---|---|---|---|---|

| Polymer Materials Design [1] | XGBoost | Prediction Accuracy | Not Reported | Not Reported | Significant Enhancement |

| Catalyst Design [1] | Machine Learning Models | Predictive Performance | Not Reported | Not Reported | Notable Improvement |

| HDAC8 Inhibitor Screening [1] | Random Forest | Predictive Accuracy | Not Reported | Not Reported | Best Performance Achieved |

| General Benchmark (13 Datasets) [34] | Multiple Classifiers | F1-Score | Baseline | +13.07% | 13.07% Relative Improvement |

| General Benchmark (13 Datasets) [34] | Multiple Classifiers | G-Mean | Baseline | +16.55% | 16.55% Relative Improvement |

| General Benchmark (13 Datasets) [34] | Multiple Classifiers | AUC | Baseline | +7.94% | 7.94% Relative Improvement |

Experimental Protocols for SMOTE Implementation in Chemical Research

Standardized Protocol for SMOTE Application

Implementing SMOTE effectively in chemical research requires careful attention to data preprocessing, parameter selection, and model validation. The following step-by-step protocol provides a standardized methodology for applying SMOTE to imbalanced chemical datasets, ensuring reproducible and scientifically valid results.

Phase 1: Data Preprocessing and Exploration

- Data Cleaning and Normalization: Begin by addressing missing values, outliers, and normalization of features, as SMOTE's distance-based approach is sensitive to feature scales [33] [36]. For chemical datasets, this may include handling of null values in compound properties or reaction outcomes.

- Class Distribution Analysis: Quantify the imbalance ratio by calculating the ratio of majority to minority class samples [14] [33]. In chemical contexts, this might involve analyzing the ratio of active to inactive compounds or successful to unsuccessful reaction conditions.

- Feature Selection (Optional): Apply feature selection techniques such as Recursive Feature Elimination (RFE) to remove irrelevant variables that might distort the nearest neighbor calculations in SMOTE [37] [38]. This is particularly valuable in high-dimensional chemical data, such as molecular descriptors or spectroscopic features.

Phase 2: SMOTE Implementation and Parameter Configuration

- Algorithm Selection: Choose the appropriate SMOTE variant based on dataset characteristics (refer to Table 1). For chemical datasets with mixed data types (e.g., continuous molecular properties and categorical structural features), SMOTE-NC is typically most appropriate [35].

- Parameter Optimization: Configure key parameters, including:

- Synthetic Data Generation: Apply the selected SMOTE algorithm to generate synthetic minority class samples, ensuring that the sampling strategy aligns with the research objectives and dataset characteristics [35] [36].

Phase 3: Model Training and Validation

- Dataset Splitting: Partition the data into training and test sets before applying SMOTE, applying the technique only to the training set to prevent data leakage and overoptimistic performance estimates [14].

- Classifier Selection and Training: Implement appropriate classifiers for the chemical research context (e.g., Random Forest for compound classification, SVM for materials property prediction) using the SMOTE-augmented training data [1].

- Performance Validation: Evaluate model performance on the untouched test set using metrics appropriate for imbalanced data, including F1-score, G-mean, and AUC, rather than simple accuracy [34] [1].

Implementation Example Using Python

The following code example demonstrates the practical implementation of SMOTE using the imbalanced-learn library in Python, a common environment for chemical informatics research:

The RFE-SMOTE Pipeline for Enhanced Chemical Data Analysis

Integrated Feature Selection and Data Balancing

The integration of Recursive Feature Elimination (RFE) with SMOTE creates a powerful pipeline for addressing both feature redundancy and class imbalance simultaneously—a common scenario in chemical datasets [37] [38]. RFE is a feature selection algorithm that works by recursively removing the least important features and building a model on the remaining features until a specified number of features is reached [37]. This approach is particularly valuable for chemical data, which often contains numerous molecular descriptors, spectral features, or reaction conditions that may have varying degrees of relevance to the target property or activity [1].

The synergistic combination of RFE and SMOTE follows a sequential process where feature selection precedes synthetic sample generation. This order is crucial because feature selection performed after SMOTE might be biased by the synthetically generated samples [37] [38]. The RFE-SMOTE pipeline ensures that synthetic instances are generated in a reduced feature space containing only the most relevant variables, potentially improving both the quality of synthetic samples and the overall model performance [38].

Workflow Visualization of the Integrated RFE-SMOTE Pipeline

The following diagram illustrates the complete integrated workflow combining Recursive Feature Elimination with SMOTE for optimized processing of imbalanced chemical datasets:

Diagram 2: Integrated RFE-SMOTE pipeline for optimized processing of imbalanced chemical datasets, combining feature selection with synthetic data generation.

Implementation Protocol for RFE-SMOTE Pipeline

The effective implementation of the RFE-SMOTE pipeline requires careful sequencing of operations to prevent data leakage and ensure optimal performance:

- Initial Data Partitioning: Split the complete chemical dataset into training and testing subsets, typically using a 70:30 or 80:20 ratio [33].

- Feature Selection with RFE: Apply Recursive Feature Elimination exclusively to the training set to identify the most predictive features [37] [38]. Utilize a classifier appropriate for the chemical context (e.g., Random Forest for complex nonlinear relationships, Logistic Regression for interpretability) to determine feature importance [38].

- Feature Space Transformation: Apply the feature selection model to both training and test sets to create reduced-dimension datasets containing only the selected features [37].

- Synthetic Sample Generation with SMOTE: Apply the appropriate SMOTE variant exclusively to the transformed training data to generate synthetic minority class samples [35].

- Model Training and Validation: Train the final classification model on the balanced, feature-optimized training data and evaluate its performance on the untouched test set [14] [38].

This sequential approach ensures that feature selection is not influenced by synthetic samples and that the test set remains completely unseen during the training and optimization process, providing an unbiased evaluation of model performance.

Successful implementation of SMOTE-based methodologies in chemical research requires both computational tools and domain-specific resources. The following table outlines the essential components of the SMOTE research toolkit for chemical scientists.

Table 3: Essential Research Reagents and Computational Tools for SMOTE Implementation in Chemical Research

| Tool/Resource | Type | Specifications | Application in Chemical Research |

|---|---|---|---|

| Imbalanced-Learn (imblearn) | Python Library | Version 0.5.0 or higher [14] | Provides SMOTE and variants; integrates with scikit-learn pipeline [14] [35] |

| Scikit-Learn | Python Library | Version 0.22.1 or higher [38] | Offers RFE implementation; standard ML algorithms [37] [38] |

| Chemical Descriptors | Data Features | Molecular weight, logP, polar surface area, etc. [1] | Feature set for compound characterization in drug discovery [1] |

| Material Properties | Data Features | Mechanical, thermal, electronic properties [1] | Feature set for materials science applications [1] |

| Reaction Conditions | Data Features | Temperature, catalyst, solvent, concentration [1] | Feature set for reaction optimization and catalysis research [1] |

| Computational Environment | Infrastructure | Python 3.6+, Jupyter Notebook, adequate RAM for large datasets | Execution environment for SMOTE algorithms and chemical data analysis |

The deconstruction of SMOTE's synthetic data generation mechanism reveals a sophisticated approach to addressing the fundamental challenge of class imbalance in chemical datasets. By generating synthetic minority class samples through intelligent interpolation in feature space, SMOTE and its advanced variants enable more robust and accurate predictive models across diverse chemical research domains, from drug discovery to materials design [34] [1].

The integration of SMOTE within a comprehensive RFE-SMOTE pipeline further enhances its utility by addressing both feature redundancy and class imbalance simultaneously [37] [38]. This integrated approach is particularly valuable for chemical datasets characterized by high dimensionality and skewed class distributions [1]. As chemical research continues to generate increasingly complex and heterogeneous datasets, the continued evolution of SMOTE methodologies—including potential integrations with deep learning architectures, transfer learning frameworks, and domain-aware synthetic generation techniques—promises to further enhance its applicability and performance [34] [36].

When implemented following the standardized protocols and best practices outlined in this article, SMOTE provides chemical researchers with a powerful methodology for extracting meaningful insights from imbalanced datasets, ultimately accelerating the discovery and development of novel compounds, materials, and chemical processes.

Class imbalance is a pervasive challenge in chemical data science, particularly in drug discovery and molecular property prediction, where active compounds are often significantly outnumbered by inactive ones [1]. This imbalance can bias standard machine learning models, leading to poor predictive performance for the critical minority class. While the Synthetic Minority Over-sampling Technique (SMOTE) is a widely used solution, its linear interpolation between minority class instances often fails at complex chemical boundaries, potentially generating noisy samples that degrade model performance [34] [39].

Advanced SMOTE variants have been developed to address these limitations by strategically focusing on specific regions of the feature space. This Application Note explores three such variants—Borderline-SMOTE, SVM-SMOTE, and ADASYN—within the context of a broader Recursive Feature Elimination (RFE)-SMOTE pipeline for imbalanced chemical data. We provide a detailed comparative analysis, experimental protocols, and implementation frameworks to guide researchers in selecting and applying these methods effectively.

The following table summarizes the key characteristics, mechanisms, and optimal use cases for the three advanced SMOTE variants discussed in this note.

Table 1: Comparative Overview of Advanced SMOTE Variants

| Feature | Borderline-SMOTE | SVM-SMOTE | ADASYN |

|---|---|---|---|

| Core Mechanism | Identifies and oversamples "borderline" minority instances that are at risk of misclassification [40]. | Utilizes Support Vector Machines (SVM) to identify support vectors and generates samples near the decision boundary [40]. | Generates more synthetic samples for minority instances that are harder to learn, based on the local imbalance [41]. |

| Region of Focus | Decision boundary regions where minority and majority classes meet [40]. | Proximity of the SVM-derived optimal separating hyperplane [40]. | Hard-to-learn regions, determined by the density of majority class neighbors [41]. |

| Handling of Noise | Filters out noise by ignoring minority samples where all nearest neighbors are from the majority class [39] [40]. | Robust to noise due to the inherent properties of SVM, which focuses on support vectors [42]. | Can be susceptible to noise if outliers in the minority class are considered hard-to-learn [41]. |

| Ideal Chemical Data Scenario | Datasets with a clear but precarious separation between active/inactive compounds or toxic/non-toxic molecules. | Datasets with low degrees of overlap and a complex, non-linear decision boundary [40]. | Scenarios with sparse minority class regions and a high need to adaptively shift the decision boundary. |

| Key Advantage | Strengthens the minority class side of the decision boundary, reducing misclassification. | Creates a more defined and generalizable decision boundary by oversampling near support vectors. | Adaptively reduces bias by focusing on the most difficult minority class examples. |

Integration with an RFE-SMOTE Pipeline

Integrating these oversampling variants into a feature selection pipeline is crucial for robust model development. The CRISP (Correlation-filtered Recursive feature elimination and Integration of SMOTE Pipeline) framework demonstrates this effectively [16]. This multi-stage, lightweight framework sequentially applies:

- Correlation-based Feature Pruning: Removes highly redundant features to reduce dimensionality and computational cost.

- Recursive Feature Elimination (RFE): Selects the most informative feature subset for the classification task.

- SMOTE-based Class Balancing: Applies an advanced SMOTE variant (e.g., Borderline-SMOTE, SVM-SMOTE, or ADASYN) to the training folds generated during cross-validation, preventing data leakage and ensuring a balanced dataset for model training [16].

This pipeline has shown significant performance improvements in real-world applications. For instance, in gait-based Parkinson's disease screening using Vertical Ground-Reaction Force (VGRF) data, the CRISP pipeline boosted the subject-wise detection accuracy of an XGBoost classifier from 96.1% to 98.3% [16].

Workflow Visualization

The following diagram illustrates the logical flow of the integrated RFE-SMOTE pipeline, highlighting the stage where a specific SMOTE variant is applied.

Experimental Protocols

This section provides detailed methodologies for implementing the discussed SMOTE variants, designed to be reproducible for research scientists.

Protocol for Borderline-SMOTE

Objective: To generate synthetic samples specifically along the decision boundary to reinforce minority class regions most vulnerable to misclassification.

Materials: Python environment with imbalanced-learn (imblearn) library installed.

Procedure:

- Data Preprocessing: Standardize or normalize all numerical features. Encode categorical features if present (note: standard Borderline-SMOTE requires numerical data).

- Identify Borderline Instances: For each minority class sample, find its k-nearest neighbors (typically k=5). Categorize the sample as:

- Noise: If all k-nearest neighbors belong to the majority class. (Ignore this sample for oversampling).

- Borderline: If more than half, but not all, of its k-nearest neighbors belong to the majority class.

- Safe: If more than half of its k-nearest neighbors belong to the minority class.

- Synthetic Sample Generation: For each identified "borderline" instance:

- Randomly select one of its nearest neighbors that belongs to the minority class.

- Compute the difference vector between the selected neighbor and the borderline instance.

- Multiply this vector by a random number between 0 and 1.

- Add this scaled vector to the borderline instance to create a new synthetic sample [40].

- Integration: Add the newly generated synthetic samples to the original training set to create a balanced dataset.

Protocol for SVM-SMOTE

Objective: To create synthetic samples in the feature space proximate to the support vectors, thereby refining the optimal separating hyperplane.

Procedure:

- Data Preprocessing: Standardize/normalize features. SVM is sensitive to feature scales.

- Train an SVM Model: Fit a linear or non-linear Support Vector Machine classifier on the original imbalanced training data to obtain the support vectors.

- Identify Minority Support Vectors: Isolate the support vectors that belong to the minority class.

- Generate Synthetic Samples: For each minority support vector:

- Find its k-nearest neighbors from the entire dataset (or only minority class, depending on implementation).

- If fewer than half of these neighbors are from the majority class, perform extrapolation to expand the minority class region outward.

- Else, perform interpolation between the support vector and its minority neighbors to consolidate the existing region [40].

- Integration: Combine the synthetic samples with the original training data.

Protocol for ADASYN

Objective: To adaptively generate more synthetic samples for "hard-to-learn" minority class examples based on local class imbalance.

Procedure:

- Data Preprocessing: Standardize/normalize features.

- Compute Local Imbalance: For each minority class sample

xi, find its k-nearest neighbors. Calculate the ratioriof majority class samples among these k neighbors. - Normalize Ratios: Normalize the

rivalues to create a density distributiongi, wheregi = ri / sum(r)for all minority samples. This ensures that the total number of synthetic samples generated is proportional to the overall imbalance. - Determine Samples per Instance: For each minority sample